Introduction to AOLserver, Part 2

by Philip Greenspun,

illustrated by

Mina

Reimer

(Written in July 1999 for www.linuxworld.com; permanently

installed now in Web Tools Review)

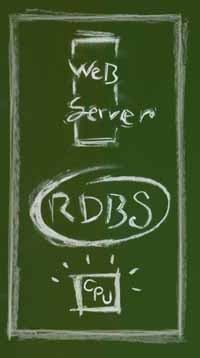

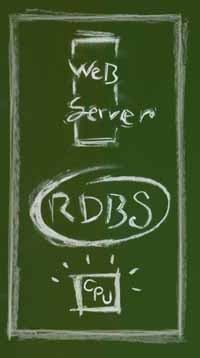

As noted in Part 1 of this article,

AOLserver was designed from the ground up as a tool for building

database-backed Web sites. What does that mean architecturally?

- the server runs as one Unix process

- built-in support for connection pooling (reusing a connection to the

RDBMS from request to request)

- abstraction layer isolating Web scripts from the details of the

particular RDBMS being used

- log file containing a sequential record of all queries sent to the RDBMS

Connection pooling and the one-process design go hand-in-hand. It is

difficult to share resources among processes on a computer and

therefore without a one-process design, it would be tough for AOLserver

to pool database connections. The practical consequence of scripts

running inside AOLserver and sharing database connections is a factor of

100 speed-up over CGI.

Connection pooling and the one-process design go hand-in-hand. It is

difficult to share resources among processes on a computer and

therefore without a one-process design, it would be tough for AOLserver

to pool database connections. The practical consequence of scripts

running inside AOLserver and sharing database connections is a factor of

100 speed-up over CGI.

AOLserver's ns_db abstraction layer means that Web scripts

don't have to contain the database username or password. This makes

your site a bit more secure than a CGI-backed site but, more

importantly, it makes it easier to develop toolkits and reuse code from

site-to-site. The abstraction layer also means that your Web scripts

won't depend on the particulars of an RDBMS vendor's C library. For

example, if you decide to move your service from Sybase to Oracle, you

won't have to change your Tcl code. Sadly, however, you probably

will

have to change some of your SQL code because Oracle and Sybase handle

dates differently. SQL is not a very standard standard.

The single log file idea sounds trivial but it is the key to debugging a

database-driven site. If you're not happy with what is in the database,

you can look through the AOLserver error log and see exactly what

AOLserver told the database to do, in sequence.

How Does AOLserver Actually Talk To The Database?

How can AOLserver talk to so many database management systems? Don't

they all have different client libraries? Yes. The core AOLserver

doesn't understand how to talk to any database management systems.

It understands how to talk to an AOLserver database driver, typically a

500-line C program that bridges the AOLserver C API and the DBMS

vendor's C library.

Currently available database drivers include ones for Informix Universal

Server, Interbase, Oracle, PostgreSQL, Solid, and Sybase. The Solid C

library is basically the ODBC standard and therefore the Solid driver

might well work with any RDBMS vendor's ODBC interface. Nothing in the

AOLserver architecture demands that their ns_db

abstraction/pooling layer be used with a relational database

management system. We've written experimental drivers for the PLS text

database software, for example, and the ideas are the same but you

aren't sending SQL code through the ns_db calls.

There are instructions on how to write your own database driver for

AOLserver in Appendix B of the AOLserver C Developer's

Guide, distributed from www.aolserver.com. It takes a good

C programmer two or three days to write a driver.

Why Oracle?

In an article about an open-source Web server, why give examples that

use a closed-source relational database management system? Why not use

MySQL (not free but open-source), for example? I never considered using

MySQL because it doesn't try to solve any of the problems that I'm

relying on Oracle to solve, e.g., the ACID transaction processing

guarantees:

- Atomicity

-

Results of a transaction's execution are either all committed or all

rolled back. All changes take effect, or none do. Suppose that a user is

editing a comment. A Web script tells the database to "copy the old

comment value to an audit table and update the live table with the new

text". If the hard drive fills up after the copy but before the update,

the audit table insertion will be rolled back.

-

Consistency

-

The database is transformed from one valid state to another valid

state. A transaction is legal only if it obeys user-defined integrity

constraints. Illegal transactions aren't allowed and, if an integrity

constraint can't be satisfied the transaction is rolled back. For

example, suppose that you define a rule that postings in a discussion

forum table must be tied to a valid user ID. Then you hire Joe Novice

to write some admin pages. Joe writes a delete-user page that doesn't

bother to check whether or not the deletion will result in an orphaned

discussion forum posting. Oracle will check, though, and abort any

transaction that would result in you having a discussion forum posting

by a deleted user.

-

Isolation

-

The results of a transaction are invisible to other transactions until

the transaction is complete. For example, suppose you have a page to

show new users and their photographs. This page is coded in reliance on

the publisher's directive that there will be a mugshot for every user

and will present a broken image if there is not. Jane Newuser is

registering at your site at the same time that Bill Olduser is viewing

the new user page. The script processing Jane's registration does

inserts into several tables:

users, mugshots,

users_demographics. This may take some time if Jane's mugshot is

large. If Bill's query starts before Jane's transaction commits, Bill

won't see Jane at all on his new-users page, even if Jane's insertion

into some of the tables is complete.

-

Durability

-

Once committed (completed), the results of a transaction are permanent

and survive future system and media failures. Suppose your ecommerce

system inserts an order from a customer into a database table and then

instructs CyberCash to bill the customer $500. A millisecond later,

before your server has heard back from CyberCash, someone trips over

the machine's power cord. Oracle will not have forgotten about the new

order. Furthermore, if a programmer spills coffee into a disk

drive, it will be possible to install a new disk and recover the

transactions up to the coffee spill, showing that you tried to bill

someone for $500 and still aren't sure what happened over at CyberCash.

An imaginative marketing person might call a computer program a

relational database management system even if it did not provide the

ACID guarantees. However, all commercial RDBMSes provide these

guarantees and therefore asking someone to compare MySQL to Oracle is

almost as absurd as asking for a comparison between Emacs and Oracle.

The open-source purist's only realistic choice for an RDBMS is

PostgreSQL, available from www.postgresql.org. In some ways,

PostgreSQL has more advanced features than any commercial RDBMS. Most

important, the loosely organized unpaid developers of PostgreSQL were

able to convert to an Oracle-style multi-version concurrency system (see

below), leaving all the rest of the commercial competition deadlocked in

the dust. If you've decided to accept John Ousterhout as your personal

savoir, you'll be delighted to learn that you run Tcl procedures inside

the database. If your business can't live without commercial support

for your RDBMS, you can buy it from www.pgsql.com. As of November 1999,

however, there were a few flies in the PostgreSQL ointment, most

glaringly lack of OUTER JOIN and no enforcement of referential integrity

constraints (i.e., PostgreSQL can't be said to do the "C" in ACID).

If you're not going to be an open-source purist, the natural choice is

to use what everyone else uses: Oracle. What's good about Oracle?

- Data Integrity

- Concurrency

- Java and PL/SQL inside the server

To judge by the tales of corrupted database woe from shoestring service

operators, data integrity isn't something one can take for granted with

off-brand RDBMSes. By contrast, I've been running Oracle for five years

and have done some very unfriendly things to it, including filling up

tablespaces and disk drives, power-cycling running Oracle servers, etc.

Yet I've never had a problem starting Oracle right back up and getting

access to all of my data through the last committed transaction.

Sometimes the great herd of MBAs is right!

Concurrency is Oracle's strongest suit relative to its commercial

competitors. In Oracle, readers never wait for writers and writers

never wait for readers. Suppose the publisher at a large site starts a

query at 12:00 PM summarizing usage by user. Oracle might have to spend

an hour sifting through 200 GB of tracking data. The disk drives grind

and one CPU is completely used up until 1:30 PM. Further suppose that

User #356712 comes in at 12:30 PM and changes his email address, thus

updating a row in the users table. If the usage tracking

query arrives at this row at 12:45 PM, Oracle will notice that the row

was last modified after the query started. Under the "I" in ACID,

Oracle is required to isolate the publisher from the user's update.

Oracle does this by reaching into the rollback segment and producing

data from user row #356712 as it was at 12:00 PM when the query

started. Here's the same scenario in a table:

| Time

| Publisher

| Public Web Service

|

| 12:00 PM

| Starts a 90-minute query summarizing usage for preceding

year

| --

|

| 12:30 PM

| usage summary continues to chug away

| User #356712 updates email address from "joe@foobar.com"

to "joe@yahoo.com"

|

| 12:45 PM

| usage summary arrives at User #356712; Oracle reaches

into rollback segment and pulls out "joe@foobar.com" for the report,

since that's what the value was at 12:00 PM when the query started

| --

|

| 1:30 PM

| usage summary report completes

| --

|

How would this play out in another RDBMS, for example Microsoft SQL

Server? The majority of database management systems take the sophomore

computer science student approach to concurrency control. When you're

reading, you take read locks on the information that you're about to

read. Nobody can write until you release them. When you're writing,

you take write locks on the information that you're about to update.

Nobody can read or write until you release the locks. In the preceding

example, User #356712 would submit his request for the address change at

12:30 PM. The thread on the Web server would be blocked waiting for the

read locks to clear. How long would it wait? A full hour with a

spinning Netscape icon. Sound like any of the Web sites you've visited?

The reason that RDBMSes appear to be competitive is that in transaction

processing benchmarks all the transactions are brief and can be handled

in less than one second. Thus every RDBMS vendor can publish impressive

reports of tens of thousands of transactions processed per minute. The

bottom line in the real world is that Oracle can support vastly more

users than competitors due to its unique multi-version approach to

implementing concurrency.

Another important thing about Oracle is that you can run procedural

programs inside the RDBMS server. For example, suppose that you want to

look for all rows in a billion-row table that satisfy a particular

regular expression. Working through one billion rows isn't going to be

fast, no matter what. The problem takes on terrifying dimensions when

you realize that there is no REGEXP operator in standard SQL. You're

going to have to pull all billion rows across the network into AOLserver

Tcl and then discard the ones that don't satisfy a Tcl REGEXP. You can

relax if you're running Oracle 8i, actually. Because the RDBMS includes

built-in Java support, you can write a little Java program to look

through the database locally.

AOLserver Tcl is the best tool for Web programming 99% of the time. But

occasionally you need a procedural language with rich data types. For

example, if you have to parse XML or email headers, it is easy to load

up a standard Java parser into Oracle and shuffle stuff around within

Oracle. In addition to Java, Oracle includes support for the vaguely

ADA-inspired language PL/SQL. In many ways, database programming in

PL/SQL is more concise and convenient than in Java.

Life with Oracle is pretty sweet once you get through the horror of

installation and administration. But would it not be sweeter yet to run

an open-source RDBMS? Probably, but make sure that data integrity,

concurrency, memory leaks, security, and operating system issues are

solidly under control first. We should not be deceived by the

success of open-source operating systems into thinking that an

open-source RDBMS must necessarily be superior. Linux is great but keep

in mind that the OS problem is a very old one, adequately solved by the

late 1960s. Remember further that dozens of brilliant programmers have

labored for more than a decade to bring us Linux. The RDBMS problem

wasn't really solved well by commercial vendors until around 1990 and

few programmers have worked on open-source RDBMS. The success of Linux

should be inspiration for a future assault on the RDBMS problem, not a

means of deluding ourselves into thinking that open-source software is

always better (or even adequate).

The Basics

Here are some examples of db-backed Web site scripts in the AOLserver

Tcl API. For concreteness, we use the Oracle RDBMS but all of the

examples would work without significant modification in any other SQL

database.

The Basics: Reading one row at a time

Let's start by looking at code to get one row of information from

Oracle. Consider the hypothetical page get-user-info.tcl:

# ask AOLserver to give us a database connection from the default pool

set db [ns_db gethandle]

set sql_query "select first_names, last_name

from users

where user_id = $user_id"

# actually send the query to the RDBMS; tell AOLserver we

# expect exactly one row back

set selection [ns_db 1row $db $sql_query]

At this point in the script, the values returned from the database are

in an ns_set data structure, stored in the Tcl variable

$selection. The AOLserver ns_set is a data structure for

storing key-value pairs so that they are accessible to both C and Tcl

procedures. Right now, $selection will contain values for

two keys: first_names and last_name. Here's

how a Tcl program can get them out:

set first_names [ns_set get $selection "first_names"]

set last_name [ns_set get $selection "last_name"]

ns_return 200 text/plain "User #$user_id is named $first_names $last_name"

This program isn't robust to missing data in the RDBMS. Suppose someone

bookmarks this page in June, with an embedded user_id of 37. User #37

is deleted from the database in September and the bookmarked page is

reloaded in December. Oracle won't return any rows in response to the

page query and AOLserver will throw an error rather than return from

ns_db 1row. The fix? 0or1row:

# ask AOLserver to give us a database connection from the default pool

set db [ns_db gethandle]

set sql_query "select first_names, last_name

from users

where user_id = $user_id"

# actually send the query to the RDBMS; tell AOLserver we

# expect at most one rows back

set selection [ns_db 0or1row $db $sql_query]

if { $selection == "" } {

ns_return 200 text/plain "User #$user_id not found"

} else {

# we found a row in the RDBMS

set first_names [ns_set get $selection "first_names"]

set last_name [ns_set get $selection "last_name"]

ns_return 200 text/plain "User #$user_id is named $first_names $last_name"

}

Among our circle of programmers, we got tired of some of this typing

and programmed ourselves some shortcuts, which we distribute from

http://www.photo.net/wtr/thebook/utilities.txt.

With these, you can save yourself some repetitive typing and also

produce terser code where it will be easier to spot errors. Here are

some examples:

# with database_to_tcl_string we can fetch one value from the database

# and stuff it immediately into a variable

set sql_query "select first_names || ' ' || last_name

from users

where user_id = $user_id"

set user_name [database_to_tcl_string $db $sql_query]

# or we could have used set_variables_after_query to save a little

# typing when setting two

set sql_query "select first_names, last_name

from users

where user_id = $user_id"

set selection [ns_db 1row $db $sql_query]

# now we can set first_names and last_name in our local

# environment merely by calling set_variables_after_query,

# which comes up into its calling environment and looks

# at the ns_set stored in $selection

set_variables_after_query

ns_return 200 text/plain "User #$user_id is named $first_names $last_name"

The Basics: Reading a set of rows

You've decided that your script ought to display more than the user's

name. You want to allow users to assess each other's credibility by

looking at past discussion forum postings. The user info page needs to

grab at least the subject lines for this user:

# ask AOLserver to give us a database connection from the default pool

set db [ns_db gethandle]

# let's do an ns_db select to open a multi-row cursor

set selection [ns_db select $db "select one_line

from bboard

where user_id = $user_id

order by posting_time"]

# loop around and fetch the rows

while { [ns_db getrow $db $selection] } {

set one_line [ns_set get $selection "one_line"]

append bboard_contributions "<li> $one_line\n"

}

If you're familiar with relational database programming in another

language, you'll notice that AOLserver Tcl has exactly the same

semantics: open a cursor, fetch each row in succession. This similarity

isn't an accident; all the database vendors' C libraries have the same

semantics.

What if you don't want every row from the database? Maybe the query

user is extremely loquacious. The 75 most recent messages ought to

suffice to give a fellow community member a good idea of what to expect:

# ask AOLserver to give us a database connection from the default pool

set db [ns_db gethandle]

# let's do an ns_db select to open a multi-row cursor

# this time let's ask for the rows sorted by newest first

set selection [ns_db select $db "select one_line

from bboard

where user_id = $user_id

order by posting_time desc"]

set counter 0

# loop around and fetch the rows

while { [ns_db getrow $db $selection] } {

incr counter

if { $counter > 75 } {

# we've already collected up 75 postings, let's close the cursor

ns_db flush $db

break

}

set one_line [ns_set get $selection "one_line"]

append bboard_contributions "<li> $one_line\n"

}

Note: If you're running Oracle 8.1 or later, you can simplify your

application code and limit results to the first 75 with the following

query:

select *

from (select posting_time, one_line

from bboard

where user_id = $user_id

order by posting_time desc)

where rownum <= 75

|

More Advanced Database Programming

Advanced DB: Transactions

By default, we've configured our Oracle driver so that Oracle is in

autocommit mode. Each SQL statement executed by a Tcl script runs as

a transaction, but the statements aren't tied together. Consider the

following script to delete an ad in the ArsDigita Community System

classifieds module:

set_the_usual_form_variables

# classified_ad_id

set db [ns_db gethandle]

# copy the ad to an audit table

ns_db dml $db "insert into classified_ads_audit (...)

select ... from classified_ads

where classified_ad_id = $classified_ad_id"

# delete any bids from the auction system

ns_db dml $db "delete from classified_auction_bids

where classified_ad_id = $classified_ad_id"

# now delete the ad itself

ns_db dml $db "delete from classified_ads

where classified_ad_id = $classified_ad_id"

Suppose someone trips over the server power cord when Oracle is

halfway through processing the delete from

classified_auction_bids command in this script. Each SQL

statement issued from AOLserver automatically executes as a

transaction. If Oracle is unable to commit, the transaction will be

rolled back and none of the bids will have been touched. However,

we'll have an extra row in the classified_ads_audit table

because the deletion of the ad never in fact happened. The solution?

Tell the Oracle driver to go back into "commit when I say" mode:

set_the_usual_form_variables

# classified_ad_id

set db [ns_db gethandle]

ns_db dml $db "begin transaction"

# copy the ad to an audit table

ns_db dml $db "insert into classified_ads_audit (...)

select ... from classified_ads

where classified_ad_id = $classified_ad_id"

# delete any bids from the auction system

ns_db dml $db "delete from classified_auction_bids

where classified_ad_id = $classified_ad_id"

# now delete the ad itself

ns_db dml $db "delete from classified_ads

where classified_ad_id = $classified_ad_id"

ns_db dml $db "end transaction"

Note that you won't find begin transaction in any Oracle

manual. It is simply an instruction to the driver to turn off

autocommit mode. The end transaction tells the driver

to commit the work done so far and go back into autocommit mode.

Need to abort a transaction due to an error discovered in Tcl? Use

ns_db dml $db "abort transaction"

Advanced DB: Keeping a thread alive to log

The ArsDigita Community System contains a banner ad server. After a

user clicks on a banner, the script /adserver/adhref.tcl is run. The

idea behind this script is to figure out as quickly as possible what

the target URL for the ad ought to be, redirect the user there, then

continue to execute the program to update the log. Here is a

simplified version of the script, without the exception handling code:

set_the_usual_form_variables

# adv_key

set db [ns_db gethandle]

# figure out where the banner is supposed to lead

# (on a high-volume site this could be cached with Memoize)

# note that instead of using $adv_key, we use $QQadv_key, a version

# created by set_the_usual_form_variables where any apostrophes are

# escaped so that the whole variable value will be legal as an SQL

# string literal

set target_url [database_to_tcl_string $db "select target_url

from advs

where adv_key = '$QQadv_key'"]

# tell the user's browser to visit the advertiser's site

ns_returnredirect $target_url

# close the connection to the client

ns_conn close

# we've returned to the user but let's keep this thread alive to log

# we keep one row per ad PER DAY to count clickthroughs

set update_sql "update adv_log

set click_count = click_count + 1

where adv_key = '$QQadv_key'

and entry_date = trunc(sysdate)"

ns_db dml $db $update_sql

# ask the Oracle driver how many rows were actually affected by the

# last update

set n_rows [ns_ora resultrows $db]

if { $n_rows == 0 } {

# there wasn't already a row there; this is the first

# clickthrough of the day; insert a row

ns_db dml $db "insert into adv_log

(adv_key, entry_date, click_count)

values

('$QQadv_key', trunc(sysdate), 1)"

}

# see if the publisher has configured per-user logging

# (useful if you want to ask "of the users who read at

# least one article on stain-removal, how many clicked

# on the Tide banner ad")

if [ad_parameter DetailedPerUserLoggingP adserver 0] {

set user_id [ad_get_user_id]

if { $user_id != 0 } {

# we know who this user is

ns_db dml $db "insert into adv_user_map

(user_id, adv_key, event_time, event_type)

values

($user_id, '$QQadv_key', sysdate, 'c')"

}

}

The high-level message to take away from this example: If you want to do

something for your own benefit, like log, don't do it on the user's

time! Return all the bytes that you can to the user's browser, close

the connection, and use the remaining life of the thread to accomplish

the publisher's goals.

Performance

Any threaded Web server like AOLserver will be extremely fast at serving

static files. If you visit http://www.specbench.org/ where

manufacturers brag about how great their computers are, you won't see

any benchmarks submitted with a process-pool server like Apache. How

relevant are these numbers to publishers of dynamic Web services? Not

very. You're going to be more interested in how quickly can a script

start running and, once running, how quickly can it get a connection to

an RDBMS. Architecturally, AOLserver is state-of-the-art in these

regards, with scripts that run inside its own process and database

connection pooling.

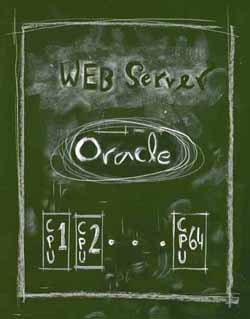

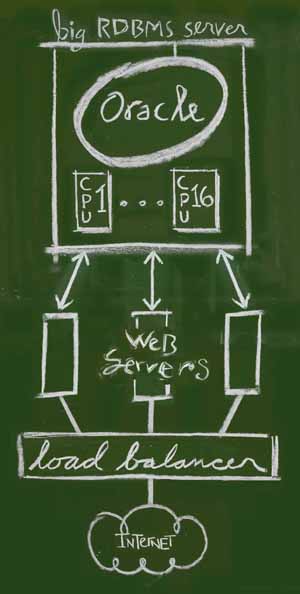

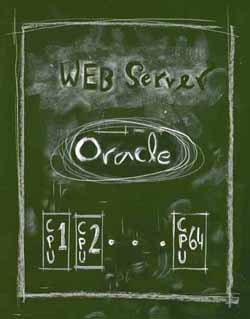

Your situation will boil down to one of the following:

- You have one CPU that you want to use for Web service. ("One small

box")

- You have many CPUs within the same computer that you want to use for

Web service. ("One big box")

- You have many CPUs spread across multiple physical computers that

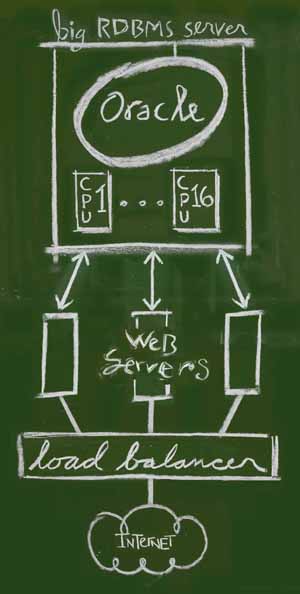

you want to use for Web service. ("Many small boxes")

|

In the "one small box with one CPU" situation, you only have to tune

your operating system kernel parameters to allow one process to keep

many files open simultaneously. Remember that if one AOLserver process

is delivering 1000 files simultaneously to 1000 users, it will need to

keep 1000 files open (the default limit with many OSes is 64 or 256).

|

|

In the "one big box with many CPUs" situation, you first have to decide

whether you want to let one AOLserver process range among the

CPUs. For example, the 4-CPU machine that sits behind http://www.photo.net/

is running an Oracle database and more than 20 AOLservers, each of which

supports a Web service. It might be a good thing that a single

AOLserver can't consume more than 25% of the machine's resources. On

the other hand, if the RDBMS is on a separate computer and the multi-CPU

machine is dedicated to one site, it is important to get all the

horsepower behind one AOLserver process. For this, you need an

operating system with kernel threads, HP-UX 11.0, Solaris, or Windows NT

for example.

|

|

In the "many small boxes" situation, you need some way of sharing the

workload so that your service doesn't depend on them all being up and

running simultaneously. You can get a load-balancing router that will

give all of your servers the same IP address. When a request for a new

TCP connection to your Web service comes in, the router hands it to

whichever physical machine is least busy. Companies that make these

load balancers include Alteon, Cisco, and Foundry Networks

(http://www.alteon.com, http://www.cisco.com, and

http://www.foundrynet.com). If you don't want to invest in learning

about how to run these little boxes, another approach to spreading the

load is to run a standard Unix machine as your load balancer. On this

computer you run a Web server whose only job is to proxy requests back

to a server farm. Because the load balancing server isn't doing

anything except shuffling bytes from one Ethernet card to another, you

ought to be able to handle about 400 requests per second per CPU via

this method. AOLserver 3.0 has 95% of what you need to implement this,

via its nsvhr virtual host redirection module. For a look at the

feature list of a complete implementation, read the clustering support

section of the Zeus Web server literature at

http://www.zeus.co.uk.

|

An astute reader will note that I didn't address the issue of what

happens when the database server runs out of power. This is partly

because modern SMP Unix boxes are tremendously powerful. The largest

individual HP and Sun machines are probably big enough for any current

Web service. The other reason that I didn't address the issue of yoking

many computers together to run an RDBMS is that it is tremendously

difficult, complex, and perilous. The key required elements are

software from the database vendor (e.g., Oracle Parallel Server), a disk

subsystem that can be addressed by multiple computers (typically an EMC

disk array), an expert team of administrators, and a few $million to pay for it all.

Reliability

Construed narrowly, AOLserver is extremely reliable. You could start up

the server from a Unix shell, walk away, and come back three months

later to find that 100 million static files had been served

successfully. Impressive? Not to the user who can't reach a dynamic

Web service built with AOLserver.

To have a reliable Web service, you need to address every possible

source of unreliability. First, admit to yourself that, as much fun as

it might be to blame tools, it is your own code that is going to cause

the user the most trouble. Accept the reality that

Web services are usually built with loose specs, to tight deadlines, by

people who aren't the world's best educated or most experienced

programmers. Once online, Web applications are subject to severe

stresses. For example, operations may get aborted due to network

failure. The consequence? If your transaction processing house isn't in

order, your database will become corrupt. You might get dozens of

simultaneous users in a second. The consequence? If your concurrency

control house isn't in order, your database will become corrupt.

A workable starting point is

- leave the hard stuff of concurrency control and transaction

atomicity to a standard relational database management system (RDBMS)

- develop pages in a safe interpreted language; you don't want a

programmer to be able to break more than one page at a time

- apply version control to the site so that it progresses through dev

and staging servers before going into production and so that you can

always revert to a known and tested version if need be

(see http://www.photo.net/wtr/cvs.html)

Given that you've made these decisions, you end up having to watch the

following layers

- operating system

- RDBMS

- AOLserver

- scripts coded in the AOLserver Tcl API

Any Unix variant tends to be extremely reliable and hence monitoring the

operating system layer is mostly a question of making sure that disk

partitions don't fill up. The RDBMS layer is also generally very

reliable unless a disk drive or tablespace fills up. So again the most

critical monitor to have is one that will alert you when a tablespace or

disk is filling up rapidly. Another good practice is to perform a

nightly export of the entire RDBMS into a Unix file. Not only can this

serve as your database backup, if written to tape, but the act of

completely exporting will expose any corrupt internal data structures.

Reliability: the AOLserver layer

Although AOLserver is extremely reliable, don't rely on it

being reliable. Engineer services so that the Web server could crash

every 10 minutes and users need never be aware. Here are the elements

of a Web service that does not depend on a Web server program being

reliable:

- keep user session state either in cookies on the user's browser or

in the RDBMS on the server; do not keep session state (except perhaps

for cached passwords) in the server

- run the Web server program from /etc/inittab so that if it crashes,

init will immediately restart it. Here's an example line from

/etc/inittab:

nsp:34:respawn:/home/nsadmin/bin/nsd -i -c /home/nsadmin/philg.ini

This tells init that, when it is in run state 3 or 4 (i.e., up and

running), to run the NaviServer daemon (nsd) in interactive mode, with

the config file /home/nsadmin/philg.ini.

- in the event that AOLserver gets stuck or clogged with threads

waiting for a system resource, run ArsDigita

Keepalive on the same machine to make sure that the public server is

delivering files or scripts. If it isn't, Keepalive will kill a hung

AOLserver and notify the sysadmins.

Note that this same set of techniques and open-source software would

work with any other Web server program, especially easily with a

threaded Web server that only requires one Unix process.

How could an AOLserver get clogged with threads and need to be killed?

Easily. Suppose Siegfried in Stuttgart is paying 10 cents/minute for

local phone service to connect his 56K modem. He likes the way that

your home page looks but he doesn't want to read it while paying the

phone company's rates. He instructs his desktop Web robot to "grab all

the pages pointed to by this page recursively, but only if they are on

the same server." With the average corporate site, this would be 30 or

40 pages that could all be downloaded within a few minutes. On a site

like http://www.photo.net/photo/, however, Siegfried's robot is embarking on

a 10 GB download project (500 hours at 56K). It would be fine for

AOLserver except that some of these robots seem to aim for breadth-first

searching. They'll request as many files as possible at the same time.

I've configured the http://www.photo.net/photo/ AOLserver to only spawn 120

threads. At various times I've found European robots requesting 120

large photos at the same time, all going to the same IP address, all

stacking up to get through a 28.8 or 56K modem. In this situation

Keepalive would restart the server and the administrator would get

email. The administrator would probably figure out the situation and

block the robot's IP address via the permissions system.

Another denial-of-service scenario occurs when all the database

connections are behind tied up by users on slow Internet connections.

Here's one way of architecting a Web script:

- get a database connection from the pool

- query to see if this user is authorized to view the requested info

ns_write headers and as many page body bytes as possible to the

client so that the user isn't staring at a blank screen

- query the database to get the meat of the page

ns_write bytes to the connection row-by-row as we get them from the

RDBMS

ns_write the page footer

- let AOLserver scavenge the database connection when it terminates

the thread

This is a good system for users on fast Internet connections. They get

as much of information as soon as they possibly can. It is inefficient

from a network point of view because each line is sent as a separate TCP

packet, i.e., every time ns_write is called the stream is

flushed. For a page displaying hundreds or thousands of rows, the

elapsed time until the entire page is loaded will probably be larger

than if the entire page were accumulated in a string and then

ns_return'd.

Much more problematic than this performance issue is the user on a slow

connection who can't accept all the packets as fast as AOLserver can

send them. Once this user has been sent a certain number of

unacknowledged packets, ns_write will wait for awhile.

During this interval, the script is holding onto a database connection.

If you've got eight database connections configured, enough for a

million-hit-per-day site, it only takes eight users on slow links viewing

a page like this to make every other user of the system wait! The

server will still be handling requests for static files quickly, but any

request that requires the database will leave the user staring at a

spinning browser icon.

The solution to this problem is to build pages with the following

structure:

- get a database connection from the pool

- query to see if this user is authorized to view the requested info

- query the database to get the meat of the page

- accumulate the bytes of the page in a Tcl variable, e.g.,

append whole_page $one_more_row_from_the_database

- explicitly release the database connection via

ns_db

releasehandle

- call

ns_return to send the entire page back to the user

If you've got 25 users on slow links, you'll find 25 threads stacking up

within AOLserver to serve those users. However, none of these threads

will be holding down one of the eight database connections in the pool.

Reliability: scripts written in the AOLserver Tcl API

Before launch, version control and testing on a staging server are the

best ways to ensure the reliability of scripts that you've written to

support your service. A reasonable architecture for a database-backed

Web service is the following:

- three Web servers (can be on one physical computer)

- two RDBMS users/tablespaces, one for dev/staging and one for

production

- one Concurrent Versions System (CVS) root

- two people trained to understand CVS

Here's how the three servers are used:

- developers work continuously in /web/foobar-dev/

- when the publisher is mostly happy with the development site, a

named version is created and installed at /web/foobar-staging

- the testers bang away at the /web/foobar-staging server

- when the testers and publishers sign off on the staging server's

performance, the site is released to /web/foobar/ (production)

- any fixes made to the staging server are merged back into the

development server

With this system, documented at http://www.photo.net/wtr/cvs.html,

in place, anything on the production site will be insulated from

developer mistakes.

What about bugs that manage to escape notice on both the dev and staging

servers? After launching a new Web service, it is common practice to

check the error logs every hour or so to make sure that users aren't

encountering bugs in the scripts. It is also common practice to look at

a server log analyzer's report showing which URLs generated server

errors during the preceding day.

There are a couple of problems with these common practices. First,

users and the publisher might not be too thrilled about server errors

piling up for 24 hours before anyone notices. Second, server errors

that occur infrequently are likely to go unnoticed forever.

What you really need to do is run a program every 15 minutes that checks

the server error log. If it finds any new errors, it emails them to set

of programmers and sysadmins. You probably can't rely on humans to be

vigilant and responsive, but with a vigilant computer program and

responsive humans the users will be reasonably well served. Here's an

actual sequence of events from an ecommerce site that we built:

- 11:30 am: user encounters bug in a help page, outside of the

standard order pipeline that we always tested ad nauseum.

- 11:37 am: our Watchdog server (free, open-source, available from http://arsdigita.com/free-tools/watchdog.html)

notices the bug in the error log and sends email to

eveander@arsdigita.com (among others).

- 11:44 am: Eve Andersson has fixed the bug on the live site and

checked the change back into the development tree.

- 11:45 am: Eve Andersson determines the user's identity from the

logs and sends him an email note apologizing for the error and inviting

him to try the page again.

Reliability: putting it all together

In the early days of the web, the same person was generally system

administrator, database administrator, author, designer, editor, and

publisher. In 1999, it is common for 40 or 50 people to be involved in

the production of a service and for those people to be spread out across

the country or the world. Moreover, cooperation is often needed at

obscure hours. The natural solution to this problem is an online

community!

If you've built a Web site called "foobar.com", why not build a

community for the folks behind it at "staff.foobar.com"? Run this on a

separate computer in a separate network so that when the main server is

unreachable, a person need only connect to the staff site to find out

where the computer is, who the network administrators are, what the

serial number of the computer running the site is, how to reach the

system administrators, etc.

For a comprehensive look at all reliability and monitoring issues, with

links to free open-source packages, see

http://www.photo.net/wtr/arsdigita-server-architecture.html.

Conclusion

AOLserver is a great tool, but it isn't a solution. Don't

spend too much time and energy thinking about something that the

end-user can't see. For me, the best thing that I can say about

AOLserver is that it works well enough to not require any of my time.

Most of my time and energy goes into the ArsDigita Community System

toolkit, which aims to be a solution for a broad class of people who

wish to operate online communities, intranets, and ecommerce sites. The

remaining time and energy goes into building specific Web sites, such as

photo.net.

Postscript

After I'd written this article, one of my students brought to my

attention the fact that some Web developers in California, often talked

about in the press, had discovered the virtues of AOLserver:

telnet webcenters.netscape.com 80

Trying...

Connected to webcenters.netscape.com.

Escape character is '^]'.

HEAD / HTTP/1.0

HTTP/1.0 302 Found

Location: http://home.netscape.com

MIME-Version: 1.0

Date: Sat, 30 Oct 1999 03:23:06 GMT

Server: NaviServer/2.0 AOLserver/2.3.3

Content-Type: text/html

Content-Length: 319

More

philg@mit.edu

Add a comment | Add a link

Connection pooling and the one-process design go hand-in-hand. It is

difficult to share resources among processes on a computer and

therefore without a one-process design, it would be tough for AOLserver

to pool database connections. The practical consequence of scripts

running inside AOLserver and sharing database connections is a factor of

100 speed-up over CGI.

Connection pooling and the one-process design go hand-in-hand. It is

difficult to share resources among processes on a computer and

therefore without a one-process design, it would be tough for AOLserver

to pool database connections. The practical consequence of scripts

running inside AOLserver and sharing database connections is a factor of

100 speed-up over CGI.