As noted in "ArsDigita

Server Architecture", for 99% of Web publishers, the best physical

server infrastructure is the simplest to administer: one computer

running the RDBMS and the Web server.

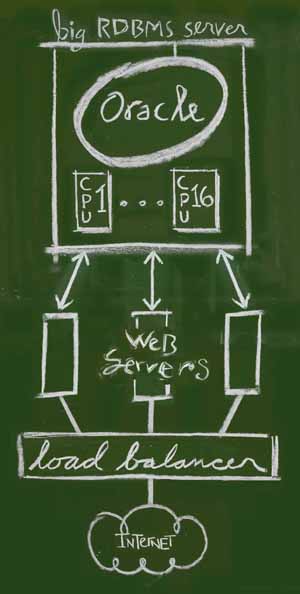

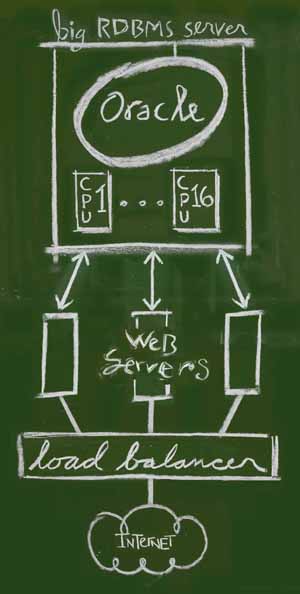

Suppose that you are forced by circumstances to adopt the larger and

more complex architecture detailed in the preceding article. You will

have one big RDBMS server and many small Web servers. This document

describes the best ways to manage a cluster of machines arranged

according to the following diagram:

Here are our objectives:

- simplicity in managing Web content and scripts; it would be nice to

cvs update or mv Web pages in one place,

without having to obtain special training

- user-uploaded files that are stored in the file system must be

written to a common directory on the big RDBMS server box

- if we wish to take the Web service down for maintenance, we can

quickly bring up a comebacklater server and then bring it back down

again, without having some machines in the farm serving the real site

and some serving "come back later" pages.

Put Web content and scripts on the big server; export via NFS

The solution to Objective 1, central simple management of Web content,

is to put the Web server root on the big RDBMS server. This seems

counterintuitive because the big server isn't running any Web server at

all. However, it makes sense because

- the Web site is dead if the big server is dead and live if the big

server is live; keeping the canonical Web content on the RDBMS server

does not introduce extra points of failure

- the big server has lots of I/O bandwidth

How to get the canonical content from the big server out to the actual

Web servers? Export via NFS. This is how cnn.com works (170 million

hits per day). They use NFS on Solaris, which has the property that

frequently accessed files are cached in RAM on the NFS client. I.e.,

after the first request for a given piece of Web content, there is no

additional network overhead for subsequent requests. If you're not

running Solaris, make sure that your Unix's NFS works this way. If not,

you might need to install an alternative distributed file system such as

AFS (Andrew File System, developed at Carnegie Mellon and used by MIT's

Project Athena).

To summarize: the big RDBMS server is also an NFS server; each

individual Web server is an NFS client with read-only access.
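
A minimal sketch of how one might confirm that arrangement on a Web server box. It assumes a Linux-style mount table (the text format of /proc/mounts), which is an assumption and not something this article specifies. The path /web/service-name is the content directory named later in this article, while `parse_mount_table`, `check_nfs_mount`, and the host name "bigserver" are hypothetical.

```python
from typing import Dict, List, NamedTuple


class Mount(NamedTuple):
    source: str          # e.g. "bigserver:/web/service-name" (hypothetical host)
    mount_point: str
    fs_type: str
    options: List[str]


def parse_mount_table(text: str) -> Dict[str, Mount]:
    """Parse /proc/mounts-style lines into a dict keyed by mount point."""
    mounts: Dict[str, Mount] = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 4:
            continue  # skip blank or malformed lines
        source, mount_point, fs_type, options = fields[:4]
        mounts[mount_point] = Mount(source, mount_point, fs_type, options.split(","))
    return mounts


def check_nfs_mount(mounts: Dict[str, Mount], path: str, required_option: str) -> List[str]:
    """Return a list of problems; an empty list means the mount looks right."""
    mount = mounts.get(path)
    if mount is None:
        return [f"{path} is not mounted"]
    problems = []
    if not mount.fs_type.startswith("nfs"):
        problems.append(f"{path} is {mount.fs_type}, not NFS")
    if required_option not in mount.options:
        problems.append(f"{path} lacks the '{required_option}' option")
    return problems


# Sample mount-table text standing in for the real file on a Web server box.
SAMPLE = "bigserver:/web/service-name /web/service-name nfs ro,hard,intr 0 0\n"
table = parse_mount_table(SAMPLE)
print(check_nfs_mount(table, "/web/service-name", "ro"))
```

On a real machine one would pass in the contents of the mount table instead of `SAMPLE` and run the check from whatever monitoring is already in place.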

Put user-uploaded files in an NFS-exported directory on the big server

When a user uploads a file and it isn't put into an RDBMS large object

column, you have to make sure that it goes into a directory accessible

to all the Web servers. For security reasons, select a directory that

is not under the Web server root. You don't want the user

uploading "crack-the-system.pl" and then requesting

http://yourdomain.com/uploaded-files/crack-the-system.pl to make your

server run this code as a CGI script. However, like the Web content,

this directory must be NFS-exported. Unlike the Web content, this

directory must be writable by the NFS clients.
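
The two rules above (keep uploads outside the Web server root, and never let the uploaded name choose where the file lands) can be sketched as follows. This is an illustration under assumptions, not the ArsDigita code: the function names are hypothetical, the paths are the ones used later in this article, and POSIX-style paths are assumed.

```python
import os
from pathlib import Path


def is_outside(directory: Path, root: Path) -> bool:
    """True if `directory` is neither `root` itself nor anywhere beneath it."""
    directory, root = directory.resolve(), root.resolve()
    return directory != root and root not in directory.parents


def upload_destination(upload_dir: Path, web_root: Path, client_filename: str) -> Path:
    """Return the path where an uploaded file should be written."""
    if not is_outside(upload_dir, web_root):
        raise ValueError(f"{upload_dir} is under the Web server root {web_root}")
    # Keep only the final path component so a name like "../../x" cannot
    # escape upload_dir. Backslashes are normalized first so that
    # Windows-style client paths are treated the same way.
    safe_name = os.path.basename(client_filename.replace("\\", "/"))
    if safe_name in ("", ".", ".."):
        raise ValueError(f"unusable filename: {client_filename!r}")
    return upload_dir / safe_name


dest = upload_destination(
    Path("/web/uploaded-files"),      # NFS-exported read-write
    Path("/web/service-name"),        # NFS-exported read-only Web server root
    "../../crack-the-system.pl",
)
print(dest)
```

Because the destination is outside the Web server root, the uploaded script can be stored and served back as data, but a request for it is never mapped to an executable CGI path.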

Maintenance Announcement Strategy

If you have one box and have to take down the Web service to upgrade

Oracle or whatever, "ArsDigita Server

Architecture" suggests the use of a "come back later" server. If

you've got 24 little Web servers, it would be a headache to go around to

each one and follow the procedure outlined in the preceding document.

Here are two solutions:

- if you're using round-robin DNS to spread users among the little

Web servers, write some scripts automating the reconfiguring from

production to comebacklater and vice versa (see http://www.infrastructures.org/cfengine/

for some potentially helpful software and ideas)

- if you're using a fancy router that gives N machines the same IP

address, temporarily reconfigure the router to map that IP address to a

permanent comebacklater server

Round-robin DNS seems to have started going out of favor in 1997, so

let's elaborate on the "fancy router" solution. Pick two of the Web server

machines and add an extra IP address to each. Keep a permanently

installed "comebacklater" server on both of those machines. When

maintenance time rolls around, pick one of these machines to serve as

the comebacklater server. Edit the message directly in the file system

of that computer and then reconfigure the router to point all traffic to

that normally-unused virtual server. Note that we've not shut down or

brought up any Web servers. Nor have we incurred any additional

hardware or rackspace expense; the permanently-running comebacklater

servers are simply taking up a few MB of RAM on two existing machines.
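
The switch described above amounts to changing one mapping: the public IP address points either at the whole production pool or at a single comebacklater address, never a mixture. A small model of that decision, assuming nothing about any particular router; the `Farm` class, `router_targets` function, and all addresses are hypothetical, and no real router API is called.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Farm:
    public_ip: str                            # the address users connect to
    production_backends: Tuple[str, ...]      # the little Web servers
    comebacklater_backends: Tuple[str, ...]   # extra IPs on two of those machines


def router_targets(farm: Farm, maintenance: bool, standby: int = 0) -> Tuple[str, ...]:
    """Return the backend addresses the router should map public_ip to.

    During maintenance every request goes to exactly one comebacklater
    server, so no machine in the farm keeps serving the real site.
    """
    if maintenance:
        return (farm.comebacklater_backends[standby],)
    return farm.production_backends


farm = Farm(
    public_ip="192.0.2.10",
    production_backends=("10.0.0.1", "10.0.0.2", "10.0.0.3"),
    comebacklater_backends=("10.0.0.101", "10.0.0.102"),
)
print(router_targets(farm, maintenance=False))
print(router_targets(farm, maintenance=True))
```

Keeping two comebacklater addresses means the `standby` index can select the second machine if the first one is itself the box being worked on.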

What is actually on the little boxes then?

Each little box contains

- complete Unix operating system

- Oracle client software

- complete AOLserver installation but without the ArsDigita

"utilities.tcl" file in the /home/nsadmin/modules/tcl/ directory; we

move this into the Web server's private Tcl library and rename it

"00-utilities.tcl" so that it loads first (this keeps us from having to

update utilities.tcl on 12 separate little computers)

- a /home/nsadmin/nsd.ini file specifying IP address and port to bind,

Oracle username, password, etc.

- (sometimes) a /web/comebacklater/ server root and corresponding .ini

file

Note that there is never any need to back up these machines onto tape.

What is actually on the big box?

- Unix

- Oracle server

- the /web/service-name/ content directory NFS-exported read-only

- a /web/uploaded-files directory NFS-exported read-write

- an AOLserver installation to be used in the event of serious

problems with the small Web server farm (i.e., we could revert to the

"one big box" configuration if necessary)

Note that this machine contains all the critical data that must be

backed up onto tape.

Text and photos Copyright 1999 Philip Greenspun.

Illustration copyright Mina Reimer

philg@mit.edu

Reader's Comments

For some reason, the folks at www.infrastructures.org no longer mirror the cfengine software referred to above. The "canonical" URL for cfengine is http://www.iu.hioslo.no/cfengine/.

-- Frank Wortner, March 29, 2000
