Moore’s law says that we should get GPUs twice as fast every two years (GPUs are inherently parallel so adding more transistors should add performance). Huang’s law says that GPUs get 3X faster every two years.

Because everything desirable in this world is being scooped up by the Bitcoin enthusiasts, my seven-year-old desktop PC makes do with a seven-year-old ASUS STRIX GTX980 graphics card. It was purchased in April 2015 for $556 from Newegg.

A similar-priced card today, exactly seven years later, should be at least 10X faster, right?
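The arithmetic behind that expectation, as a minimal sketch: it assumes each "law" compounds smoothly at the rate stated above (2X per two years for Moore, 3X per two years for Huang), which is a simplification, and treats April 2015 to April 2022 as exactly seven years.

```python
def expected_speedup(factor_per_period: float, years: float, period_years: float = 2.0) -> float:
    """Compound a fixed speedup factor over a span of years.

    factor_per_period: speedup per period (2.0 for the Moore's-law reading,
    3.0 for Huang's law); period_years: length of one period in years.
    """
    return factor_per_period ** (years / period_years)


years = 7  # April 2015 to April 2022
moore = expected_speedup(2.0, years)  # 2 ** 3.5
huang = expected_speedup(3.0, years)  # 3 ** 3.5
print(f"Moore's law: {moore:.1f}x")  # Moore's law: 11.3x
print(f"Huang's law: {huang:.1f}x")  # Huang's law: 46.8x
```

So "at least 10X" is the conservative reading; Huang's law would predict closer to 47X.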

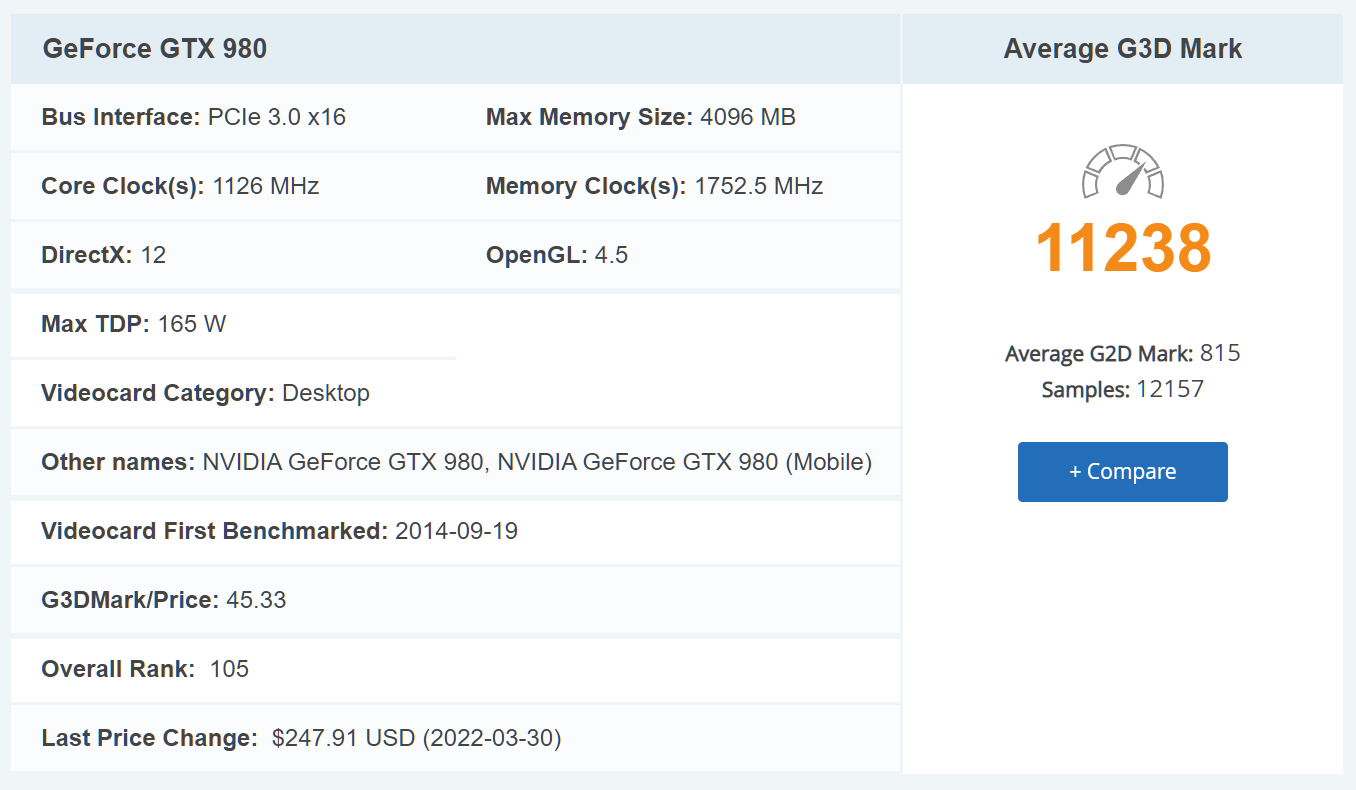

The 2015 card’s benchmark:

It can do 47 frames per second with DirectX 12.

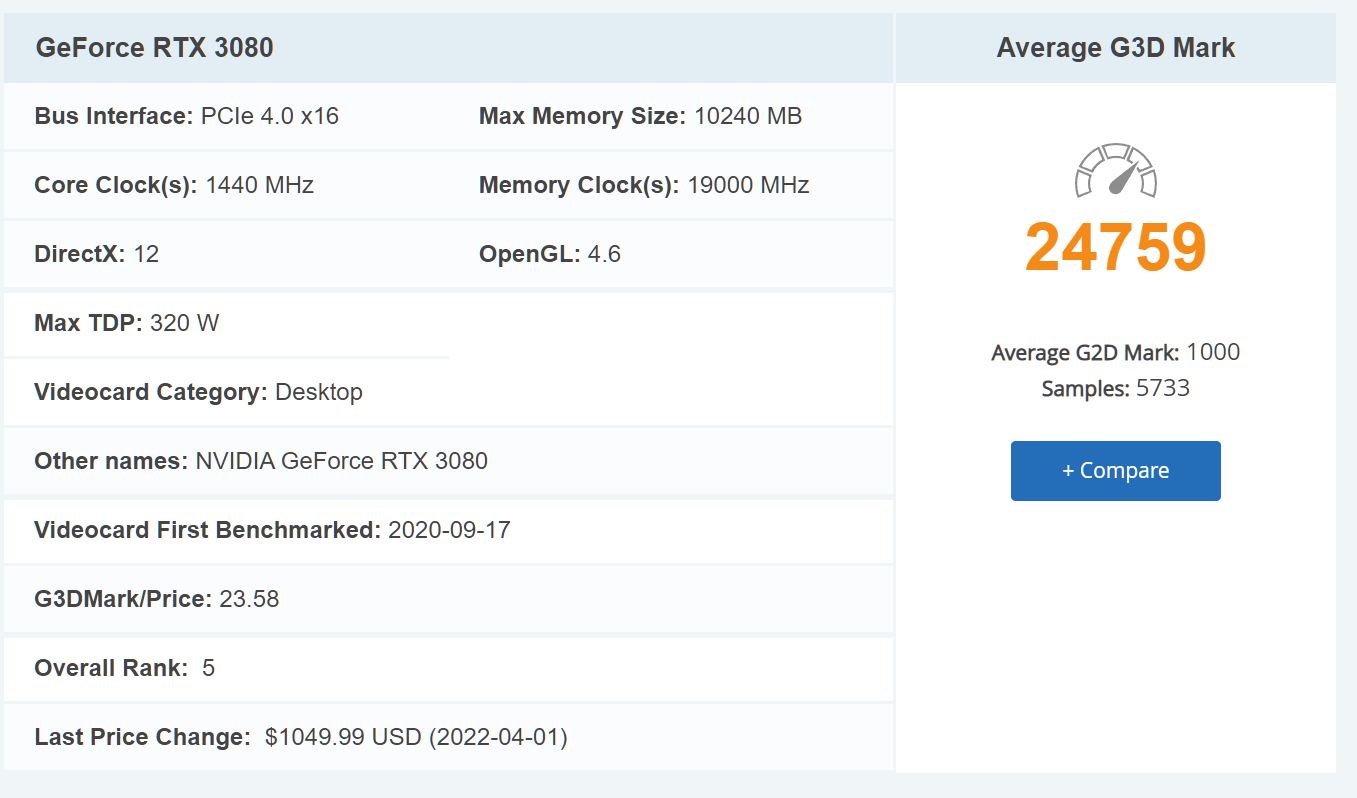

Let’s look at the $1,050 RTX 3080 for comparison. Using the same inflation rate as Palm Beach County real estate, $1,050 today is a little less than $556 in 2015 dollars.

It can do 98 frames per second with DirectX 12. Even the cards that sell for $2,000+ are only slightly faster than the RTX 3080.
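Putting the two DirectX 12 frame rates side by side and converting the ratio into an equivalent per-two-year factor makes the gap with the 2X/3X expectations explicit. A rough sketch only: frame rate in one benchmark is an imperfect proxy for overall GPU speed.

```python
fps_2015 = 47  # GTX 980, DirectX 12
fps_2022 = 98  # RTX 3080, DirectX 12
years = 7

speedup = fps_2022 / fps_2015
# Constant factor per two-year period that would compound to the same total
per_two_years = speedup ** (2 / years)
print(f"Total speedup: {speedup:.2f}x")                 # Total speedup: 2.09x
print(f"Equivalent per two years: {per_two_years:.2f}x")  # Equivalent per two years: 1.23x
```

Roughly 1.23X per two years, versus the 2X or 3X the "laws" promise.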

I pride myself on asking the world’s dumbest questions so here goes… if building a new PC for activities other than gaming or video editing, why not use the integrated graphics on the motherboard? The latest motherboards will drive 4K monitors. The latest CPUs have a lot of cores, especially AMD’s, so they should be competent at tasks that are easy to parallelize. Back in 2020, at least, a graphics card was only about 2X the speed of AMD’s integrated graphics (Tom’s Hardware). Intel, it seems, skimps in this department.

One argument against this idea for those who want a fast desktop PC is that the fastest CPUs don’t seem to come with any integrated graphics. The AMD Threadrippers, for example, say “discrete graphics card required”. The Intel Core i9 CPUs with up to 16 cores do generally have “processor graphics”, but does it make sense to buy Intel? AMD’s CEO is frequently celebrated for identifying as a “woman” (example from IEEE, which does not cite any biologists) while Intel’s CEO identifies as a surplus white male. Tom’s Hardware says that the latest Intel CPUs are actually faster for gaming: “Intel holds the lead in all critical price bands … In terms of integrated graphics performance, there’s no beating AMD. The company’s current-gen Cezanne APUs offer the best performance available from integrated graphics with the Ryzen 7 5700G and Ryzen 5 5600G.”

Is the right strategy for building a new PC, then, to get the Ryzen 7 Pro 5750G (available only in OEM PCs; the 5700G is the home-builder’s version) and then upgrade to a discrete graphics card if one needs more than 4K resolution and/or if the Bitcoin craze ever subsides? The Ryzen 7 fits into an AM4 CPU socket so it won’t ever be possible to swap in a Threadripper. This CPU benchmarks in at 3337 (single thread)/25,045 (the 5700G is just a hair slower and can be bought at Newegg for $300). The absolute top-end Threadripper PRO (maybe $10,000?) is no faster for a single thread, but can run 4X faster if all 128 threads are occupied. What about the Intel i7 5820K that I bought in 2015 for $390? Its benchmark is 2011 for a single thread and 9,808 if all 12 threads are occupied.
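Dividing the benchmark scores quoted above gives the CPU improvement over the same seven years. A minimal sketch; the post does not name the benchmark suite, so the ratios are only as meaningful as those scores.

```python
# Benchmark scores quoted in the post (higher is better).
ryzen_5750g = {"single": 3337, "multi": 25045}
i7_5820k = {"single": 2011, "multi": 9808}  # bought in 2015

for kind in ("single", "multi"):
    ratio = ryzen_5750g[kind] / i7_5820k[kind]
    print(f"{kind}-thread: {ratio:.2f}x faster")
# single-thread: 1.66x faster
# multi-thread: 2.55x faster
```

Both ratios fall well short of the roughly 11X that a doubling every two years would predict.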

(For haters who are willing to pass up chips from a company led by a strong independent woman, the Intel i9-12900KS is about $600 and includes “processor graphics” capable of driving monitors up to 7680×4320 (8K) via DisplayPort. It can run up to 24 threads.)

These seem like feeble improvements considering the seven years that have elapsed. I guess a new PC could be faster due to the higher bandwidth that is now available between the CPU and the M.2 SSDs that the latest motherboards support. But why are people in such a fever to buy new PCs if, for example, they already have a PC that is SSD-based? Is it that they’re using the home PC 14 hours per day because they don’t go to work anymore?

Related:

- Best Integrated Graphics (from Feb 2022; AMD Vega 11 is the winner)

- ASUS “gaming desktop” with the Ryzen 7 5700G and also an RTX 3060 graphics card (could this ever make sense? Is there any software that can use both the GPU packaged with the CPU and simultaneously the GPU that is in the graphics card?)

- William Shockley, who needs to be written out of transistor history: “Shockley argued that a higher rate of reproduction among the less intelligent was having a dysgenic effect, and that a drop in average intelligence would ultimately lead to a decline in civilization. … Shockley also proposed that individuals with IQs below 100 be paid to undergo voluntary sterilization”

> Is the right strategy for building a new PC, then, to get the Ryzen 7 Pro 5750G (available only in OEM PCs; the 5700G is the home-builder’s version) and then upgrade to a discrete graphics card if one needs more than 4K resolution and/or if the Bitcoin craze ever subsides?

I won’t delve into the deeper questions being asked here, but what you have up above is exactly what I’m thinking of doing.

The only real problem with integrated graphics, of course, is that they share memory with the CPU. Hardcore gamers look at people like that as though they’re wearing “tighty whities.”

Alex: Sharing memory for graphics worked fine on the Apple II, no? Everyone loved Apple II graphics!

@Philg: Oh yuck! Not the Apple ][, for gawd’s sake no! Those were for kids who had to stay after school in detention but promised to do something “enriching” with their time.

Real businessmen-in-training went home to their IBM PC/ATs with the original CGA graphics card, to PEEK and POKE around in its vast 16kB of onboard memory using compiled BASIC, and then, as soon as 640×480 became available as a standard, to one of the ATi cards.

It’s truly amazing that 640×480 is still with us today as a vestigial nub of a format.

https://en.wikipedia.org/wiki/Color_Graphics_Adapter#:~:text=The%20original%20IBM%20CGA%20graphics,%2Dbit%20(16%20colors).

http://vgamuseum.info/index.php/cpu/item/67-ati-18700-small-wonder-graphics-solution
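The 16kB figure is easy to sanity-check, because CGA's graphics modes were sized to fit that memory. A minimal sketch of the framebuffer arithmetic (bytes = width × height × bits per pixel / 8), using CGA's 320×200 four-color and 640×200 two-color modes, plus a 640×480 16-color mode for comparison:

```python
def framebuffer_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Bytes needed to store one full screen at the given color depth."""
    return width * height * bits_per_pixel // 8


CGA_MEMORY = 16 * 1024  # 16 KB on the CGA card

modes = {
    "320x200, 4 colors": (320, 200, 2),
    "640x200, 2 colors": (640, 200, 1),
    "640x480, 16 colors": (640, 480, 4),
}
for name, (w, h, bpp) in modes.items():
    size = framebuffer_bytes(w, h, bpp)
    print(f"{name}: {size:,} bytes, fits in CGA memory: {size <= CGA_MEMORY}")
```

Both CGA modes need exactly 16,000 bytes; a 16-color 640×480 screen needs 153,600 bytes, nearly ten times what a CGA card carried.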

Then you’d have this pesky kid who loved his Commodore 64s with their sprites and later challenged you to a duel (which he won – for the time being!) with an Amiga. I hate that guy. Lol.

Fun days! But apropos of the current question, the AMD Ryzens you mention look fine to build a workstation, even a midrange graphics workstation, as long as you’re not doing 3D (just make sure Adobe supports it!). If you’re not a gamer it’ll be fine and keep your “Windows Experience” alive for a few more years at a bargain. I would only go to dedicated cards now if memory were more expensive and IF I really needed the firepower.

Don’t buy more gun than you can capably use. It’s a good rule.

On the PC, sharing memory is expensive; systems like the Nvidia Jetson or (I think!) the PlayStation have unified memory to simplify programming.

The lion kingdom has been stuck with nvidia cards because CUDA & GL shading language have never supported anything else. CPU execution of neural networks is nowhere close to a GPU.

For just blog writing, a Raspberry Pi would have done the job. Raspberry Pis ceased production 3 years ago, so maybe all those users are getting pushed into the high end.

Intel’s parts are manufactured internally so you help with domestic job creation. AMD’s parts are made by TSMC so while it’s a better semiconductor technology, you don’t help with domestic job creation.

If you just compare the clock speeds of the two cards rather than the “benchmark score”, the difference is huge. The issue (IMHO) is that the benchmark doesn’t show off the improvement. Gaming and video editing place completely different demands on these cards. Also see:

https://gpu.userbenchmark.com/Compare/Nvidia-RTX-3080-vs-Nvidia-GTX-980/4080vs2576

For integrated GPU, Apple Silicon is now clearly the winner (and likely expensive): https://www.apple.com/newsroom/2022/03/apple-unveils-m1-ultra-the-worlds-most-powerful-chip-for-a-personal-computer/

BTW: In my opinion, Moore’s Law and Huang’s Law have been “salted” with some kind of radioactive poison due to the simple fact that people really don’t need that much processing power.

One of my currently usable workstations is still effective with an Intel Core i5 650 CPU with just 16GB RAM and a GeForce 1030, which is such a low-end, pi**-poor card (30 watts, 14nm tech) that people laugh, and then laugh harder when I tell them it’s in a Dell Optiplex 980 case with a 230 watt power supply and the GeForce 1030 (2GB GDDR5 onboard) was all that would fit.

Well, it works fine for 2D graphics in Photoshop, the whole Adobe suite, and CorelDraw. I want to upgrade that workstation a little, which is why I’m thinking of the AMDs you mention in a proper chassis with a real power supply in case I need – in the future – a more powerful graphics card. But their onboard graphics should be fine for the software I currently use.

I was forced to put the GeForce 1030 into the Optiplex 980 because of ADOBE!

The whole show cost under $500. My biggest expenses by far are software.

Back to my main point: why double the processing power every two years when it’s absolutely superfluous? The much better thing to do is sell fractional versions of Moore/Huang law machines at inflationary prices.

Because the new army of Woke Software Developers will continue the crusade to make software as bloated and as buggy as possible. Need MOAR and MOAR power to keep the crap software running! And in these days of automatic updates and change for the sake of change, you don’t even have the luxury of simply not upgrading. Even with Linux one quickly discovers that if you want to install some newer software doohickey you’ve got to upgrade the whole shebang.

averros: Yes, one must “grow the team” and hire anyone in sight. Then the mediocre team must be kept busy and adds broken and superfluous nonsense. Open Source is the same these days.

As a non-gamer, I had always used integrated graphics until I had to engage with artificial intelligence (artificial stupidity in most cases). The main benefit of the latest cards seems to be the larger memory (4GB is not enough, 12-24 GB is needed because copying data between CPU and GPU memory is the most expensive operation there is).

I only need a Threadripper for compiling C++ software, which still follows Moore’s law in doubling the bloat every 6 months.
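To put rough numbers on the CPU-to-GPU copy cost mentioned above: a PCIe 4.0 x16 link peaks at about 31.5 GB/s (16 GT/s per lane with 128b/130b encoding), while the RTX 3080's GDDR6X delivers about 760 GB/s (19 Gbps per pin on a 320-bit bus). The sketch compares moving a 12 GB working set over each path; both are theoretical peaks, real transfers are slower, and 12 GB is simply the low end of the range quoted above.

```python
def pcie_bandwidth_gb_s(gt_per_s: float, lanes: int) -> float:
    """Theoretical PCIe bandwidth in GB/s, assuming 128b/130b encoding (PCIe 3.0 and later)."""
    return gt_per_s * lanes * (128 / 130) / 8


def gddr_bandwidth_gb_s(gbps_per_pin: float, bus_width_bits: int) -> float:
    """Theoretical on-card memory bandwidth in GB/s."""
    return gbps_per_pin * bus_width_bits / 8


working_set_gb = 12.0
pcie = pcie_bandwidth_gb_s(16.0, 16)   # PCIe 4.0 x16
vram = gddr_bandwidth_gb_s(19.0, 320)  # RTX 3080 (10 GB), GDDR6X

print(f"PCIe 4.0 x16: {pcie:.1f} GB/s, {working_set_gb / pcie * 1000:.0f} ms for 12 GB")
print(f"On-card VRAM: {vram:.0f} GB/s, {working_set_gb / vram * 1000:.0f} ms for 12 GB")
```

The link between CPU and GPU is more than 20 times slower than the card's own memory, which is why keeping the whole working set on the card matters.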

> 4GB is not enough, 12-24 GB is needed because copying data between CPU and GPU memory is the most expensive operation there is.

I agree with this and I don’t do much compilation of C++ right now. If I did, I would probably want a faster machine.

As for the question “But why are people in such a fever to buy new PCs if, for example, they already have a PC that is SSD-based?”:

The demographics I see include: those processing larger data sets, usually for personal investment analysis; those who “need” a PC; those heading off to college for the first time; and those who have “run” out of space and won’t accept a cloud subscription, even one that comes with a free storage tier. That last group simply buys a new PC with a bigger hard drive and moves the data from the old machine to the new one.

I know this article focused on “building for something other than gaming or video editing”, but if you do write a post on the “something other”, it would be interesting to see whether any “user” has experimented with OToy/Render. The details of this product can be found in the blog post listed below. In summary, this company is helping to reduce the costs one can incur by using cloud-provider GPUs. After all, a cloud provider has very little incentive to process your transactions quickly when it is billing you for “compute time”.

Article on “Renting GPU with a cloud provider”:

“Kyle Samani, Co-founder and Managing Partner of Multicoin on Accelerating the Adoption of Sovereign Software, Designing Token Systems that Capture Value, and Why Composability is the Key to Crypto” (https://howardlindzon.com/kyle-samani-co-founder-and-managing-partner-of-multicoin-on-accelerating-the-adoption-of-sovereign-software-designing-token-systems-that-capture-value-and-why-composability-is-the-key-to-crypto/) See (23:04) – The case for compute (Render)

> Let’s look at the $1,050 RTX 3080 for comparison. Using the same inflation rate as Palm Beach County real estate, $1,050 today is a little less than $556 in 2015 dollars.

US median home price seems like a better metric for inflation. According to the St. Louis Fed web page, the median home price increased from $290k in 2015 to $408k at present. According to the moneychimp compound interest calculator, that is roughly 6% annual home-price inflation. For comparison, my FDIC-insured bank pays 1% before tax.
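The annual rate depends on how many years “2015 to present” spans. A minimal compound-annual-growth-rate sketch, rate = (end / start)^(1 / years) − 1, applied to the median-price figures above for an assumed six- or seven-year span, and to the post’s $556 → $1,050 card prices over seven years for comparison:

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1 / years) - 1


# US median home price in $k (commenter's figures); the span is an assumption.
for years in (6, 7):
    print(f"Median home, {years} years: {cagr(290, 408, years):.1%} per year")

# Graphics-card prices from the post, April 2015 to April 2022.
print(f"$556 -> $1,050 over 7 years: {cagr(556, 1050, 7):.1%} per year")
```

That works out to about 5.9% per year over six years or 5.0% over seven, versus roughly 9.5% per year implied by the post’s Palm Beach comparison.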

I assembled a desktop PC last year at this time, starting with storage: an M.2 SSD with read/write speeds of 7000/5000 MB/s. Good for virtual machines. Then a 7nm CPU (just because) and a motherboard with dual M.2 slots, Wi-Fi 802.11ax, and Bluetooth 5.1 (just because). Discrete graphics card: ~$100.

My motherboard with 16GB RAM and AMD Phenom™ II X4 Black CPU are about 12 years old. I added an ATI Radeon RX800 Sapphire Nitro or whatever. It’s running great. I don’t need to buy a new computer. Maybe I can get another 5 years out of it.

Related – how progress in chips slowed for 10 years while waiting for “extreme ultra violet” lithography:

I agree with the premise… AFAIK there’s no need to get a discrete video card unless you are gaming, video editing, etc. You can always build the computer without it at first and then put one in if you find you need it. Just be sure you have space in the case, adequate power, etc., up front.

I built a system around a Ryzen 3400G in November 2020, and it was great. I’m not a gamer, but was curious how it would perform; it seemed to play games (GTA V, Snowrunner) at 720p anyway. Recently, I’ve started playing around with training some simple machine vision models, and it was too slow. I started renting time on AWS EC2 GPU-accelerated instances, and after training a few models, I decided that for my purposes it would be cheaper to build a setup at home, so I just acquired an nVidia 3060 card. In training models, it seems comparable in performance to the cheapest AWS EC2 GPU-accelerated instance. I have looked at, but haven’t tried, the https://vast.ai/ GPU rental service, where individuals rent out free time on their PCs; at a glance, the pricing looks much better than Amazon’s.