Based on searching the ACM journal database, the dream of parallel computing seems to date back roughly 60 years. Some folks at Westinghouse in 1962 imagined partial differential equations being solved, satellites being tracked, and other serious problems being attacked.

By 1970, multiple processors were envisioned working together to serve multiple users on a time-sharing system (“A processor allocation method for time-sharing”; Mullery and Driscoll (IBM), in CACM). In 1971, a 4-core machine was set up for keeping U.S. Navy ships out of bad weather: “4-way parallel processor partition of an atmospheric primitive-equation prediction model” (Morenoff, et al.).

What about today? A friend recently spent $3,000 on a CPU. Why did he need 128 threads? “I like to keep a lot of browser windows open and each one is running JavaScript, continuously sending back data to advertisers, etc.”

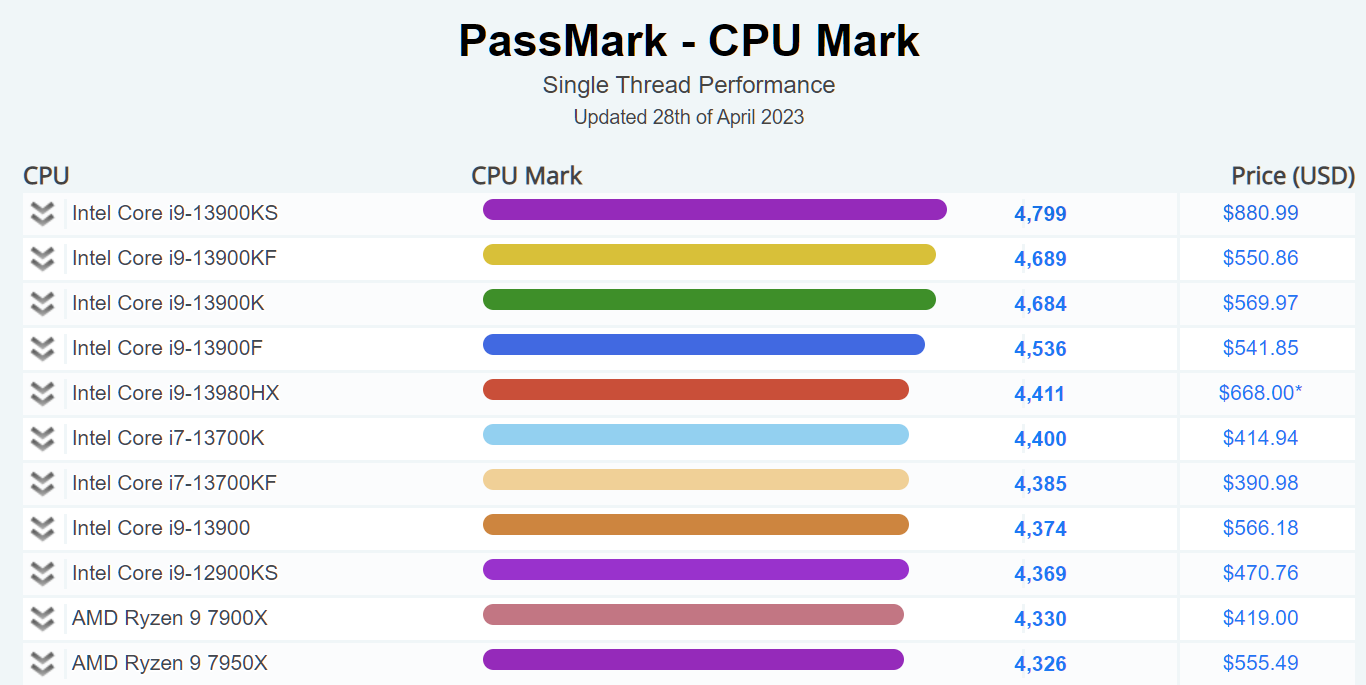

The benchmark nerds say that you don’t need to spend a lot to maximize single-thread performance:

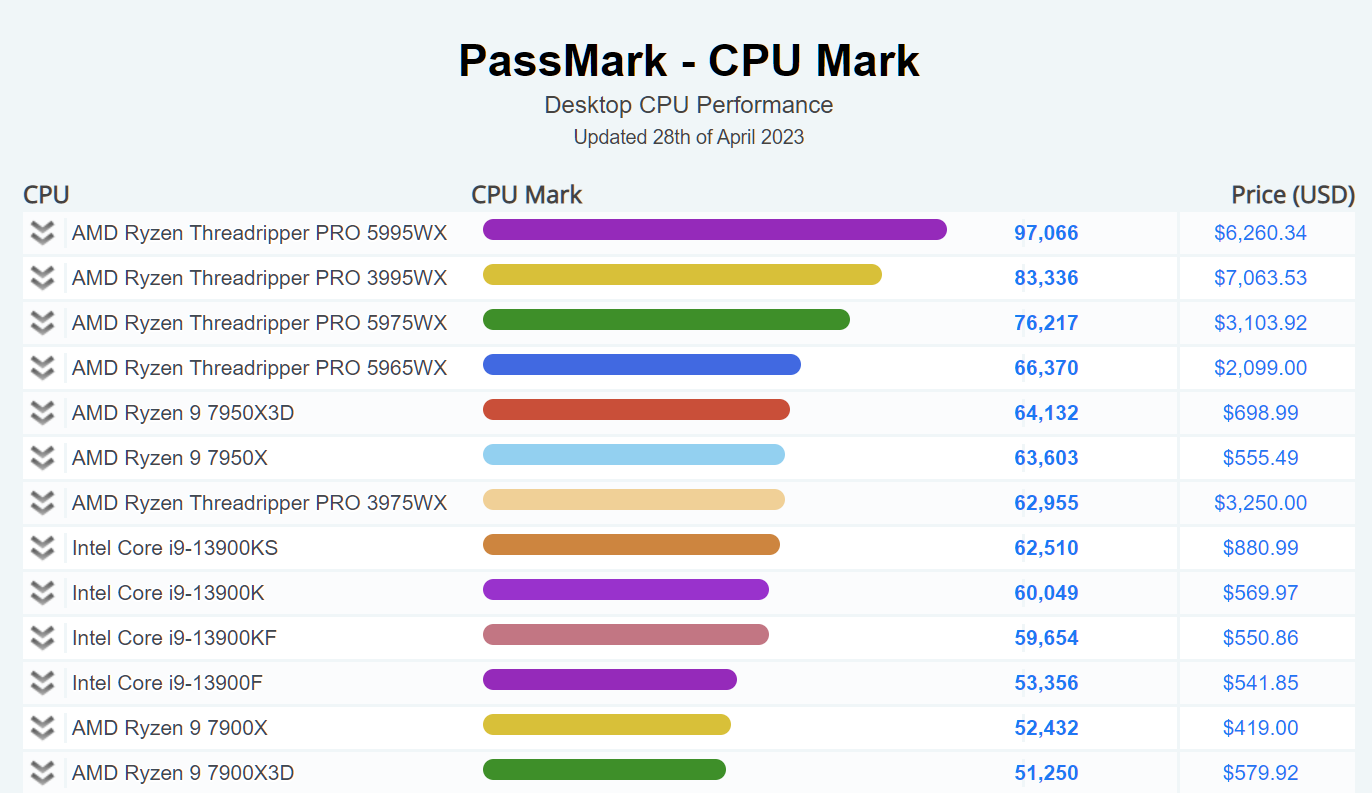

And you also get pretty close to the best benchmark result with a 550-Bidie CPU:

But maybe the benchmarks don’t fully capture the multi-window browser support capabilities of the $6,000+ CPUs?

Parallel computing is very difficult. Yes, there are some problems that parallelize very nicely, but most of the things that most people do most of the time don’t. The practical performance that you or I would see operating a desktop computer has barely improved in the last 10 years.
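One way to put a number on "doesn't parallelize" is Amdahl's law, which bounds the speedup when only part of a job can be spread across cores. The sketch below is illustrative only: the 50% parallel fraction is an assumption, not a measurement of any real desktop workload, and `amdahl_speedup` is a hypothetical helper name.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Upper bound on speedup when only part of the work can run in parallel."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # Assumed workload: half of the run time is inherently serial.
    for cores in (4, 16, 128):
        print(f"{cores:>3} cores: at most {amdahl_speedup(0.5, cores):.2f}x faster")
```

Under that assumption, going from 4 cores to 128 can never get the job past a 2x speedup, which is roughly the complaint above.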

The hardware folks haven’t been able to scale clock speed or single-core performance for a while. They can shove a ton of transistors onto a die, so for want of a better approach they just copy and paste CPU cores and leave it up to the software guys to figure out what to do with them.

Meanwhile the compilers and development tools available for optimizing for multithreaded architectures have barely improved in the last 20 years.

Finally, the thing that would most improve computer performance would be lower memory latency and higher bandwidth. In fact, as you increase the number of cores, memory bandwidth and latency become even more critical. If you look at this as the number of clock cycles needed to fetch data from memory, this performance metric has degraded astronomically. If you need something and it’s not in cache or, worse, you have to flush the cache, you stall for hundreds of clock cycles.
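As a rough sketch of that ratio, the stall can be estimated by multiplying memory latency by clock frequency. The figures below (about 100 ns for a trip to DRAM on a 4 GHz desktop, 200 ns on an 8 MHz machine of the 1980s) are illustrative assumptions, not measurements, and `stall_cycles` is a hypothetical helper.

```python
def stall_cycles(latency_ns: float, clock_ghz: float) -> float:
    """Clock cycles that tick by while the CPU waits latency_ns for memory."""
    # A clock of N GHz completes N cycles per nanosecond.
    return latency_ns * clock_ghz


if __name__ == "__main__":
    # Assumed numbers for illustration only.
    print("1980s machine :", stall_cycles(latency_ns=200, clock_ghz=0.008), "cycles")
    print("modern desktop:", stall_cycles(latency_ns=100, clock_ghz=4.0), "cycles")
```

Under these assumptions memory got about twice as fast in absolute terms while the cost of a miss, counted in cycles, grew by a couple of orders of magnitude.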

I lived near an 11-storey building that was purpose-built in the 1980s by Shell Oil to house a Cray supercomputer + support staff. Engineers+geologists would submit 20,000-element models, run reservoir simulations for 3-7 days to get a result, make adjustments, then repeat.

Today, much larger models with 1M+ elements are run in real time, using GPU graphics cards as parallelized math engines.

They have 64 cores & 128 threads at least. Amazing how much AMD is able to raise prices now that Intel dropped out. Back when Cyrix was around, the most anyone could charge was $1000. A lot more work is done by the GPU nowadays & those max out at $1500.

Nope. An Nvidia H100 as used by ChatGPT costs around $36K.

I borrowed the PNG and asked my Dad. One of the researchers’ names seemed familiar to me, so I thought he might have mentioned it somewhere along the way. I was a little surprised when he wrote back via email:

——————————->

“I never heard of this. I know inherently it exists because computers used to solve for irrational numbers use it. The IBM machines I was exposed to were all fixed length machines which could do this by subroutine but I can see where it is much less efficient…

…It amazes me how much thought went into doing this before they built the first one. The machine designed by Turing is the prototype of the digital computer. He had solved all the problems. Others worked out the processing dynamics, the amount of circuitry you would need to solve problems, how data would be processed (Little Endian or Big Endian.) Big Endian was much faster but required much more circuitry. The first business machines I’m familiar with were CADETs – “Can’t Add Doesn’t Even Try.” It looked up the sum in a table.”

<———————————–

I think J.C.R. Licklider surprised a world not ready to hear it in re: "Why do you need 128 threads?" with "The Computer as a Communication Device," but that paper was also ahead of its time.

https://internetat50.com/references/Licklider_Taylor_The-Computer-As-A-Communications-Device.pdf

BTW don’t be surprised if there is an error or two in his memory; he’s in his late 70s and today was a long and tiring day. Sometimes he “flips a bit” and doesn’t realize it.

“The first business machines I’m familiar with were CADETs – “Can’t Add Doesn’t Even Try.” It looked up the sum in a table.”

That’s no-nonsense engineering!
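For anyone curious what "looked up the sum in a table" might look like, here is a minimal sketch of decimal addition by table lookup. It is not a model of the actual machine's circuitry or memory layout: the names `ADD_TABLE` and `table_add` are made up for illustration, and the table is precomputed with ordinary arithmetic where the real machine would have had it sitting in memory.

```python
# Precomputed table: ADD_TABLE[a][b] -> (sum digit, carry out) for decimal digits.
ADD_TABLE = [[((a + b) % 10, (a + b) // 10) for b in range(10)] for a in range(10)]


def table_add(x: str, y: str) -> str:
    """Add two non-negative decimal strings using only table lookups per digit."""
    width = max(len(x), len(y))
    x, y = x.zfill(width), y.zfill(width)
    digits = []
    carry = 0
    # Work from the least significant digit to the most significant.
    for dx, dy in zip(reversed(x), reversed(y)):
        digit, carry_a = ADD_TABLE[int(dx)][int(dy)]  # look up digit + digit
        digit, carry_b = ADD_TABLE[digit][carry]      # look up result + carry in
        carry = carry_a | carry_b                     # at most one of them is 1
        digits.append(str(digit))
    if carry:
        digits.append("1")
    return "".join(reversed(digits))


if __name__ == "__main__":
    print(table_add("999", "1"))
    print(table_add("123", "456"))
```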

Based on what I’ve read, support for multi-threading in modern computer languages seems to be pretty weak. And the debugging seems to be a nightmare.

I’ve seen situations at work which led to bugs which were, how shall we say, very inconvenient for everyone involved.
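A minimal sketch of the classic version of such a bug, using Python's standard `threading` module: several threads update a shared counter. The `SafeCounter` and `run_workers` names are hypothetical. The update is a read-modify-write, so without the lock two threads can read the same old value and one increment is lost, and because it depends on timing it may not show up on any given run, which is what makes these bugs so painful to reproduce.

```python
import threading


class SafeCounter:
    """Shared counter whose increments are protected by a lock."""

    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()

    def increment(self) -> None:
        # Without the lock, this read-modify-write could interleave with
        # another thread's and silently drop an update.
        with self._lock:
            self.value += 1


def run_workers(workers: int, increments: int) -> int:
    """Start `workers` threads that each increment the counter `increments` times."""
    counter = SafeCounter()

    def work() -> None:
        for _ in range(increments):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value


if __name__ == "__main__":
    print(run_workers(workers=4, increments=10_000))
```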

Anyway, from a practical standpoint for _most_ consumers browsing the web, more memory seems a lot more important than having a powerful CPU.

There are programming languages and libraries with decent support for parallel programming.

What do you consider strong support for parallel programming? Relatively weak debugging support is natural on pre-emptive multitasking operating systems. How could debugging non-deterministically scheduled threads, when the debugger is itself running either on a separate thread or in its own process, be made as easy as debugging sequential code?

> Some folks at Westinghouse in 1962 imagined partial differential equations being solved

What they surely failed to imagine is that Westinghouse would become a brand name for cheap Chinese TVs.