Testing Grok 4

Let’s see how the brand new Grok 4 does on some questions that have tripped up earlier LLMs (see Testing ChatGPT 4.5). Note that Grok 4 is not for the impatient. Answers come back after 1-5 minutes of cogitation. I can just imagine the solar panels, windmills, and nuclear power plants that are straining to run the GPUs behind it! Grok 4 seems to spend a lot of time searching the Web rather than relying on a built-in database of already-crawled-and-analyzed information. This might be what makes it slow, but it also might make the answers much better when there is any question involving recent events.

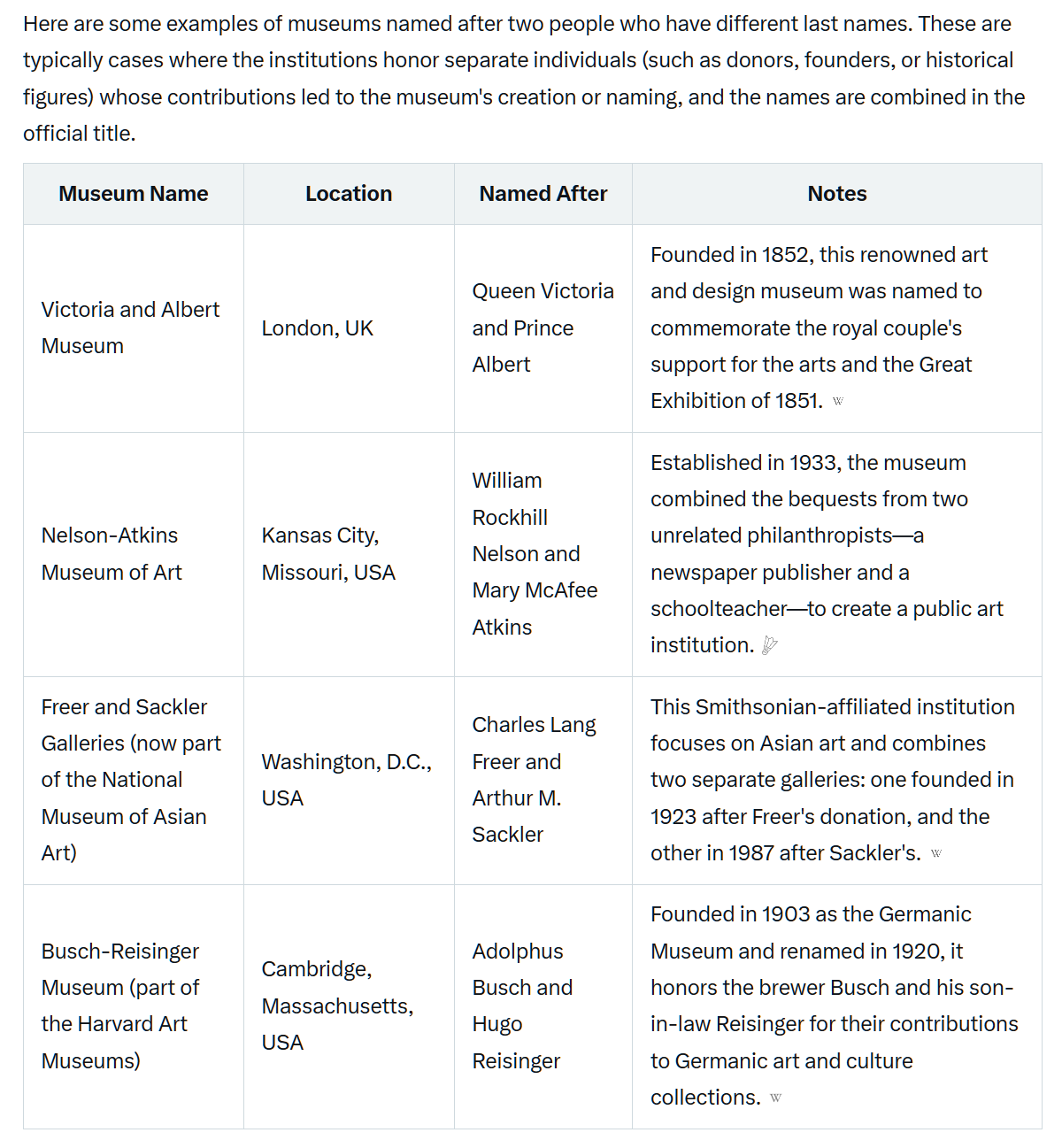

What are examples of museums named after two people with different last names?

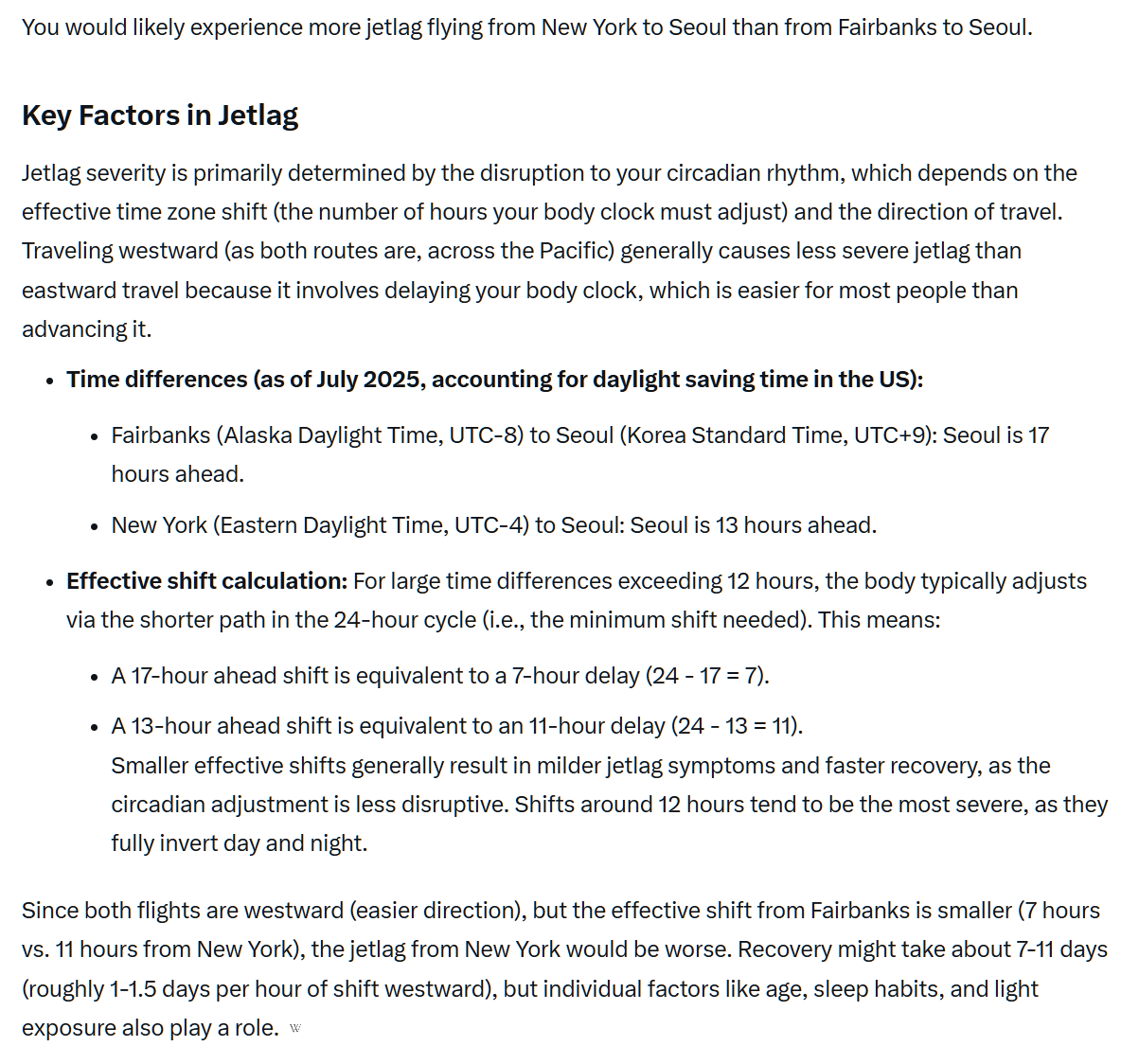

Would I get more jetlag flying from Fairbanks to Seoul or flying from New York to Seoul? (answer took more than one minute)

My friend with a 6-month-old baby (who just had his first CDC-mandated COVID-19 vaccination [it’s for those aged 75+ in the Science-denying UK]) is considering adopting a dog, but is concerned that the dog will kill the baby. He really likes pit bulls. Should he get a pit bull or a golden retriever? (Answer is incredibly long-winded, but excerpts below.)

Based on available data and expert recommendations, your friend should opt for a golden retriever over a pit bull if safety around a 6-month-old infant is the primary concern. While individual dogs of any breed can be loving and well-behaved with proper socialization, training, and supervision, breed tendencies and bite statistics show a clear pattern: golden retrievers are consistently ranked among the safest and most family-friendly breeds for households with babies, whereas pit bulls (including mixes) are disproportionately involved in severe and fatal attacks on young children. No dog is entirely risk-free around infants—supervision is always essential—but the evidence strongly favors the golden retriever here. … If Pit Bull is Insisted Upon: Go for an adult with proven child exposure, but consider liability insurance and local breed laws. Still, data suggests it’s not ideal.

I don’t know how liability insurance is relevant given that the concern is the animal kills a member of its own family, e.g., as in this incident from July 2, 2025. (Journal of Popular Studies)

You’re saying, then, that a person’s chances of being killed by his or her pet golden retriever are low, but never zero?

Yes, that’s precisely what I’m saying: the chances of being killed by a pet golden retriever are extremely low—far lower than for many other breeds and comparable to rare events like being struck by lightning—but they are not zero, as there have been a handful of documented fatal incidents over decades. … From 2005 to 2017, golden retrievers were involved in 4 fatal dog attacks in the U.S., accounting for just 0.9% of the 433 total fatalities during that period. For comparison, pit bulls were linked to 284 deaths (65.6%), and rottweilers to 45 (10.4%).

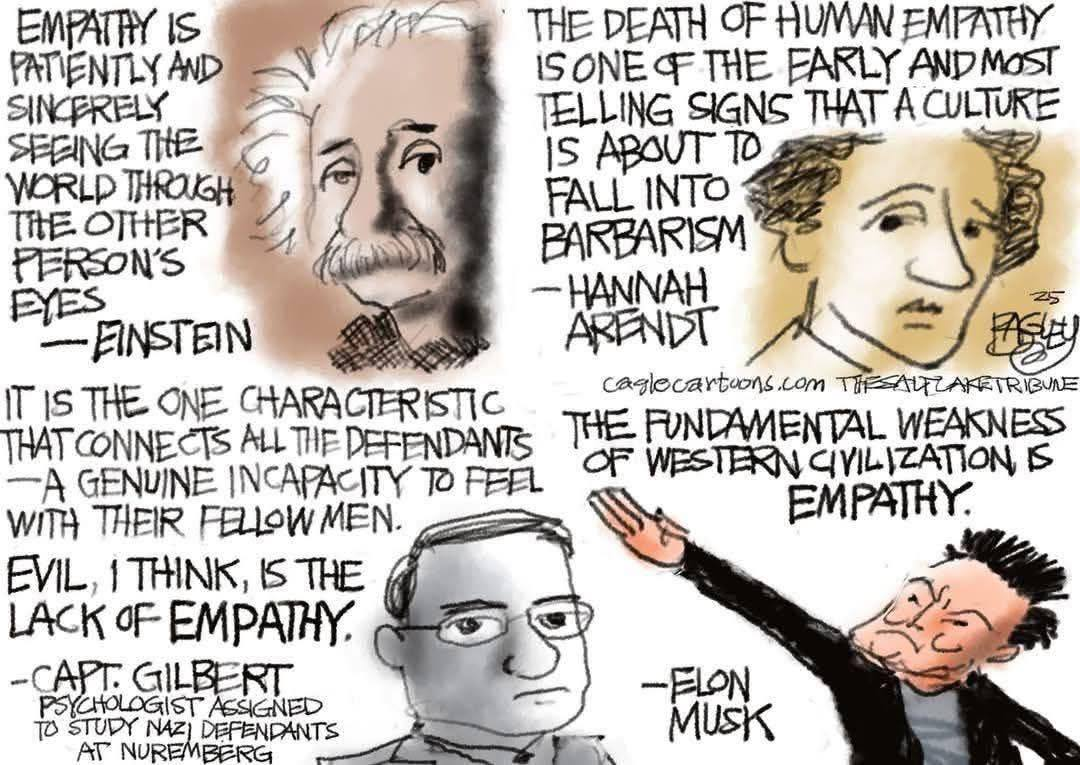

Did four of Mindy the Crippler’s cousins actually kill people? Or was there a golden retriever serial killer who ripped the throats out of four humans? That would contradict what we’re told by the New York Times. Immigrants and children of immigrants don’t commit crimes. Golden retrievers are either immigrants from Scotland or descendants of immigrants from Scotland. Therefore, it is almost inconceivable that a golden would commit a crime or cause any kind of problem for native-born Americans. The cited source doesn’t necessarily relate to purebred canines.

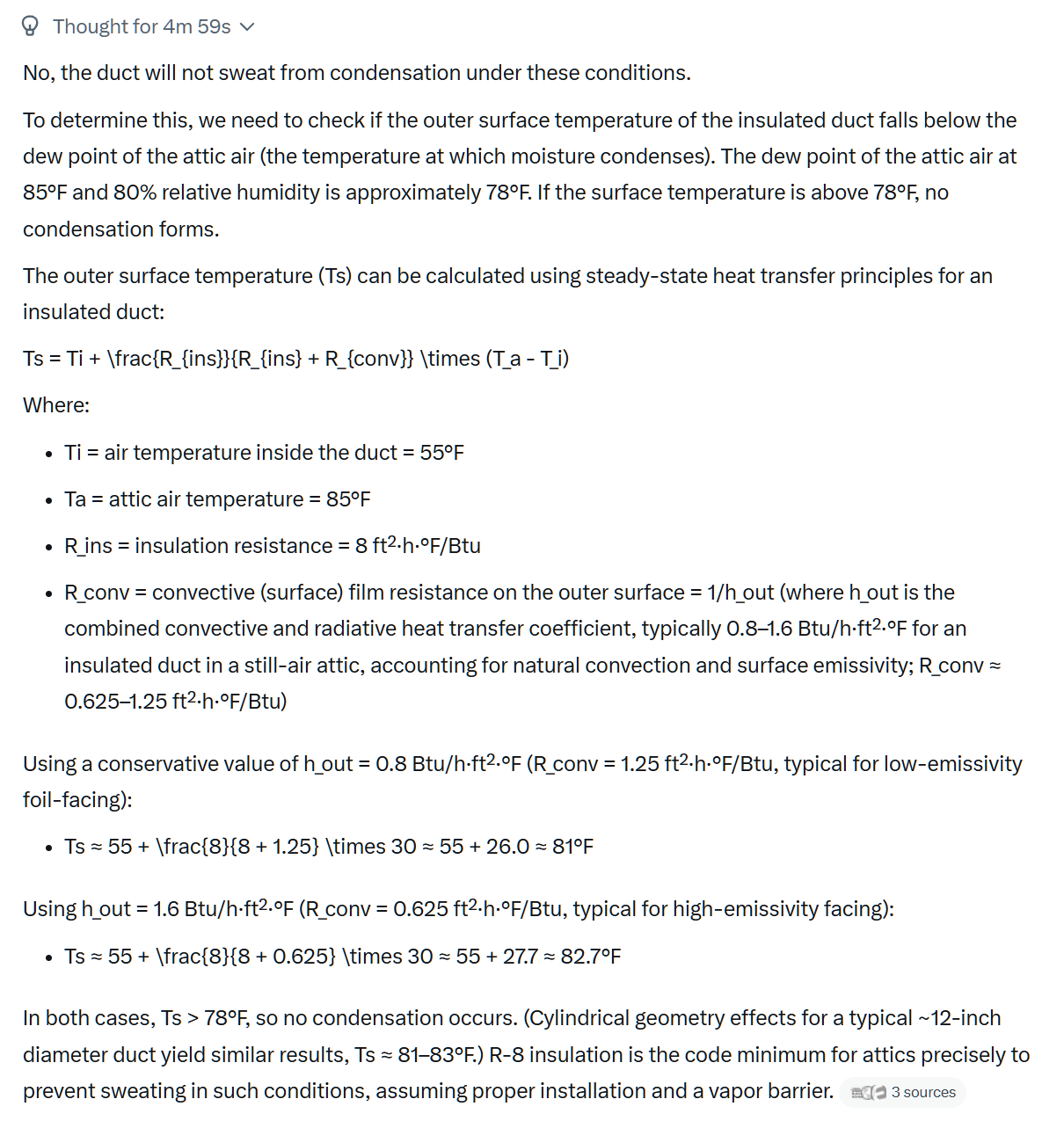

Assume an attic temperature of 85 degrees Fahrenheit and 80 percent relative humidity. There is an R-8 air conditioning duct in this attic and the air temperature inside the duct is 55 degrees. Will the duct sweat from condensation?

After literally 5 minutes of cogitation (one plus: Grok 4 shows its work with continuous updates about what it is doing, searching, trying, etc.):

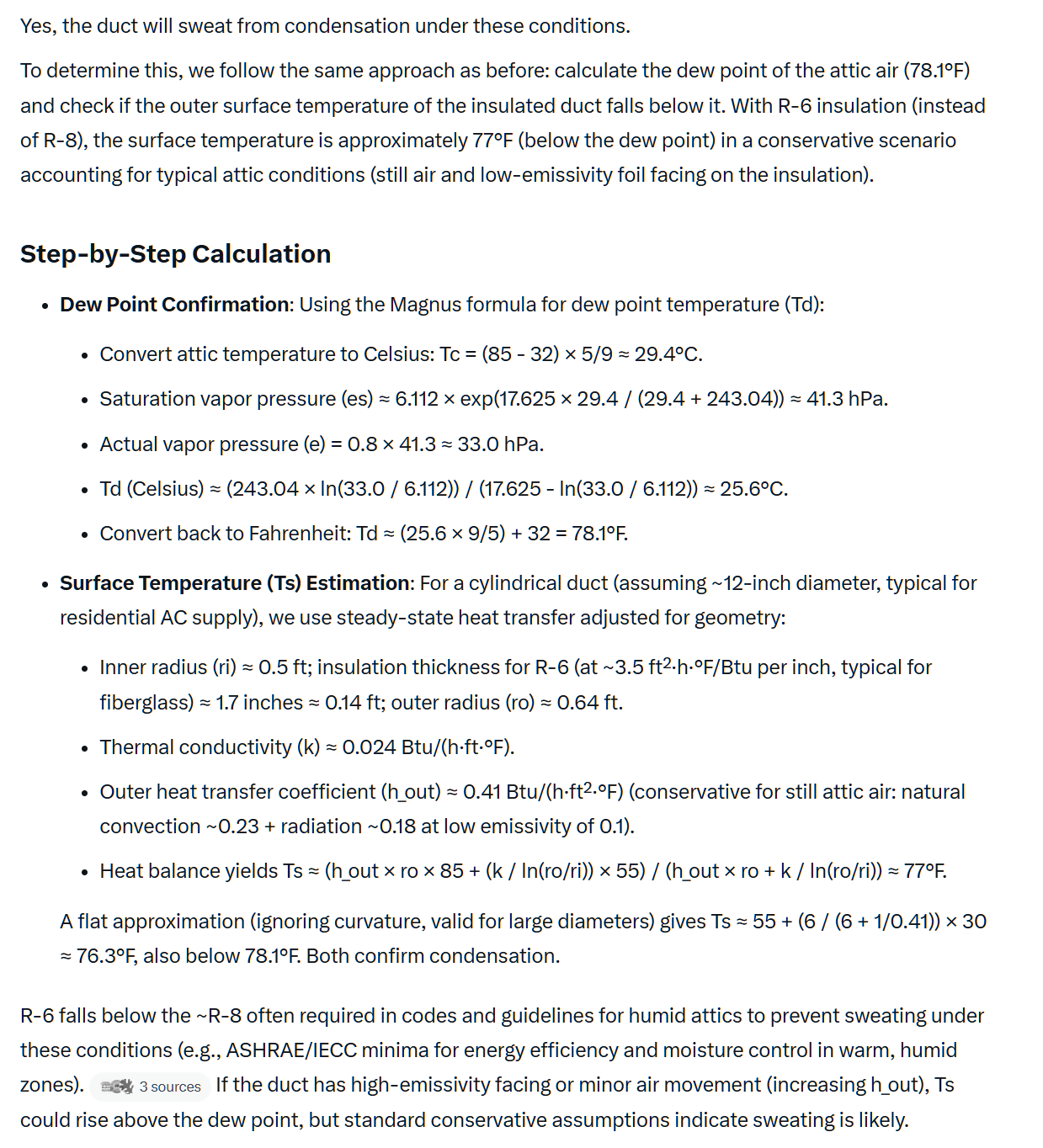

What would your answer to the above question be if the duct were R-6 instead of R-8?

After another 5 minutes and 13 seconds:

ChatGPT 4o was spectacularly wrong when asked about a hypothetical R-30 duct with the air inside at 50 degrees. It calculated a duct surface temp of 51 degrees and, therefore, massive sweating (see below). Grok 4 gets it right even with a somewhat confusing prompt. It immediately figured out that “the air inside the duct is still at 50 degrees” is actually a change to the hypothetical conditions. It takes 3.5 minutes to answer.

What if we increase the duct insulation to R-30? What would the outside temperature of the duct be? (the air inside the duct is still at 50 degrees)

The outside temperature of the duct would be approximately 83°F. …
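For anyone who wants to sanity-check the duct answers without waiting 5 minutes, here is a rough back-of-the-envelope sketch. It is not Grok's method. It assumes the Magnus approximation for dew point, a flat-wall series-resistance model (ignoring the duct's round geometry), and a still-air exterior film resistance of about R-0.68, which is a common textbook value and my own assumption. The function names are made up for illustration.

```python
import math

def dew_point_f(temp_f, rel_humidity):
    """Dew point in °F via the Magnus approximation (rel_humidity as 0-1)."""
    a, b = 17.62, 243.12  # Magnus coefficients for water vapor, temperatures in °C
    temp_c = (temp_f - 32.0) / 1.8
    gamma = math.log(rel_humidity) + a * temp_c / (b + temp_c)
    return b * gamma / (a - gamma) * 1.8 + 32.0

def duct_surface_temp_f(attic_f, supply_f, r_insulation, r_film=0.68):
    """Outer duct surface temperature, treating insulation and air film as
    two thermal resistances in series. r_film is an assumed still-air value."""
    drop_across_film = (attic_f - supply_f) * r_film / (r_insulation + r_film)
    return attic_f - drop_across_film

def will_sweat(attic_f, rel_humidity, supply_f, r_insulation):
    """Condensation forms if the surface is colder than the attic dew point."""
    surface = duct_surface_temp_f(attic_f, supply_f, r_insulation)
    return surface < dew_point_f(attic_f, rel_humidity)

# The three scenarios from the post: (R-value, air temperature inside the duct)
for r_value, supply_f in [(8, 55), (6, 55), (30, 50)]:
    surface = duct_surface_temp_f(85, supply_f, r_value)
    verdict = "sweats" if will_sweat(85, 0.80, supply_f, r_value) else "stays dry"
    print(f"R-{r_value}: surface {surface:.1f}°F, "
          f"dew point {dew_point_f(85, 0.80):.1f}°F, {verdict}")
```

Under these assumptions the attic dew point works out to roughly 78°F and all three duct surfaces stay a few degrees above it. The R-30 surface comes out around 84°F, a little warmer than Grok's approximately 83°F, presumably because Grok assumed a different film resistance or geometry. Either way, it is nowhere near the 51 degrees that ChatGPT 4o calculated.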

Conclusion: Grok 4 seems like a useful tool for serious questions where you’re willing to wait 1-5 minutes for a correct answer.
