A corrected history of mRNA vaccines

According to the world’s most prestigious scientific journal, here’s how the history of mRNA vaccines begins:

In late 1987, Robert Malone performed a landmark experiment. He mixed strands of messenger RNA with droplets of fat, to create a kind of molecular stew. Human cells bathed in this genetic gumbo absorbed the mRNA, and began producing proteins from it.

Realizing that this discovery might have far-reaching potential in medicine, Malone, a graduate student at the Salk Institute for Biological Studies in La Jolla, California, later jotted down some notes, which he signed and dated. If cells could create proteins from mRNA delivered into them, he wrote on 11 January 1988, it might be possible to “treat RNA as a drug”. Another member of the Salk lab signed the notes, too, for posterity. Later that year, Malone’s experiments showed that frog embryos absorbed such mRNA. It was the first time anyone had used fatty droplets to ease mRNA’s passage into a living organism.

Those experiments were a stepping stone towards two of the most important and profitable vaccines in history: the mRNA-based COVID-19 vaccines given to hundreds of millions of people around the world. Global sales of these are expected to top US$50 billion in 2021 alone.

The above Nature article is dated September 14, 2021. In late December 2021/early January 2022, the above-referenced Robert Malone was censored by YouTube (UK Independent) and unpersoned by Twitter (Daily Mail). A mixture from the two sources:

Given the doctor’s contested views on Covid-19, including his opposition to vaccine mandates for minors, the act by YouTube has sparked several accusations of censorship amongst right-wing politicians and political commentators.

Malone even questioned the effectiveness of Pfizer’s Covid-19 vaccine in a tweet posted the day before his account was suspended on December 30.

He told Rogan that government-imposed vaccine mandates are destroying the medical field ‘for financial incentives (and) political a**-covering’

Malone responded by questioning: ‘If it’s not okay for me to be a part of the conversation even though I’m pointing out scientific facts that may be inconvenient, then who is?’

(What did Malone do for work between 1987 and now? According to Wikipedia, he graduated from medical school in 1991, was a postdoc at Harvard Medical School (yay!), and then worked in biotech, including on vaccine projects. The line “Until 2020, Malone was chief medical officer at Alchem Laboratories, a Florida pharmaceutical company” suggests that he might live here in the Florida Free State.)

How long would we have to wait for a corrected history of mRNA vaccines from which the unpersoned Malone would be absent? Until January 15, 2022, when the New York Times published “Halting Progress and Happy Accidents: How mRNA Vaccines Were Made”, a 30-screen story on my desktop PC. The Times history starts in medias res, but if we scroll down to the point in time where Nature credits Malone, both Malone and Salk are missing:

The vaccines were possible only because of efforts in three areas. The first began more than 60 years ago with the discovery of mRNA, the genetic molecule that helps cells make proteins. A few decades later, two scientists in Pennsylvania decided to pursue what seemed like a pipe dream: using the molecule to command cells to make tiny pieces of viruses that would strengthen the immune system.

The second effort took place in the private sector, as biotechnology companies in Canada in the budding field of gene therapy — the modification or repair of genes to treat diseases — searched for a way to protect fragile genetic molecules so they could be safely delivered to human cells.

The third crucial line of inquiry began in the 1990s, when the U.S. government embarked on a multibillion-dollar quest to find a vaccine to prevent AIDS. That effort funded a group of scientists who tried to target the all-important “spikes” on H.I.V. viruses that allow them to invade cells. The work has not resulted in a successful H.I.V. vaccine. But some of these researchers, including Dr. Graham, veered from the mission and eventually unlocked secrets that allowed the spikes on coronaviruses to be mapped instead.

Perhaps the Times just didn’t have enough space in 30 screens of text to identify Malone? The journalists and editors found space to write about someone who wasn’t involved in any way:

“It was all in place — I saw it with my own eyes,” said Dr. Elizabeth Halloran, an infectious disease biostatistician at the Fred Hutchinson Cancer Research Center in Seattle who has done vaccine research for over 30 years but was not part of the effort to develop mRNA vaccines. “It was kind of miraculous.”

There was plenty of space for a photo of an innumerate 79-year-old trying to catch up on six decades of biology. The caption:

From left: Dr. Graham, President Biden, Dr. Francis Collins and Kizzmekia Corbett. The scientists were explaining the role of spike proteins to Mr. Biden during a visit to the Viral Pathogenesis Laboratory at the N.I.H. last year.

For folks in Maskachusetts who’ve had three shots and are in bed hosting an Omicron festival, the article closes with an inspiring statistic:

He was in his home office on the afternoon of Nov. 8 when he got a call about the results of the study: 95 percent efficacy, far better than anyone had dared to hope.

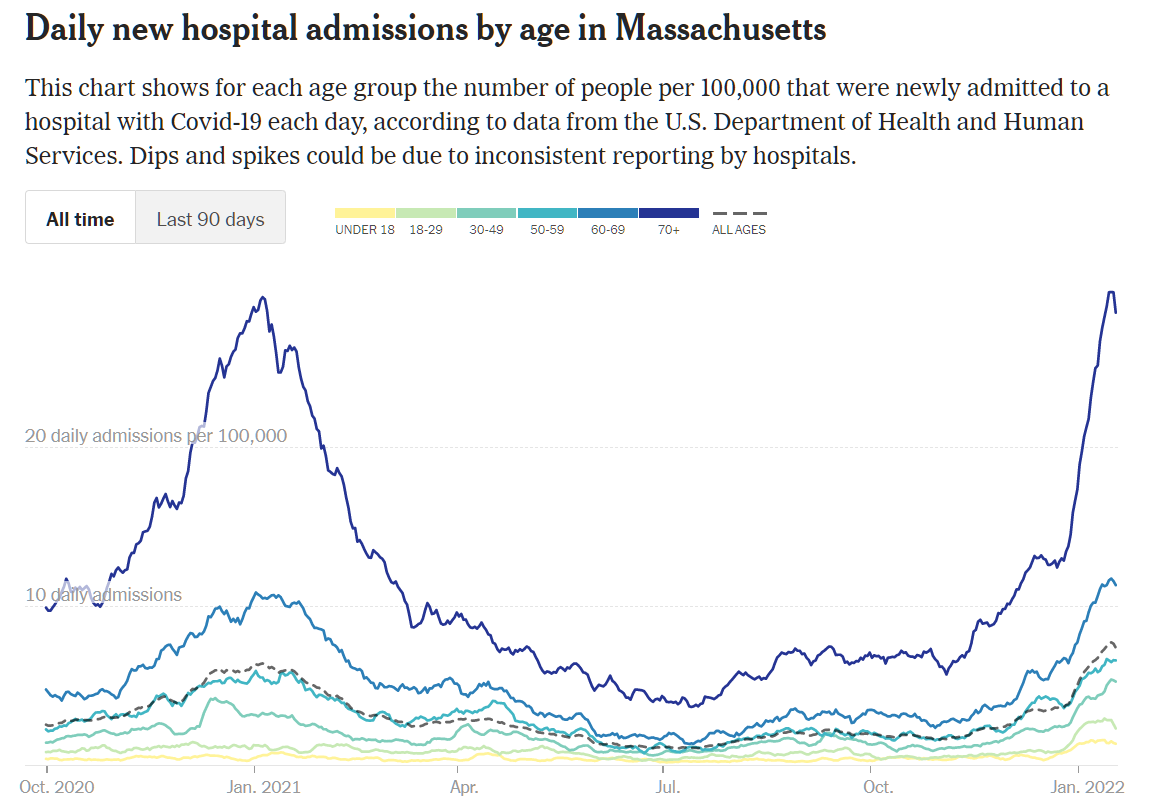

See also the NYT for Massachusetts hospitalization stats in a population that is 95 percent vaccinated with a 95 percent effective vaccine:

So… it was two weeks from Robert Malone being unpersoned by the Silicon Valley arbiters of what constitutes dangerous misinformation to an authoritative history in which Malone is not mentioned.
