She Has Her Mother’s Laugh: The Powers, Perversions, and Potential of Heredity by Carl Zimmer says that almost everything is heritable and that genetics is the mechanism for heritability. However, there is one big exception… intelligence.

Why does this matter? The book reminds us that the idea that a lack of intelligence will render a person dependent on welfare goes back at least to the 1930s:

The Great Depression was reaching its depths when [Henry Herbert] Goddard came back to Vineland, and he blamed it largely on America’s lack of intelligence: Most of the newly destitute didn’t have the foresight to save enough money. “Half of the world must take care of the other half,” Goddard said.

The idea that intelligence could not be explained by heredity is similarly old:

[British doctor Lionel] Penrose entered the profession as a passionate critic of eugenics, dismissing it as “pretentious and absurd.” In the early 1930s, eugenics still had a powerful hold on both doctors and the public at large—a situation Penrose blamed on lurid tales like The Kallikak Family. While those stories might be seductive, eugenicists made a mess of traits like intelligence. They were obsessed with splitting people into two categories—healthy and feebleminded—and then they would cast the feebleminded as a “class of vast and dangerous dimensions.” Penrose saw intelligence as a far more complex trait. He likened intelligence to height: In every population, most people were close to average height, but some people were taller and shorter than average. Just being short wasn’t equivalent to having some kind of a height disease. Likewise, people developed a range of different mental aptitudes. Height, Penrose observed, was the product of both inherited genes and upbringing. He believed the same was true for intelligence. Just as Mendelian variants could cause dwarfism, others might cause severe intellectual developmental disorders. But that was no reason to leap immediately to heredity as an explanation. “That mental deficiency may be to some extent due to criminal parents’ dwelling ‘habitually’ in slums seems to have been overlooked,” Penrose said. He condemned the fatalism of eugenicists, as they declared “there was nothing to be done but to blame heredity and advocate methods of extinction.”

Even if a country did sterilize every feebleminded citizen, Penrose warned, the next generation would have plenty of new cases from environmental causes. “The first consideration in the prevention of mental deficiency is to consider how environmental influences which are held responsible can be modified,” Penrose declared.

The author finds some cases in which children with severe physical disorders, e.g., PKU, have impaired intelligence. From this he reminds us that it is wrong to believe that “our intelligence is fixed by the genes we inherit.” (Is that truly a comforting idea? I would have been as smart as Albert Einstein, for example, but I watched too much TV as a kid and didn’t work hard enough as an adult?)

We would be as tall as the Dutch if only we were smart enough to build a bigger government (being the 2nd largest welfare state, as a percentage of GDP, is not enough to grow tall!):

The economy of the United States, the biggest in the world, has not protected it from a height stagnation. Height experts have argued that the country’s economic inequality is partly to blame. Medical care is so expensive that millions go without insurance and many people don’t get proper medical care. Many American women go without prenatal care during pregnancy, while expectant mothers in the Netherlands get free house calls from nurses.

How much does a person's measured intelligence change over a lifetime, given that environment is supposed to be a huge factor?

Intelligence is also a surprisingly durable trait. On June 1, 1932, the government of Scotland tested almost every eleven-year-old in the country—87,498 all told—with a seventy-one-question exam. The students decoded ciphers, made analogies, did arithmetic. The Scottish Council for Research in Education scored the tests and analyzed the results to get an objective picture of the intelligence of Scottish children. Scotland carried out only one more nationwide exam, in 1947. Over the next couple of decades, the council analyzed the data and published monographs before their work slipped away into oblivion.

Deary, Whalley, and their colleagues moved the 87,498 tests from ledgers onto computers. They then investigated what had become of the test takers. Their ranks included soldiers who died in World War II, along with a bus driver, a tomato grower, a bottle labeler, a manager of a tropical fish shop, a member of an Antarctic expedition, a cardiologist, a restaurant owner, and an assistant in a doll hospital. The researchers decided to track down all the surviving test takers in a single city, Aberdeen. They were slowed down by the misspelled names and erroneous birth dates. Many of the Aberdeen examinees had died by the late 1990s. Others had moved to other parts of the world. And still others were just unreachable. But on June 1, 1998, 101 elderly people assembled at the Aberdeen Music Hall, exactly sixty-six years after they had gathered there as eleven-year-olds to take the original test. Deary had just broken both his arms in a bicycling accident, but he would not miss the historic event. He rode a train 120 miles from Edinburgh to Aberdeen, up to his elbows in plaster, to witness them taking their second test. Back in Edinburgh, Deary and his colleagues scored the tests. Deary pushed a button on his computer to calculate the correlation between their scores as children and as senior citizens. The computer spat back a result of 73 percent. In other words, the people who had gotten relatively low scores in 1932 tended to get relatively low scores in 1998, while the high-scoring children tended to score high in old age.

If you had looked at the score of one of the eleven-year-olds in 1932, you’d have been able to make a pretty good prediction of their score almost seven decades later. Deary’s research prompted other scientists to look for other predictions they could make from childhood intelligence test scores. They do fairly well at predicting how long people stay in school, and how highly they will be rated at work. The US Air Force found that the variation in [general intelligence] among its pilots could predict virtually all the variation in tests of their work performance. While intelligence test scores don’t predict how likely people are to take up smoking, they do predict how likely they are to quit. In a study of one million people in Sweden, scientists found that people with lower intelligence test scores were more likely to get into accidents.
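Reading the book's "73 percent" as a correlation coefficient, r = 0.73, the "pretty good prediction" can be made concrete. This is a minimal sketch, assuming scores at both ages are standardized (z-scores) against the same norms, that the relationship is linear, and that the IQ scale has mean 100 and standard deviation 15:

```latex
\begin{aligned}
r &= 0.73, \qquad r^2 \approx 0.53 \\
\hat{z}_{1998} &= r \, z_{1932} \\
z_{1932} = 2 \;(\text{IQ } 130) \;&\Rightarrow\; \hat{z}_{1998} = 1.46 \;(\text{IQ} \approx 122)
\end{aligned}
```

Under these assumptions, childhood scores account for roughly half of the variance in old-age scores, not 73 percent of it. A bright eleven-year-old is predicted to stay well above average while drifting somewhat back toward the mean.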

IQ is correlated with longevity:

But Deary’s research raises the possibility that the roots of intelligence dig even deeper. When he and his colleagues started examining Scottish test takers in the late 1990s, many had already died. Studying the records of 2,230 of the students, they found that the ones who had died by 1997 had on average a lower test score than the ones who were still alive. About 70 percent of the women who scored in the top quarter were still alive, while only 45 percent of the women in the bottom quarter were. Men had a similar split. Children who scored higher, in other words, tended to live longer. Each extra fifteen IQ points, researchers have since found, translates into a 24 percent drop in the risk of death.
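The 24 percent figure is easy to over-extrapolate. Here is a minimal sketch that assumes something the book does not state: that the risk of death is log-linear in IQ, as in a proportional-hazards model, so the per-15-point effect compounds multiplicatively rather than adding up. The constant and function names are hypothetical:

```python
# Assumption (not from the book): mortality risk is log-linear in IQ,
# so each 15-point step multiplies the hazard by the same factor.
HAZARD_RATIO_PER_15_POINTS = 0.76  # "a 24 percent drop in the risk of death"


def hazard_ratio(iq_gap: float) -> float:
    """Risk of death for the higher scorer relative to the lower scorer."""
    return HAZARD_RATIO_PER_15_POINTS ** (iq_gap / 15.0)


def risk_reduction_percent(iq_gap: float) -> float:
    """Percentage drop in the risk of death implied by an IQ gap."""
    return 100.0 * (1.0 - hazard_ratio(iq_gap))


if __name__ == "__main__":
    for gap in (7.5, 15, 30):
        print(f"{gap:>4} points: {risk_reduction_percent(gap):.1f}% lower risk")
```

Under that assumption, a 30-point gap implies about a 42 percent lower risk, not 48 percent, and a 7.5-point gap about 13 percent.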

The author reports that twins separated at birth have almost identical IQs, despite completely different childhood environments. For most other personal characteristics, a finding like this would lead to the conclusion that the trait was mostly heritable. Instead, Zimmer points out that if heritability is not 100 percent, it would be a mistake to call something “genetic”:

Intelligence is far from blood types. While test scores are unquestionably heritable, their heritability is not 100 percent. It sits instead somewhere near the middle of the range of possibilities. While identical twins often end up with similar test scores, sometimes they don’t. If you get average scores on intelligence tests, it’s entirely possible your children may turn out to be geniuses. And if you’re a genius, you should be smart enough to recognize your children may not follow suit. Intelligence is not a thing to will to your descendants like a crown.

To bolster the claim that intelligence is not heritable, the book cites examples of children whose mothers were exposed to toxic chemicals during pregnancy. It also cites evidence that staying in school for additional years raises IQ (a measure of symbol-processing efficiency).

Here’s an interesting-sounding study:

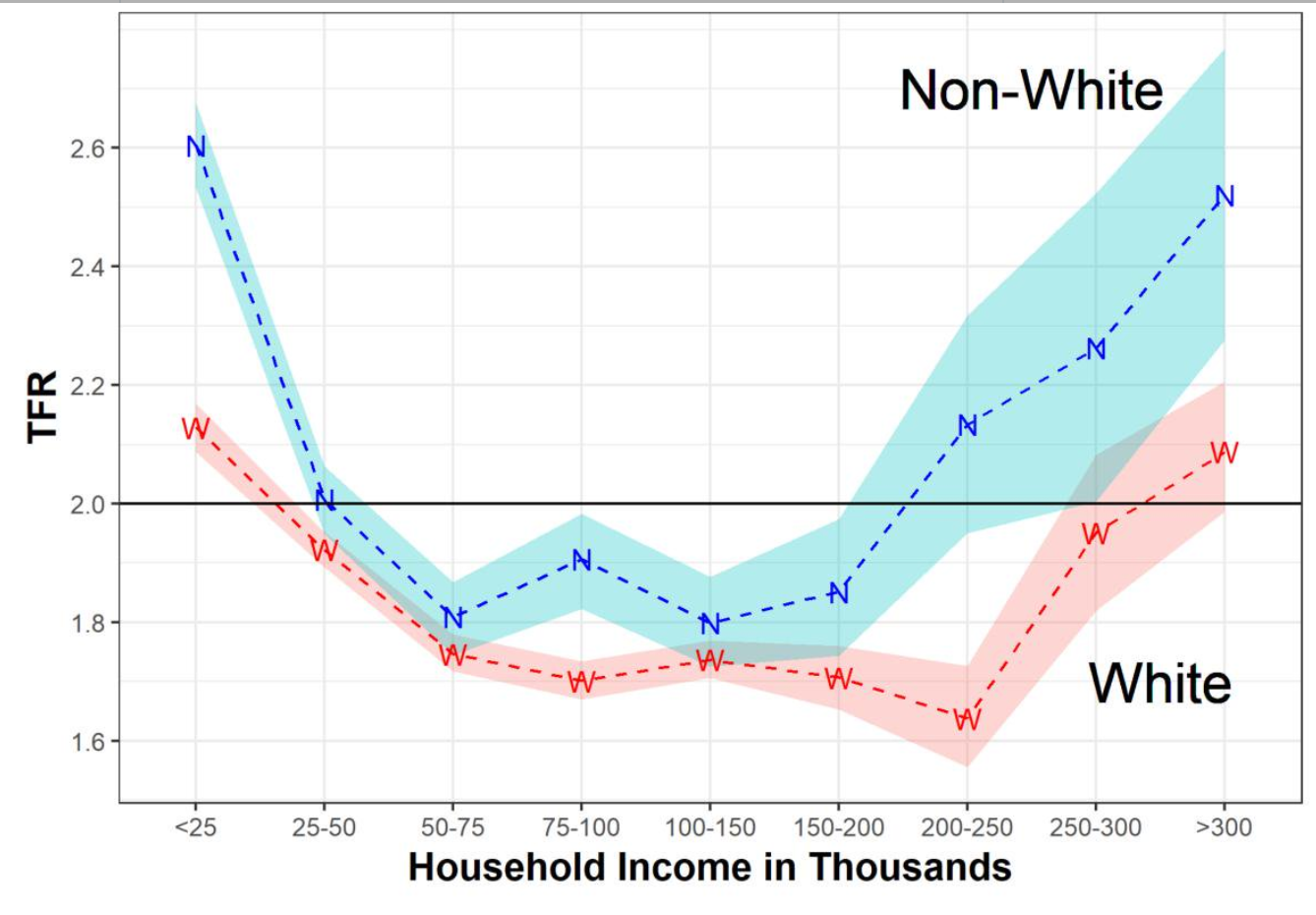

In 2003, Eric Turkheimer of the University of Virginia and his colleagues gave a twist to the standard studies on twins. To calculate the heritability of intelligence, they decided not to just look at the typical middle-class families who were the subject of earlier studies. They looked for twins from poorer families, too. Turkheimer and his colleagues found that the socioeconomic class determined how heritable intelligence was. Among children who grew up in affluent families, the heritability was about 60 percent. But twins from poorer families showed no greater correlation than other siblings. Their heritability was close to zero.

(Do we believe that heritability is zero because identical twins and siblings both have highly correlated IQs? Earlier in the book, the author describes how hospitals and doctors often misclassify twins.)
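The parenthetical question has a simple answer in the classic twin-study shortcut, Falconer's formula, which compares identical (MZ) twins with fraternal (DZ) twins. This is the textbook estimator, not necessarily the more elaborate model Turkheimer's team fit, and the correlation values are hypothetical:

```latex
h^2 \approx 2\,(r_{MZ} - r_{DZ})
\qquad \text{e.g.} \quad r_{MZ} = r_{DZ} = 0.6 \;\Rightarrow\; h^2 \approx 0
```

Estimated heritability comes out near zero whenever identical twins are no more alike than fraternal twins, however high both correlations are, because a shared environment can make both high. The formula also shows why misclassified twins matter: mixing MZ and DZ pairs pulls the two correlations toward each other and biases the estimate toward zero.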

Buried in the next section is the admission that this finding has not been straightforward to replicate (see “Why Most Published Research Findings Are False”):

If you raise corn in uniformly healthy soil, with the same level of abundant sunlight and water, the variation in their height will largely be the product of the variation in their genes. But if you plant them in a bad soil, where they may or may not get enough of some vital nutrient, the environment will be responsible for more of their differences. Turkheimer’s study hints that something similar happens to intelligence. By focusing their research on affluent families—or on countries such as Norway, where people get universal health care—intelligence researchers may end up giving too much credit to heredity. Poverty may be powerful enough to swamp the influence of variants in our DNA. In the years since Turkheimer’s study, some researchers have gotten the same results, although others have not. It’s possible that the effect is milder than once thought. A 2016 study pointed to another possibility, however. It showed that poverty reduced the heritability of intelligence in the United States, but not in Europe. Perhaps Europe just doesn’t impoverish the soil of its children enough to see the effect. Yet there’s another paradox in the relationship between genes and the environment. Over time, genes can mold the environment in which our intelligence develops. In 2010, Robert Plomin led a study on eleven thousand twins from four countries, measuring their heritability at different ages. At age nine, the heritability of intelligence was 42 percent. By age twelve, it had risen to 54 percent. And at age seventeen, it was at 68 percent. In other words, the genetic variants we inherit assert themselves more strongly as we get older. Plomin has argued that this shift happens as people with different variants end up in different environments. A child who has trouble reading due to inherited variants may shy away from books, and not get the benefits that come from reading them.
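The corn analogy rests on heritability being a ratio of variances: genetic variance (V_G) over total variance (V_G + V_E). This is a simplified sketch with hypothetical variance units, and it ignores gene-environment correlation and interaction, which are the very effects Plomin invokes:

```latex
h^2 = \frac{V_G}{V_G + V_E}
\qquad
V_G = 1:\quad V_E = 0.5 \;\Rightarrow\; h^2 \approx 0.67,
\qquad V_E = 3 \;\Rightarrow\; h^2 = 0.25
```

The genetic variance is the same in both fields. Only the environmental variance changes, yet heritability falls from about two-thirds to one-quarter. Heritability describes a population in a particular environment, not a fixed property of the trait.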

Poverty in the cruel U.S. crushes children! But, measured in terms of consumption, an American family on welfare actually lives better than a lot of middle-class European families. The author praises the Europeans with their universal health care systems, but 100 percent of poor American children qualify for Medicaid, a system of unlimited health care spending (currently covering roughly 75 million people).

If unlimited taxpayer-funded Medicaid isn’t sufficient to help poor American children reach their genetic potential, maybe early education will? The book quotes a Head Start planner: “The fundamental theoretical basis of Head Start was the concept
