Intel claims to have a faster and more cost-effective AI system than Nvidia’s H100. It is called “Gaudi”. First, does the name make sense? Antoni Gaudí was famous for doing idiosyncratic creative organic designs. The whole point of Gaudí was that he was the only designer of Gaudí-like buildings. Why would you ever name something that will be mass-produced after this individual outlier? Maybe the name comes from the Israelis from whom Intel acquired the product line (an acquisition that should have been an incredible slam-dunk considering that it was done just before coronapanic set in and a few years before the LLM revolution)?

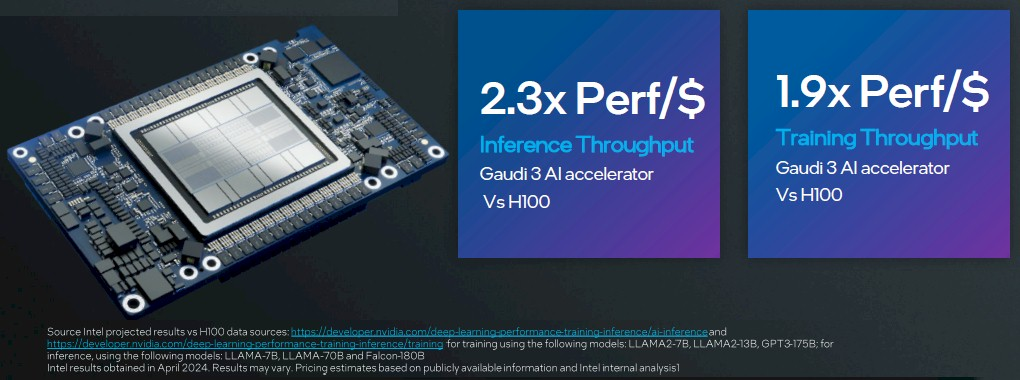

Intel claims that their Gaudi 3-based systems are faster and more efficient per dollar and per watt than Nvidia’s H100. Yet the sales are insignificant (nextplatform):

Intel said last October that it has a $2 billion pipeline for Gaudi accelerator sales, and added in April this year that it expected to do $500 million in sales of Gaudi accelerators in 2024. That’s nothing compared to the $4 billion in GPU sales AMD is expecting this year (which we think is a low-ball number and $5 billion is more likely) or to the $100 billion or more that Nvidia could take down in datacenter compute – just datacenter GPUs, no networking, no DPUs – this year.

Nvidia’s tools are great, no doubt, but if Intel is truly delivering 2x the performance per dollar, shouldn’t that yield a market share of more than 0.5 percent?
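As a rough check on that figure, here is a minimal sketch that turns the Next Platform revenue numbers quoted above into market shares. It assumes, purely for illustration, that these three vendors make up the whole accelerator market (it ignores hyperscaler in-house chips), and the name `market_shares` is made up for this example.

```python
# 2024 revenue expectations in billions of USD, from the Next Platform quote.
# Assumption: these three vendors are treated as the entire market.
revenue_2024 = {
    "Intel Gaudi": 0.5,
    "AMD GPUs": 4.0,
    "Nvidia datacenter GPUs": 100.0,
}


def market_shares(revenue):
    """Return each vendor's fraction of the summed revenue."""
    total = sum(revenue.values())
    return {vendor: amount / total for vendor, amount in revenue.items()}


shares = market_shares(revenue_2024)
for vendor, share in shares.items():
    print(f"{vendor}: {share:.2%}")
```

On these numbers Intel comes out just under 0.5 percent. Using the $5 billion guess for AMD instead of $4 billion barely moves it.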

Here’s an article from April 2024 (IEEE Spectrum)… “Intel’s Gaudi 3 Goes After Nvidia: The company predicts victory over H100 in LLMs”:

One more point of comparison is that Gaudi 3 is made using TSMC’s N5 (sometimes called 5-nanometer) process technology. Intel has basically been a process node behind Nvidia for generations of Gaudi, so it’s been stuck comparing its latest chip to one that was at least one rung higher on the Moore’s Law ladder. With Gaudi 3, that part of the race is narrowing slightly. The new chip uses the same process as H100 and H200.

If the Gaudi chips work as claimed, how is Intel getting beaten so badly in the marketplace? I feel as though I turned around for five minutes and a whole forest of oak trees had been toppled by a wind that nobody remarked on. Intel is now the General Motors circa 2009 of the chip world? Or is the better comparison to a zombie movie where someone returns from a two-week vacation to find that his/her/zir/their home town has been taken over? Speaking of zombies, what happens if zombies take over Taiwan? Humanity will have to make do with existing devices because nobody else can make acceptable chips?

Greenspun needs to download the SDK & do some benchmarks. AI is still a spectator sport. Suspect the Biden administration was particularly inept at policing any of its stimulus packages, hence all these CHIPS Act recipients taking the money & running.

The Gaudi 3 development kit is $125,000. https://autogpt.net/intel-takes-on-nvidia-with-affordable-gaudi-3-ai-solution/

I would rather buy 10 percent of a new piston-powered airplane with this money…

That is 8 Gaudi 3s, and it’s much more affordable compared to the H100.

Performance remains to be seen.

Saw Elon speaking (Lex Fridman podcast) about his new 100,000 GPU data center. He said the biggest cost/engineering/complexity wasn’t the GPUs, but rather wiring all of them together for direct memory access to each other. It’s essentially 100,000 x 100,000 connections, and signal latency was important in keeping everything synchronized.

Yes, hence optical interconnects!

Phil, I saw this post and was excited…but was hoping for more insight from you, given your MIT credentials, background in technology, and large technology network (I assume). Specifically, what is your view on how the AI processor market plays out over the next, say, 5-10 years? Does Nvidia own the entire market and is it able to charge outsized rents for many more years, or do other players (like Intel) take significant share? Do the hyperscalers (Amazon, Microsoft, Meta, Tesla, Oracle etc.) have success in developing their own chips (as they are all doing) and take most of the market from Nvidia? Is software a key differentiator for Nvidia, which will help it maintain its massive market share? What other considerations are there to ponder? Maybe reach out to your network and take their pulse/poll and see if it agrees with your own analysis?

Meh. All the AI hardware hype is just temporary. Lots of GPUs+RAM is only needed while training an AI, which only takes a few months at most. Once trained, it takes a tiny fraction of resources to update and maintain. In a few generations the models will all converge, be more efficient, and require fewer updates. The huge AI data centers will mostly be idle, while a local copy of the trained AI will run on your iPhone 22.

Okay, thanks—that is very interesting indeed! So should my takeaway be that Nvidia’s sales and monopoly-like margins remain robust for a few years and then revert back to pre-AI levels/trendlines?

I’m sorry to disappoint, but I failed to drop everything and join the AI gold rush. I still can’t figure out why the gold is there given how few humans are paid to generate text or photos. I know a few things, e.g., that programmers are lazy and don’t want to learn anything new so they keep using https://en.wikipedia.org/wiki/CUDA

I also know that NVIDIA chips have tremendous memory bandwidth, which prevents a competitor from making a truly cheap substitute (see https://acecloud.ai/resources/blog/why-gpu-memory-matters-more-than-you-think/ ).

https://www.nvidia.com/en-us/data-center/h100/

says it has more than 3 TB/s of memory bandwidth. That might be 30X what a good Intel desktop CPU can do.
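A back-of-the-envelope check on that 30X figure, as a sketch only: the desktop side assumes a dual-channel DDR5-5600 configuration with 8 bytes per transfer per channel, which is an assumption for illustration and not something taken from the Nvidia page. The helper name `dram_bandwidth_gb_s` is likewise made up here.

```python
def dram_bandwidth_gb_s(channels, megatransfers_per_s, bytes_per_transfer=8):
    """Theoretical peak DRAM bandwidth in GB/s (1 GB = 1e9 bytes)."""
    return channels * megatransfers_per_s * 1e6 * bytes_per_transfer / 1e9


# Lower bound from the Nvidia page: "more than 3 TB/s".
h100_gb_s = 3000.0

# Assumed desktop setup: two DDR5-5600 channels, 64 bits (8 bytes) each.
desktop_gb_s = dram_bandwidth_gb_s(channels=2, megatransfers_per_s=5600)

ratio = h100_gb_s / desktop_gb_s
print(f"Desktop peak: {desktop_gb_s:.1f} GB/s, H100 advantage: {ratio:.1f}x")
```

Under those assumptions the ratio lands a little above 30, so the estimate holds up as an order of magnitude. Real sustained bandwidth on both sides will be lower than the theoretical peak.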

Okay, thanks for the additional information! So, if I think about your comments and those of Anon (Meh) above, it sounds like you are a bit more optimistic that Nvidia’s software gives them some staying power (moat?), but is that just a temporary finger in the dike before it bursts and their business then reverts to the trendlines it was on pre-AI? And what about the memory bandwidth advantage you mention–surely Google, Microsoft, Meta, Amazon and others can figure that out, no? And then have TSMC make their chips using its best-in-world manufacturing? Any other considerations?

Forgive me if this is common knowledge, but I was under the impression that many already written AI applications used the Nvidia proprietary CUDA libraries and would need to be rewritten for the higher level libraries that can run on Intel. Maybe they are trying to build market share by providing a lower GPU price point for new applications going forward.

Demetri: you’re right and therefore for someone who needs just a few GPUs it wouldn’t ever make sense to move away from Nvidia. But for someone who is buying 100,000 one would think that it would make sense to retarget to Intel’s Gaudi if it were actually cheaper.

It’s probably called Gaudi because all it does is make weird melted-looking art. (And something else is probably named Picasso.)

I know zero about this topic, but I guess the market isn’t buying this Intel claim. The stock is down 38%(!) in the last week – written 8/7/24.

I know Intel missed the boat on mobile and AI(?), but did everyone worldwide really stop buying Windows laptops?

For someone who needs only a few GPUs, Nvidia 4090 would do just fine. It is a great cheap card with unfortunately tiny memory. This suggests to me that Gaudi is undercutting the margin of H100 but still has a fat margin. The companies that want 100,000s just build their own and do not pay Intel’s margin.