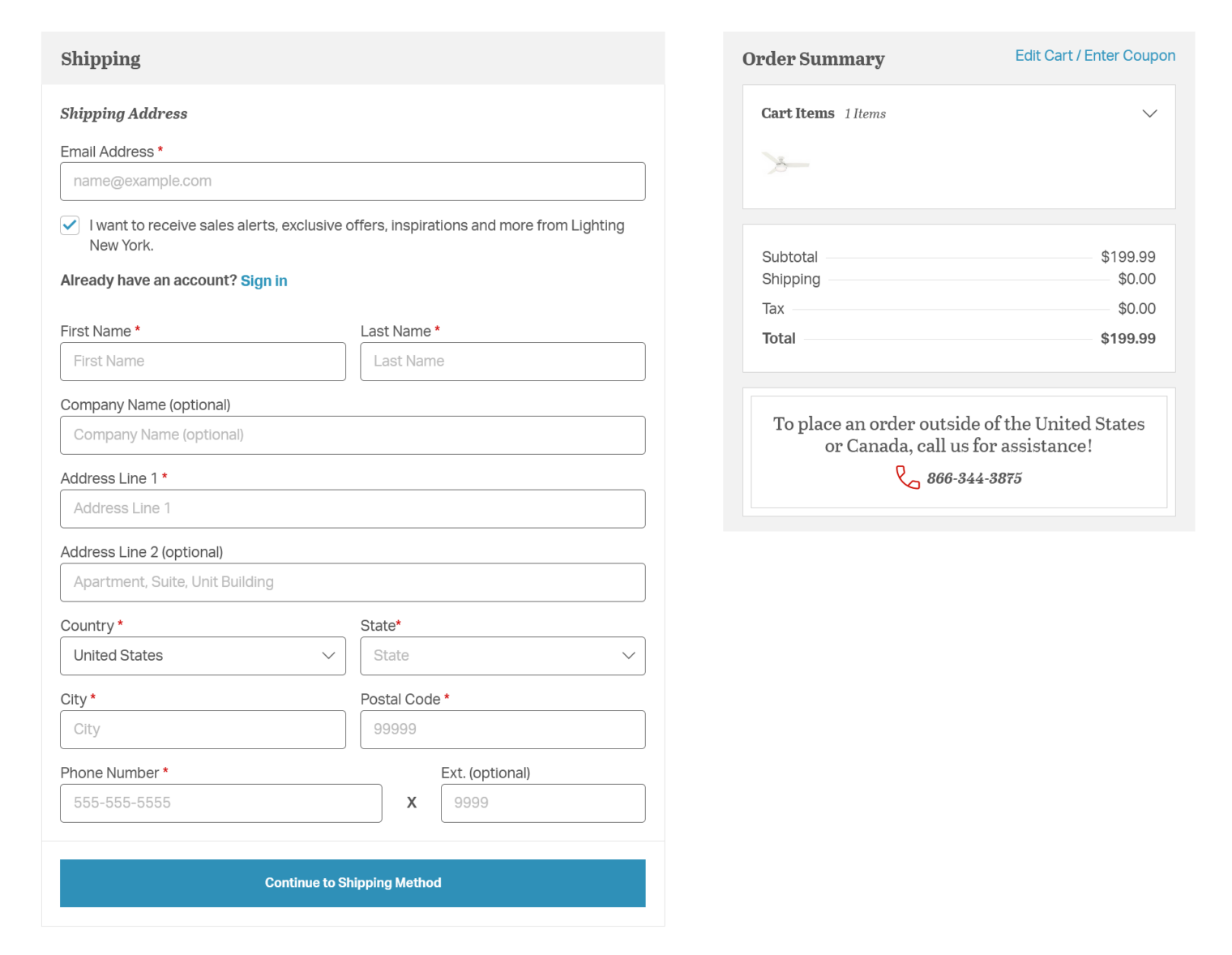

We are informed that AI is going to transform our daily lives. Web browsers are made by companies that are supposedly at the forefront of AI/LLM research and development. Why isn’t a browser smart enough to fill out the entire form above? It has seen fields with similar labels filled in hundreds or thousands of times. Why doesn’t the browser fill it out automatically and then invite the user to edit or choose “fill it out with my office address instead”?

Google Chrome, at least, will suggest values for individual fields. Why won’t it take the next step? Even the least competent human assistant should be able to fill in the above form on behalf of a boss. Why can’t AIs in which billions of dollars have been invested do it?

I think AI could fill it out for us, but people wouldn’t like such an instant display of data-collection overreach.

In my personal experiments, it’s been pretty good at lining up information with those fields. It’s surprising there isn’t a big-company-led project to do it.
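For the curious, here is a minimal sketch of what “lining up information with those fields” could look like: propose a value for every label on the form at once, then let the user edit. It assumes a stored profile dictionary, a small hand-written synonym table, and plain fuzzy string matching from Python’s standard `difflib`; `PROFILE`, `SYNONYMS`, `fill_form`, and the 0.6 cutoff are all hypothetical, and this is not how any particular browser implements autofill.

```python
from __future__ import annotations

import difflib

# Hypothetical saved profile: normalized label -> value.
PROFILE = {
    "first name": "Jane",
    "last name": "Doe",
    "street address": "123 Main St",
    "city": "Springfield",
    "zip code": "01101",
    "email": "jane@example.com",
}

# Hypothetical synonyms that plain string similarity would miss.
SYNONYMS = {
    "postal code": "zip code",
    "e-mail address": "email",
    "address line 1": "street address",
}


def normalize(label: str) -> str:
    """Lowercase, drop trailing '*' or ':' markers, and collapse whitespace."""
    return " ".join(label.lower().strip().rstrip("*:").split())


def fill_form(labels: list[str], profile: dict[str, str],
              cutoff: float = 0.6) -> dict[str, str | None]:
    """Propose a value for every label at once; None means 'leave blank'."""
    proposals: dict[str, str | None] = {}
    for label in labels:
        key = normalize(label)
        key = SYNONYMS.get(key, key)
        # Closest stored label by difflib's similarity ratio, if above cutoff.
        match = difflib.get_close_matches(key, list(profile), n=1, cutoff=cutoff)
        proposals[label] = profile[match[0]] if match else None
    return proposals


form = ["First Name *", "Last Name", "Postal Code:", "City", "Company"]
print(fill_form(form, PROFILE))
```

Offering “my office address instead” would amount to calling the same function with a second profile dictionary.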

Also, why do I have to enter the code for my avocado? I’ll believe in AI when I put produce on the scale and it picks the correct veggie or fruit.

If the site supports Google Pay (or PayPal), this can all be obviated with a single click (if the vendor site implements it properly). No need to enter anything. Some browser extensions, such as 1Password, can do this kind of entry, but as browser security steadily increases, they tend not to work as well.

An F-35 nozzle is more effective than a $200 ceiling fan.

There are sites that disable autofill. Some go a step further and force you to re-enter the account number. And if that’s not enough, they disable copy-and-paste!

I understand the goal is to safeguard clueless users, but in the above example, how does this protect them?

George: I don’t think it is clever JavaScript that is preventing purportedly clever AI. I have been on plenty of forms where Chrome offers to fill out one field, but not the entire form.

Philip, do you let Google track you? If yes, do you hold Google stock? (An attempt at a joke.) Most users do not officially let Google track them. Also, with Google being the default toolset used by educational institutions from elementary school through graduate school, this could be a big legal and political issue.

I do let Google track me. I assume that I’m not smart enough to achieve practical privacy on the Internet (I would have to close out my Facebook, Twitter, and Google accounts and then do everything through a VPN for starters!).

I agree that it is safer to be tracked by everyone than by some hidden entities. But are VPNs and local encryption safe if the local hardware/software or the Tor hardware/software is compromised?

But it is a matter of principle. Ideally, someone could sue if personal data were tracked without permission, however hard that would be to accomplish. Obviously, Google and Big Brother can track you without your permission. Typing this in Chrome, lol.

Recursive Bayesian filters ≠ AI
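For readers who haven’t met the term: a recursive Bayesian filter keeps a belief about some hidden quantity and updates it with each new measurement, carrying forward only the previous estimate. A minimal sketch, assuming the simplest Gaussian case (a scalar Kalman filter tracking a constant value); the function name, variances, and readings are made up for illustration.

```python
def kalman_1d(measurements, meas_var, process_var=0.0,
              init_mean=0.0, init_var=1e6):
    """Scalar Kalman filter, the Gaussian special case of a recursive Bayesian filter.

    Only the previous (mean, variance) pair is kept; each new measurement
    updates it. Returns the posterior mean after every measurement.
    """
    mean, var = init_mean, init_var  # very wide prior: "we know almost nothing"
    estimates = []
    for z in measurements:
        # Predict: the hidden value is modeled as constant plus random drift
        # of variance process_var, so only the uncertainty grows.
        var += process_var
        # Update: Bayes' rule for a Gaussian prior and Gaussian likelihood
        # reduces to a variance-weighted average of estimate and measurement.
        gain = var / (var + meas_var)
        mean += gain * (z - mean)
        var *= 1.0 - gain
        estimates.append(mean)
    return estimates


readings = [10.2, 9.8, 10.1, 9.9, 10.0]  # made-up noisy sensor readings
print(kalman_1d(readings, meas_var=0.04))
```

With `process_var=0`, the final estimate converges to essentially the average of the readings, which is what a batch Bayesian calculation would give.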

Probably all of us on this forum think of HAL when we imagine AI, but current technology is extremely far from that. ChatGPT and its ilk do not reason. They are text auto-complete algorithms using a vast data set, clever mathematics, and some hard-coded heuristic hints. I have yet to see one of these systems that can learn a set of rules and then apply them to a new situation.
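A toy illustration of the “auto-complete plus randomness” idea: a word-bigram model that counts which word follows which, then samples the next word with a temperature knob (low temperature almost always picks the most frequent continuation; higher temperature spreads the choice). This is a deliberately crude sketch with made-up training text and hypothetical function names; production LLMs use neural networks over subword tokens rather than bigram counts, though they too sample from a next-token distribution, often with a temperature setting.

```python
import math
import random
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows


def next_word(follows, word, temperature=1.0, rng=random):
    """Sample a continuation; temperature must be > 0."""
    counts = follows.get(word)  # .get() does not create an empty entry
    if not counts:
        return None
    candidates = list(counts)
    # Scale log-counts by 1/temperature, then exponentiate into weights
    # (subtracting the max keeps exp() from overflowing).
    logits = [math.log(counts[w]) / temperature for w in candidates]
    top = max(logits)
    weights = [math.exp(x - top) for x in logits]
    return rng.choices(candidates, weights=weights, k=1)[0]


def complete(follows, prompt, n_words=5, temperature=1.0, seed=None):
    """Extend the prompt one sampled word at a time."""
    rng = random.Random(seed)
    out = prompt.lower().split()
    for _ in range(n_words):
        w = next_word(follows, out[-1], temperature, rng)
        if w is None:
            break
        out.append(w)
    return " ".join(out)


model = train_bigrams("please enter your name . please enter your address . "
                      "please enter your email . please enter your name .")
print(complete(model, "please", n_words=3, temperature=0.01, seed=0))
print(complete(model, "please", n_words=3, temperature=2.0, seed=1))
```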

Spot on! And you left out “randomness”, which all those “AI” algorithms use to give the end user the impression of intelligence; it is also why “AI” sometimes spits out wrong and misleading answers.

The only clever part of today’s “AI” technology is that the answer it provides is well structured and combines multiple searches into one. For example, I can use non-“AI” search engines to execute multiple searches and construct my final answer from them so that it looks just like the final answer we get from “AI”. “AI” is doing this for me, but *without* giving me the opportunity to verify the adequacy, correctness, and source of the data. We are told to trust “AI”’s result!

This is why I keep telling anyone who talks to me about “AI” that the “I” in “AI” is fake and completely misleading.

I worked on “AI” back in the early ’90s, and before that I was writing search-engine code that searched medical and research publications from sources such as ERIC, LISA, and JAMA, to name a few. We got the printed content in text format, indexed it, and burned the data onto CD-ROMs (with the index and the data encrypted). We then shipped the CDs (some weekly, some monthly, some quarterly, etc.) with our software to subscribers, including libraries.

If you ever used search engines back in the late ’80s or early ’90s at a library or in your school’s research lab, chances are you were using SilverPlatter [1], and thus my code. 🙂

[1] https://en.wikipedia.org/wiki/SilverPlatter

We can’t know how HAL was programmed, but neurologists have been heard to explain human brain function in terms of auto-complete. Scale makes a “stochastic parrot” into something qualitatively different, even though it doesn’t contain a specifically engineered reasoning module.

df, this is the thesis of Karl Marx and his dialectical materialism: quantity becomes quality. I believe it was based on popular-science ideas of the 19th century that did not pan out, and on Hegel’s appropriations from Far Eastern philosophy, which, as philosophy, is neither provable nor falsifiable. We have heard a lot about it but have never seen it, just like true communism.

Are you sure that having a non-material soul is not a necessary (though not sufficient) requirement for any intelligent reasoning that presumes free, non-random choice at its root?

In the computer business, the qualitative effect of scale is evident, since there have been hardly any fundamental innovations since the 1950s. If a smartphone is qualitatively different from an IBM 704 (and I really think it is), it’s mostly about the many orders of magnitude of improvement in switch and storage size/density/power consumption; the UI technology has moved on a bit, too.

Regarding free will, some of the betting (not by Pascal) is that our perception of it represents ex post facto rationalisation of lower-level actions; for instance, it appears that motor nerve signals *precede* the associated higher-level neural activity. Maybe free will exists in the prior injection of rules and priorities by higher-level brain functions to condition lower-level responses to environmental and other neural stimuli. Some people like to turn to quantum indeterminacy when faced with an apparently mechanical model of a brain acting in response to external stimuli, but that doesn’t work for us Many Worlds adherents: scale is a much better way to go.

df, the increase in computer power has so far not resulted in qualitative changes. New ML systems do not do anything new; they accomplish approximately what domain-specific chatbots and expert systems from the 1980s could do, just for a larger audience and in more domains. Military analogy: one tank is sufficient to storm one village; a thousand tanks are sufficient for a blitzkrieg. Same stuff, just on a larger scale.

UI technology took a terrible hit when applications moved to the web browser and HTML; it became less useful. I too switched to the web to increase my hourly rate nearly 30 years ago, so I can attest to that. The same goes for new UI libraries: they are mostly there to generate new revenue for software companies or to run on GPUs rather than CPUs, with reduced functionality and dorkier graphics.

On your philosophical note: Bose-Einstein condensate with spooky action at a distance to you too. 🙂 One day I’ll try to understand that.