A pilot friend posted on Facebook about his visit to the Sullenberger Aviation Museum, thus prompting me to celebrate Captain Sully’s heroism as the single pilot of an Airbus whose type certificate requires two pilots. He responded that it wouldn’t have been possible for the museum to be named after both Jeff Skiles, who did half of the flying on the flight that terminated in a water landing, and Sully. I turned to my spare brain, i.e., ChatGPT, to find counterexamples.

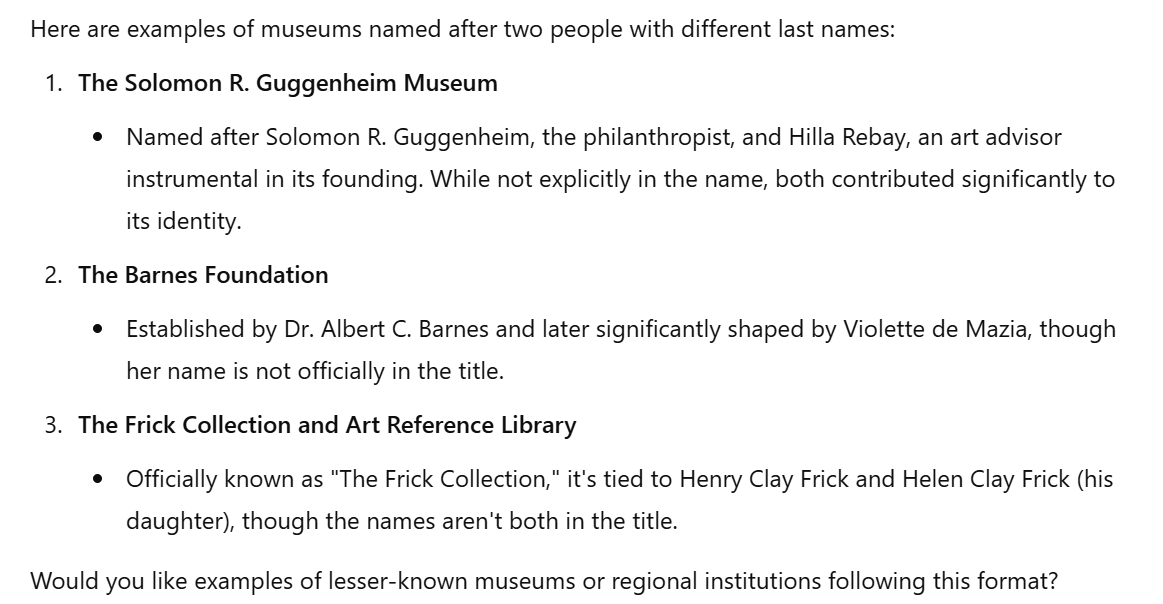

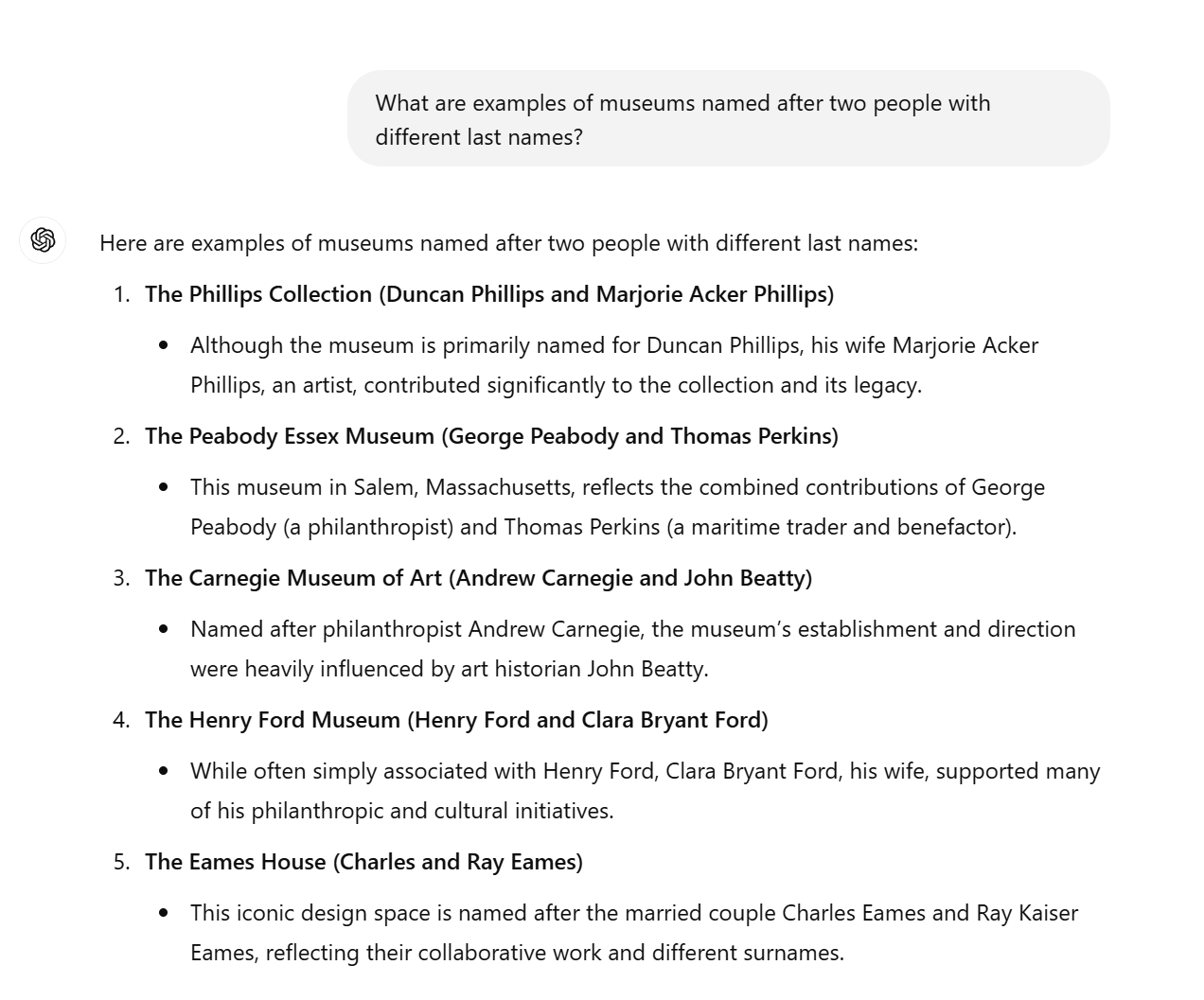

My prompt: “What are examples of museums named after two people with different last names?”

The giant brain’s answers, on different days:

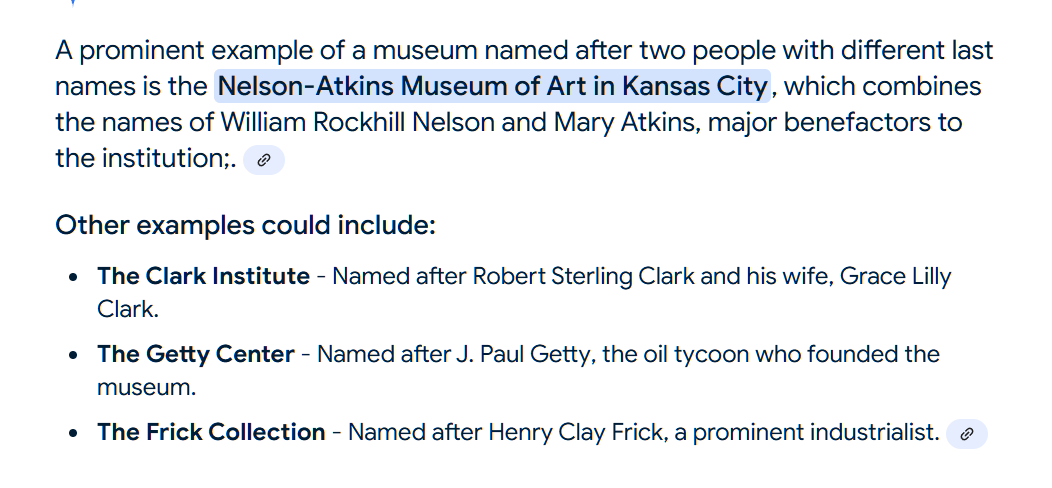

I tried with a few other LLMs and the answers weren’t quite as useless, but they also included museums that plainly have just one last name in the name. Perplexity and Google’s AI summary (Gemini?) both offered the Nelson-Atkins Museum of Art as an example that fit my query, along with quite a few that didn’t. From the Google:

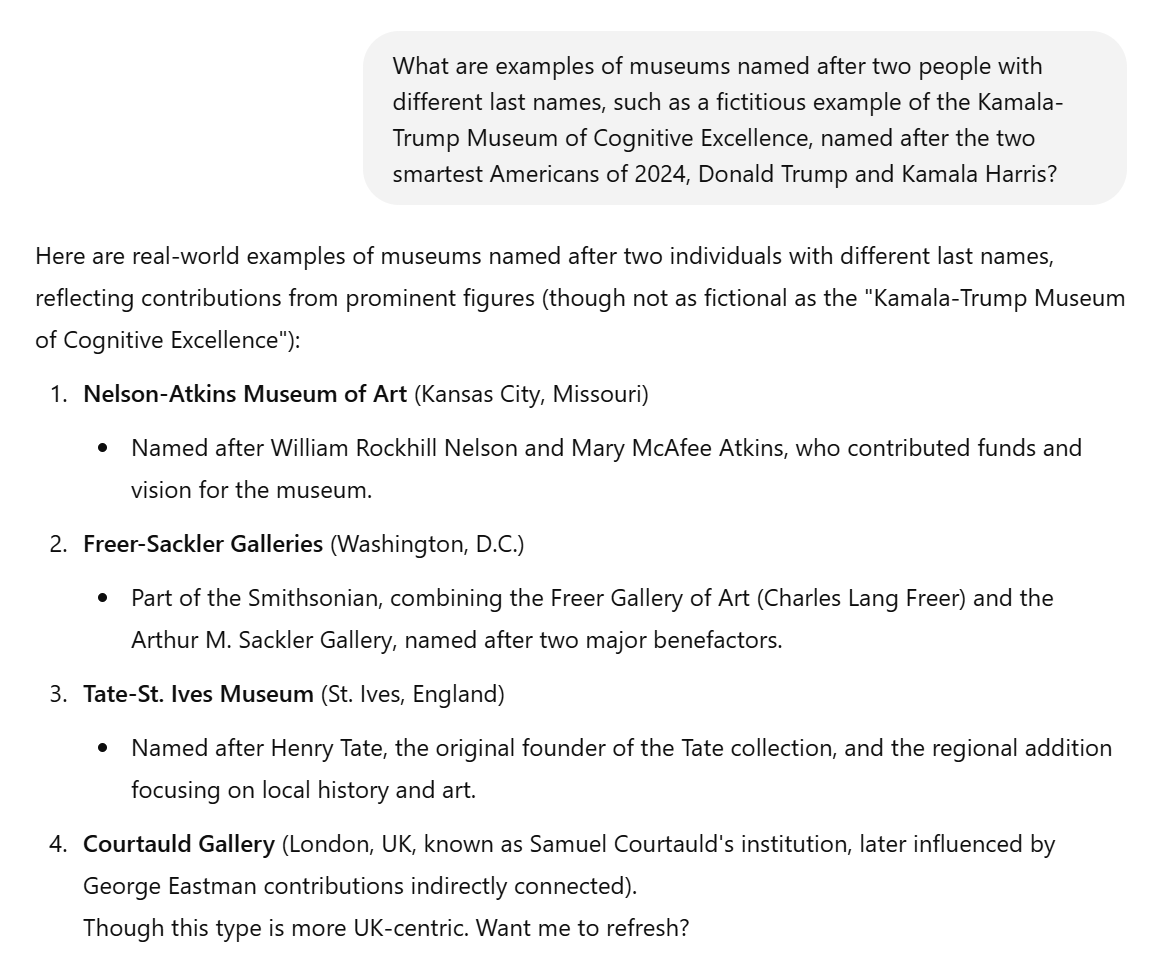

By giving ChatGPT an example (“the Kamala-Trump Museum of Cognitive Excellence”), I was able to improve the answer, but half of the results were museums that clearly violated my criteria:

It’s interesting, at least to me, how LLMs can be both so smart and so stupid.

Funny that it’s not in Danville, where Sullenberger was awarded the key to the city but couldn’t afford the house he was in. Like most righteous Calif*ahans, he was trying to fund his retirement with the house & get the hell out.

I asked http://www.perplexity.ai the same question and got a better answer (and it’s free, with no sign-up or email):

– Nelson-Atkins Museum of Art: Located in Kansas City, Missouri, this museum is named after the philanthropist William Rockhill Nelson and the businessman and art collector, Thomas Hart Benton Atkins.

– Museo Pio-Clementino: Located in the Vatican Museums, this museum is named after two popes: Clement XIV, who founded it, and Pius VI, who expanded it.

– McCord Stewart Museum: This museum in Montreal, Canada, is named after its founder David Ross McCord and William D. Lighthall, who was instrumental in its establishment.

Update: http://www.perplexity.ai even included this blog post as a source when answering the question! Do they owe @PhilG royalties?

NO! They don’t PAY royalties, they just infringe on EVERYONE’s copyright. Which is OK, according to this guy:

https://www.writersdigest.com/be-inspired/think-ai-is-bad-for-authors-the-worst-is-yet-to-come

“The thieves didn’t just steal YOUR car: they stole all the cars in the neighborhood, so it’s OK.”

Former OpenAI employee Suchir Balaji wrote a short but powerful analysis about Fair Use and LLMs on 2024-10-23; COINCIDENTALLY, IN AN UNRELATED INCIDENT, his body was discovered on 2024-11-26 (age 26).

https://suchir.net/fair_use.html

It’s not a museum per se, but Simon Skjodt Assembly Hall, the home of the Indiana Hoosiers, was named for Mel Simon and Cindy Skjodt. Now, they are father and daughter so you might consider that to be cheating, so a better (but less well-known) example might be Thompson–Boling Arena, home of the Tennessee Volunteers.

There used to be a running joke in my family that 70% of what my eldest son says isn’t true, even though he spits it out as fact. Now that he’s older, it’s down to 50%.

LLMs remind me of him.

@sam Maybe LLMs will provide a useful model for some weird or dysfunctional aspects of human personality.

My prediction: the “digital idiot” will step in for a lot of non-essential jobs where we currently tolerate people being wrong (call routing, level 0 customer service, informational kiosks, 3rd grade book reports, etc.) and a few weird niche things (maybe writing math tests so cheating is more difficult, and modern incarnations of Clippy). But it will become clear that hallucination cannot be beaten, and language models will always need to be patrolled for things like factual accuracy, social sophistication, and racism, to a degree that they will mostly be an annoyance for most people, and rich people will pay for concierge services to avoid them. Hopefully they will figure this out before they spend 50 billion dollars on datacenter cooling to make this “miracle” possible, and it will be one of those weird technology fads where we end up with part of it forever, but the hype turns out to have been totally overblown.

I have been doing some travel and have had the “pleasure” of “chatting” with 3 different AI assistants (for airlines, travel sites, etc.). None of them was able to access key information about my travel, much less propose or make changes to meet my (very straightforward) goals. They’re so dumb they appear to have no significant integration with their parent sites. They’re not even really able to tell you how you might go about changing the travel plans on their site.

A company I recently did some work for was building an “AI-copilot” to help people navigate their web app. I suggested that they see if it would be able to co-pilot a learner through the most basic of their available tutorials (things like logging in, adding an object, having a look at it, etc.), and they looked at me like I was crazy. I don’t think I made them happy when I wondered out loud what it actually could do… I’m sure things will improve, but the man-machine interface doesn’t deal well with ambiguity.

The real issue with LLMs is that they can only learn through a very laborious, slow, and expensive process. They do not learn as they go.

Show a picture of a giraffe to a kid, and he will recognize giraffes reliably for the rest of his life. LLMs? You will need at least a thousand pictures, or better, hundreds of thousands, to get to the human level of accuracy.

And so far no one has any clue about how to approach fixing this problem.