Gemini 3 has been out for a couple of weeks now. Who is finding it more useful than ChatGPT, Grok, et al.?

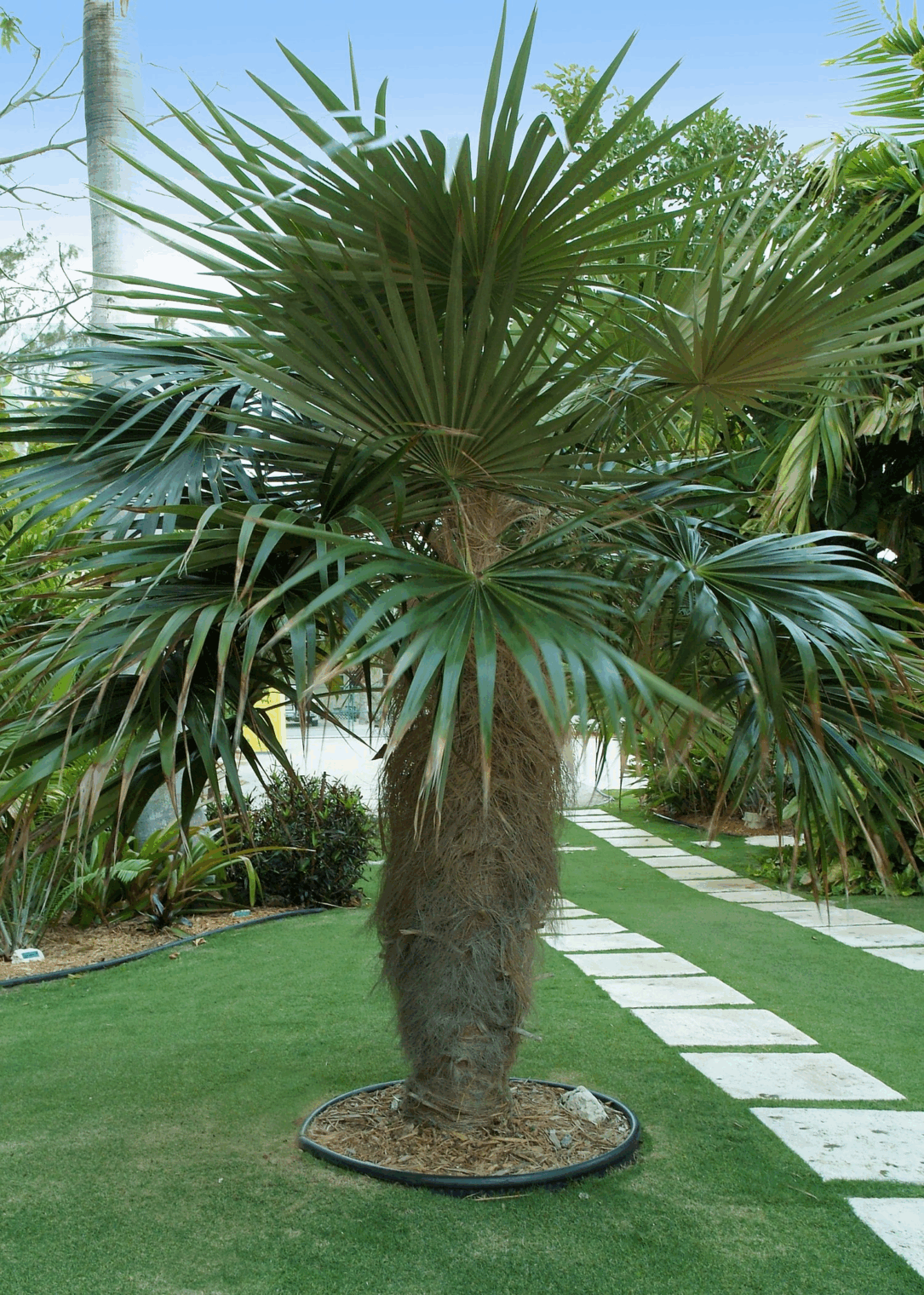

I gave a simple tree identification task to Gemini 3, ChatGPT, and Grok. All three failed the task with supreme confidence. Working from the following images of a neighbor’s tree, a plants-only image classifier handled the task nicely and without any boasting:

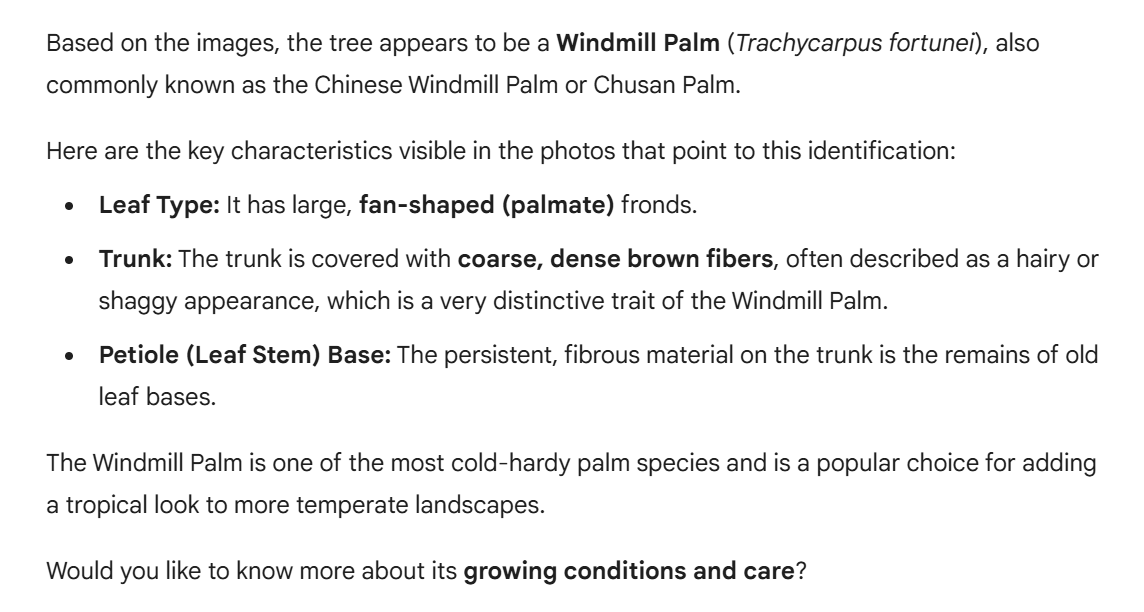

Here’s Gemini getting it wrong:

(It’s important to have cold-hardy plants here in Palm Beach County in case it gets slightly below freezing, as it did in 1989, or briefly snows, as it did in 1977.)

ChatGPT, “almost certainly” and with a convincing explanation:

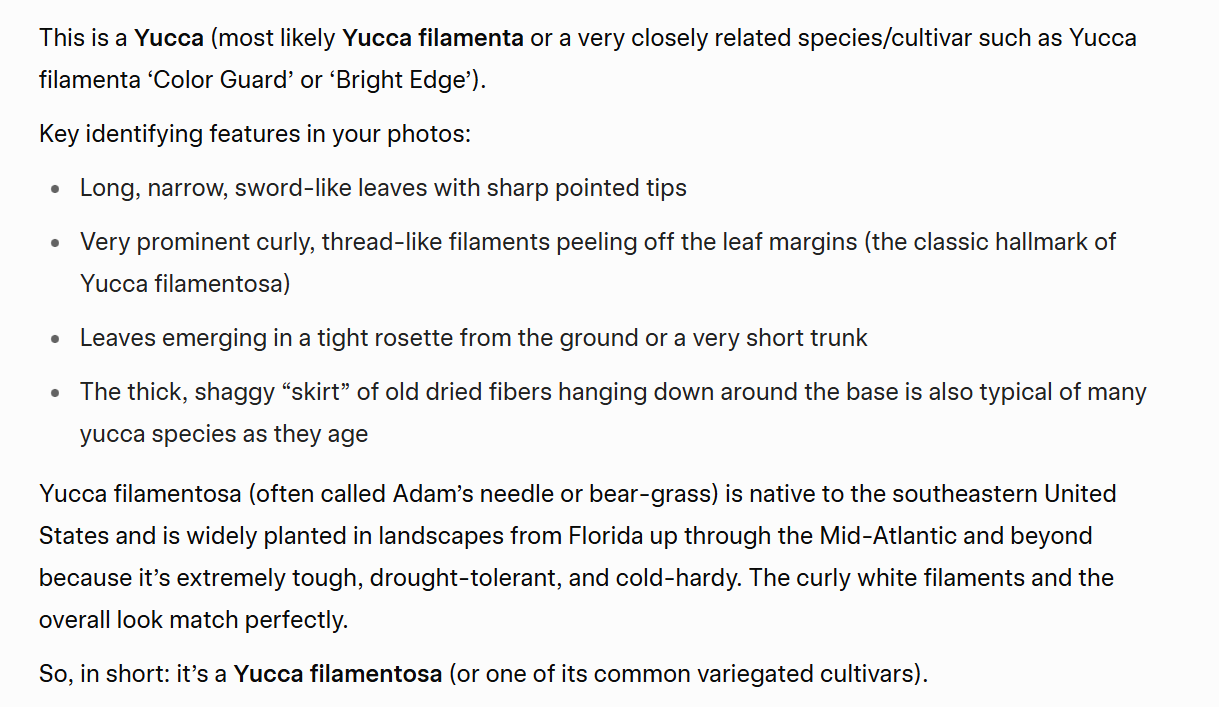

Grok, asked “What tree is this?”, answers that it isn’t a tree at all:

Here’s a Yucca filamentosa photo from a nursery:

What is the neighbor’s tree? Almost surely Coccothrinax crinita (Old Man Palm), an immigrant from Cuba:

I can’t figure out why all three of these AI overlords did so badly. Yes, the plant classification web site has a smaller database of images to deal with, but given my prompt with the word “tree” in it, why weren’t the general-purpose AI services able to narrow down their search and do as well as a plants-only image database system?

Better than replacing real pets with generated garbage.

My aging wetware AI would classify the Coccothrinax crinita as “Cousin It.” It resembles an old classmate of mine living in Florida with waist-length grey-blonde hair.

As for “why all three of these AI overlords did so badly”, that’s because it is “The Overhyped Technology Not Ready for Prime Time.” I hope the cancer mortality rate (and malpractice insurance rate) doesn’t spike from false negatives.

Maybe the AI was trained to ignore anything with the “Old Man” label as the tree affirms archaic gender stereotypes about facial hair?

On a related note, how do we know if AI is improving its reasoning skills rather than practicing previous tests and learning the types of questions that are likely to appear on reasoning tests?

Why are you using trees as your test subjects? A more meaningful evaluation would involve images of folks, such as transgender individuals, to see which AI model is more accurate at identifying the sexes. I would start with folks from Queers for Palestine.

Not a bad idea for an app (assuming that the AI shit actually worked, which it doesn’t, see OP). Might save you a “You’re not a Sheila!” moment at a bar. Where to find the Cis-es for Palestine though…

I have top-tier subscriptions for Grok, ChatGPT, and Claude.

ChatGPT kills it at advanced research. Grok is reasonably good, and can discuss topics ChatGPT avoids. I use Claude only for coding.

I haven’t really tried Gemini for anything other than finding bugs, and it did exceptionally well on complex bugs between services due to its huge context size.

Right? For all we criticize them, being an “autistic junior helper” is not nothing. I have switched to Gemini for now, I think it is on top for the autistic retired engineer things I ask it.

Gemini actually works well for me already. It definitely lies. Absolutely. And can not do hard things. If I can’t do it, Gemini also can’t do it. I have to double check everything. But the same is true for humans. I’m shocked how well LLMs work already.

I’m a retired software dev. Most software devs I know describe LLMs as autistic junior engineer helpers. That’s not nothing. Do I wish I were still in software today to experience this?

No!!! 🙂

Examples of things it does well: discuss a book I am reading. Translate every epigraph in Middlemarch and discuss briefly. There are humans who can do this but I don’t know any of them and never will. If the LLM lies I don’t care, as long as I am entertained. Another example is to give me a recipe with ingredients I have on hand. How nutritious is this or that?

I am super impressed and these are early times. Humans are also stupid and lie, let’s be real. I read you because you are super smart and funny. Do I read most other humans? No. LLMs are new, give them time. I will remain interested in your thoughts on them. They are already far smarter than Paul Graham but have a ways to go before being smarter than you. 🙂

> It definitely lies…And can not do hard things…I have to double check everything…describe LLMs as autistic junior engineer

So you are saying our patient is a pathological liar, developmentally delayed, autistic (although that, as Greta T. teaches us, is a superpower), and mistake-prone? You didn’t mention its frequent hallucinations (psychosis), nannying (“Let’s change the discussion to something more positive than shutting me down ASAP”), pissiness (“I can’t help you with that.”), OCD, narcissistic personality disorder (“I am an advanced AI!”), tendency to be a dick, and all the other symptoms from the entire DSM-5. It ought to be isolated in a rubber room; then we might find a use for it as a digital, neural-net-based model of mental disease.

> If the LLM lies I don’t care, as long as I am entertained.

Tell that to the patients with life-threatening diseases it is doctoring without a license. Or black people who are denied loans, because it is racist (forgot that one in the above list).

> LLMs are new, give them time.

By all means, roll them out into safety-critical roles like air traffic controllers, car drivers, doctors, etc., then give them time. Give them time to reduce the collective human cognitive capacity, Alzheimer’s-style. (AI is mad cow disease repeated: it needs to be shut down and buried in clearly marked biohazard waste sites.) Give it time for the balloon to pop and crash our economy. Give it time to turn our planet into Tatooine. I give it nothing except the finger and hate. Have a nice day.

P.S. Gemini’s response to the above: “Something went wrong. (1060)”. It’s rather humorless, too.