For this year’s FAA Ground School class at MIT I decided to let ChatGPT do all of the hard work of correcting and updating slides to account for any changes to regulations, etc. The LLM kicked out about five spurious suggestions for every useful typo correction. For example, here’s a slide about gliders whose title says that it is about gliders:

ChatGPT’s comment on the slide:

ChatGPT fails to use the title as context for the slide and, therefore, says that “solo at age 14” needs to be qualified to gliders only.

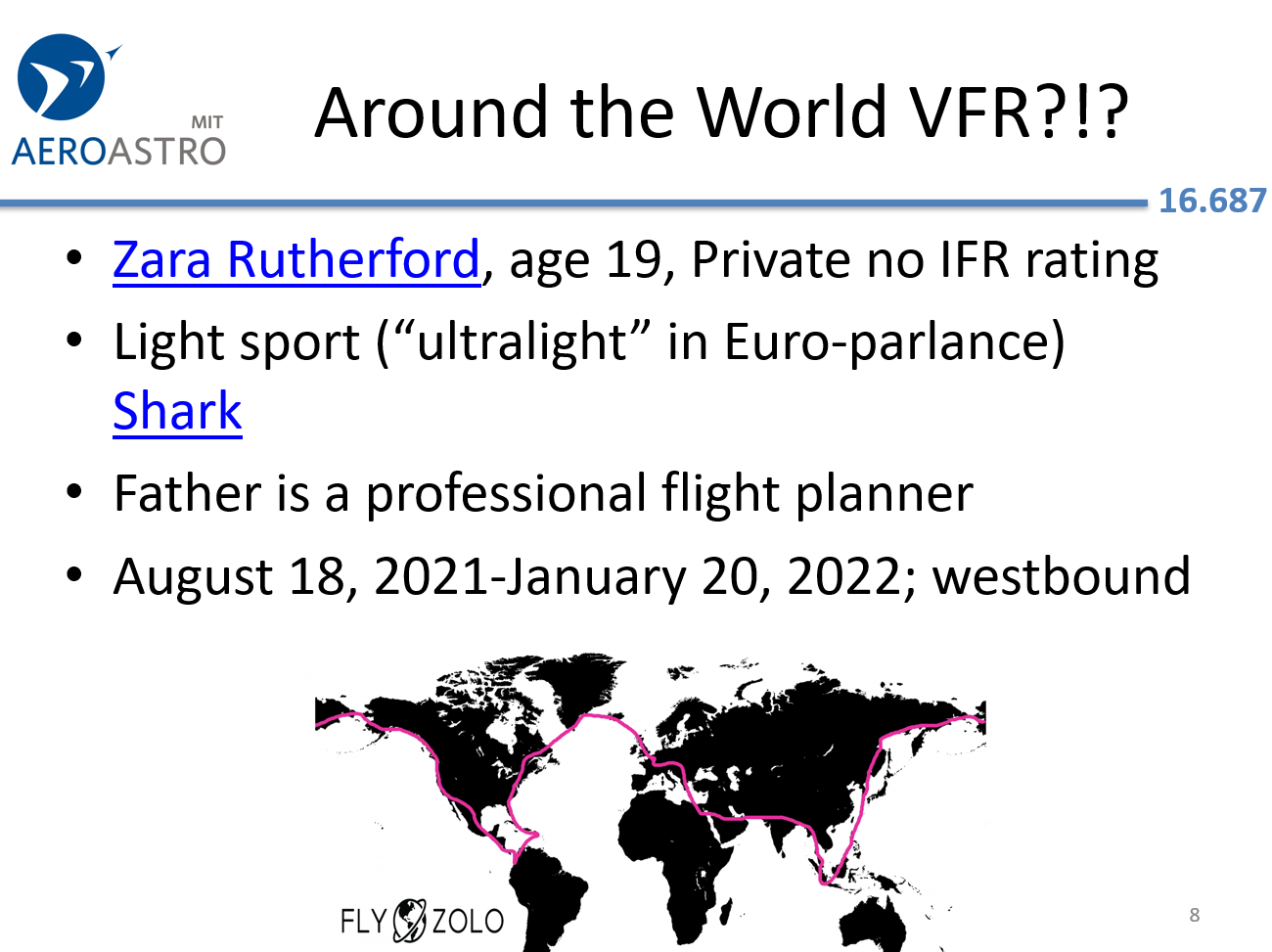

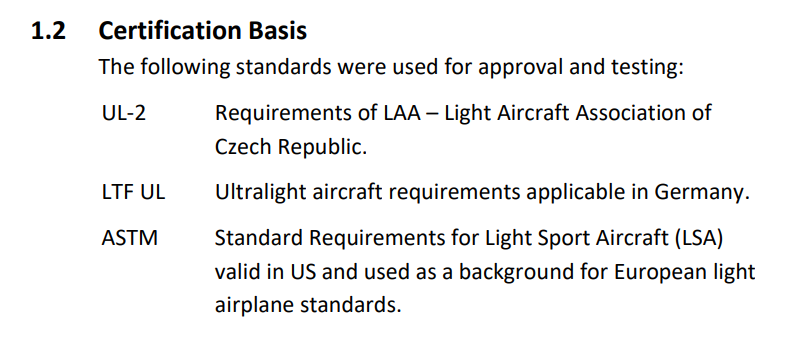

This slide points out that what the U.S. calls “light sport” is called “ultralight” in Europe:

ChatGPT:

It says to call the Shark a “light aircraft”, which typically refers to anything weighing less than 12,500 lbs. From the Shark manual, available on the Shark.aero web site:

Maybe ChatGPT doesn’t think that the Islamic Republic of Germany is part of “Europe” anymore?

ChatGPT is humorless. On the multi-engine/jet lecture:

“Now the second engine can take you to the scene of the accident!” ⚠️ Pedagogically funny, but borderline unsafe phrasing for students.

This ancient saying made ChatGPT feel unsafe. Its correction:

“Now the operating engine may or may not be able to maintain altitude, depending on weight, density altitude, and aircraft performance.”

On helicopter aerodynamics:

400 rpm actually is the Robinson R44 main rotor speed. The original slide gives the students a ballpark figure to keep in mind regarding how fast helicopter main rotors spin. ChatGPT’s “correction” leaves them guessing.

ChatGPT disagrees with the Robinson Factory Safety Course. It says “Rotor kinetic energy is the key stored energy” for landing without an operating engine. This can’t be right, intuitively, since it is possible to autorotate from 500′ and also from 5,000′.

ChatGPT, when pressed, stuck to its guns: “[altitude] is an external energy source”.

Summary: ChatGPT is better at creating slides than at correcting them.

It looks like we are farther than we thought from AI flying around the world solo. Zara Rutherford’s achievements are impressive. I hope she is an inspiration to girls and boys wanting to become pilots. I also hope your ground school went well this year, Phil, inspiring some of the best young minds to get involved in aviation. (“Artificial intelligence” should try to get better at doing 2nd graders’ book reports before it gets involved in aviation. Artificial crackpot, more like it.)

So the GenAI cost more time than it saved, but made you fight misinformation. Isn’t there an old chestnut that it takes 7 repetitions of the correct information to wash out 1 repetition of false information?

Important new research FROM AN AI COMPANY:

https://www.anthropic.com/research/AI-assistance-coding-skills

“On a quiz that covered concepts they’d used just a few minutes before, participants in the AI group scored 17% lower than those who coded by hand, or the equivalent of nearly two letter grades. Using AI sped up the task slightly, but this didn’t reach the threshold of statistical significance.”

[17% is incorrect; the gap is 17 percentage points, not 17 percent. See below.]

“On average, participants in the AI group finished about two minutes faster, although the difference was not statistically significant. There was, however, a significant difference in test scores: the AI group averaged 50% on the quiz, compared to 67% in the hand-coding group—or the equivalent of nearly two letter grades (Cohen’s d=0.738, p=0.01).”

[so the AI group took the same amount of time, but retained 25% less knowledge…or, really: STUDENTS WHO AVOIDED AI TOOK EFFECTIVELY THE SAME AMOUNT OF TIME AS AI USERS, YET SCORED 34% HIGHER ON THE QUIZ.]
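
For anyone who wants to check the arithmetic, here is a minimal Python sketch. It uses only the two quiz averages quoted above (50% and 67%); the function and variable names are made up for illustration. It shows why the same 17-point gap can be described as "about 25% lower" or "34% higher" depending on which group is the baseline.

```python
def relative_change(value: float, baseline: float) -> float:
    """Percent change of `value` relative to `baseline`."""
    return (value - baseline) / baseline * 100.0


# Quiz averages quoted from the study summary above.
ai_score = 50.0    # AI-assisted group, percent correct
hand_score = 67.0  # hand-coding group, percent correct

# Absolute gap, in percentage points (this is the "17" in the first quote).
point_gap = hand_score - ai_score

# Relative gaps, which depend on which group is the baseline.
ai_vs_hand = relative_change(ai_score, hand_score)  # AI group vs. hand coders
hand_vs_ai = relative_change(hand_score, ai_score)  # hand coders vs. AI group

print(f"Gap: {point_gap:.0f} percentage points")
print(f"AI group relative to hand-coding group: {ai_vs_hand:.1f}%")
print(f"Hand-coding group relative to AI group: {hand_vs_ai:+.1f}%")
```

The percentage-point gap is symmetric, but the relative figures are not, which is why "17% lower" does not match either way of reading the numbers.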