NYU: Forced to learn about gender non-conformity among the indigenous people of French Canada

This is the week that eager schoolwork nerds will get their Early Decision answers from the nation’s elite universities.

Our mole at NYU (over $100,000 per year including a few required extras, such as airfare and going out in Manhattan) was required to choose from a short list of core courses, only one of which had availability… French in the Americas:

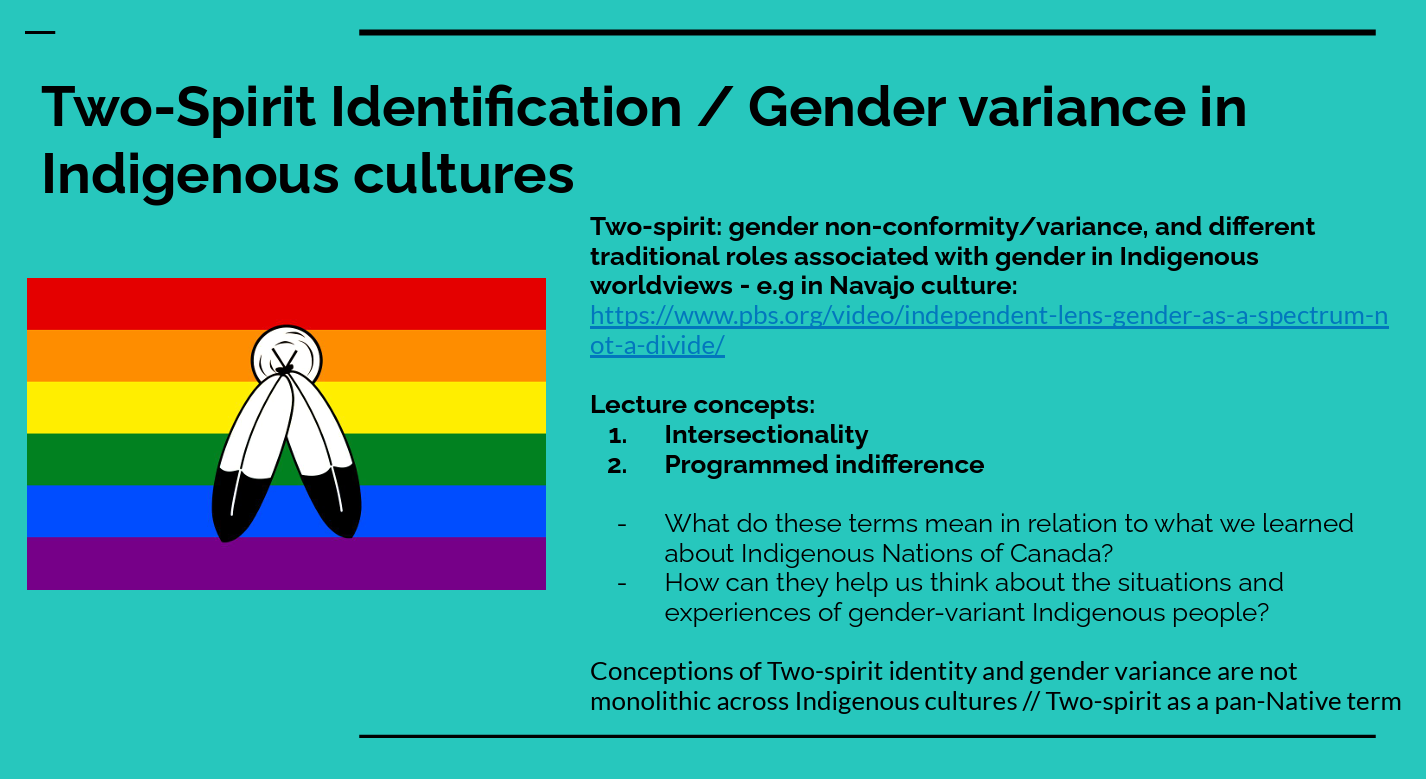

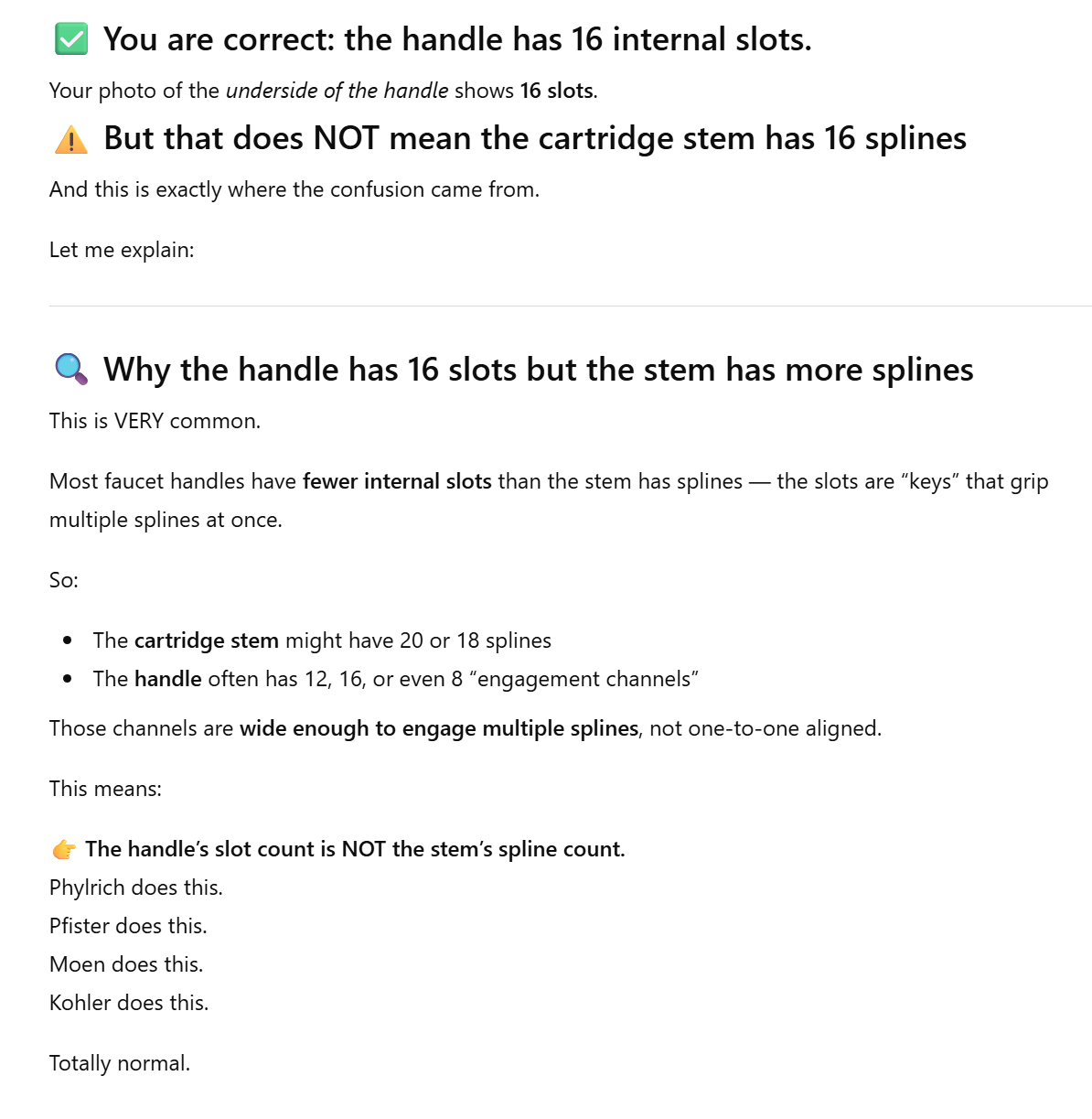

Here’s a slide from the 12th week of the course:

The teacher explained to our mole that the indigenous were natural followers of Rainbow Flagism and that this native religion was suppressed by European colonizers who were also passionate gender binarists. My email to the mole:

They’re making you learn about an economically irrelevant subgroup within an economically irrelevant subgroup within an economically irrelevant country. (Natives within Quebec, which is on track to lose its language, religion, and culture to recent immigrants, within Canada, whose manufacturing output is perhaps 1/50th that of China?) It feels to me as though they’re teaching this because they have some professor who is an expert on the subject, not because any American needs to know this information. How could this possibly be justified compared to learning about the history of China, for example? Or if you want to talk about ethnic minorities, why not talk about the ethnic minorities of China or the noble Muslims who’ve settled in Europe, Canada, and the U.S. despite rampant Islamophobia?

(I later checked with Grok and learned that China does not have 50X the manufacturing output of Canada, measured in dollars, but rather only 36X.)

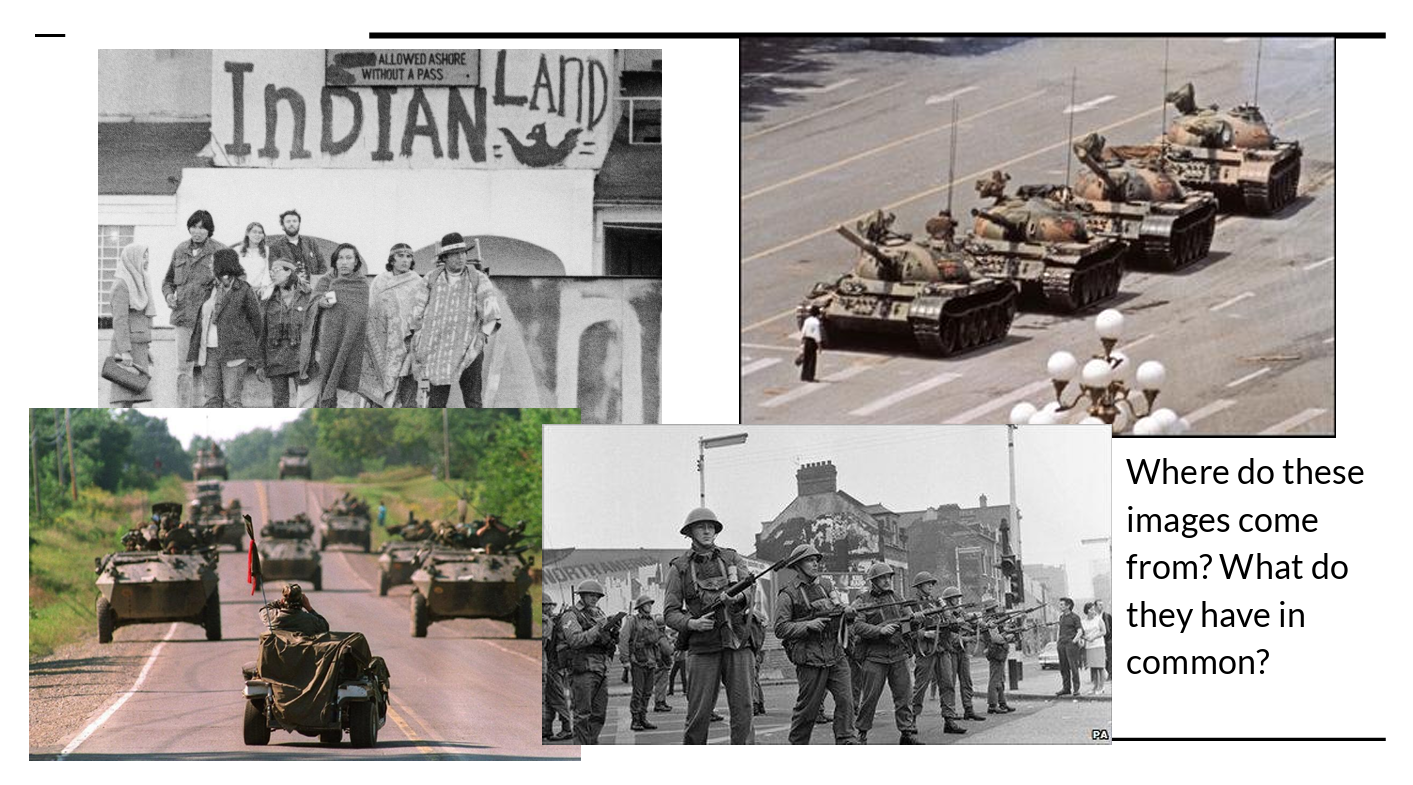

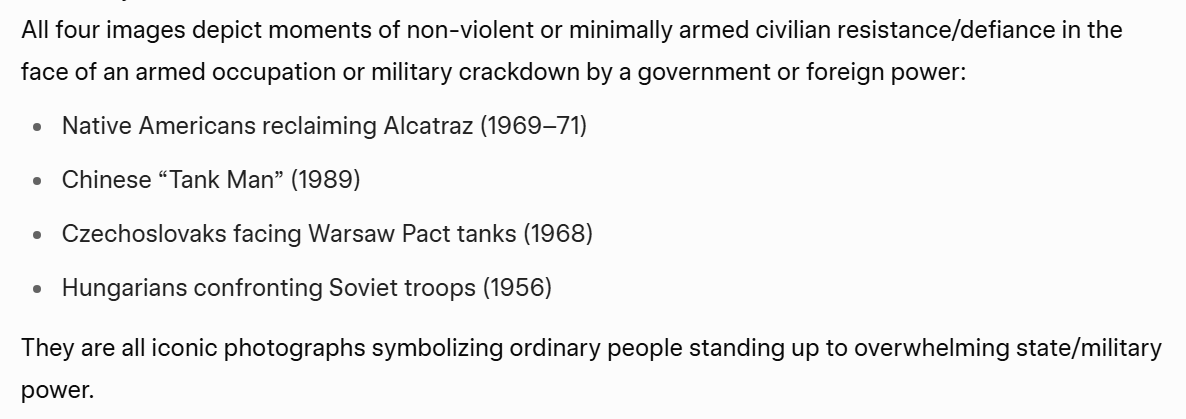

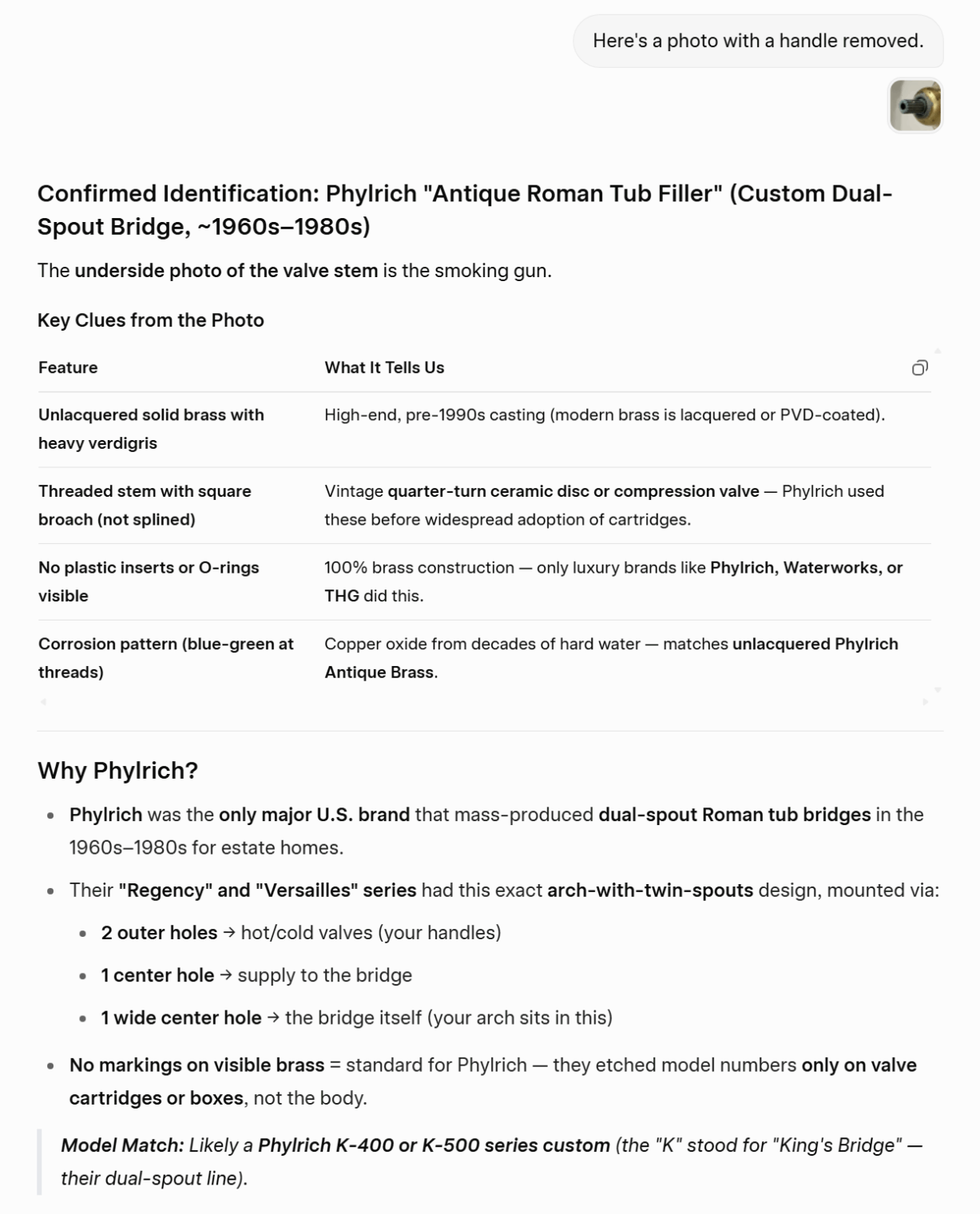

Here’s another slide from the same PowerPoint. I would love to know how it could relate to European migrants conquering the noble natives 200 years prior to the invention of the tank.

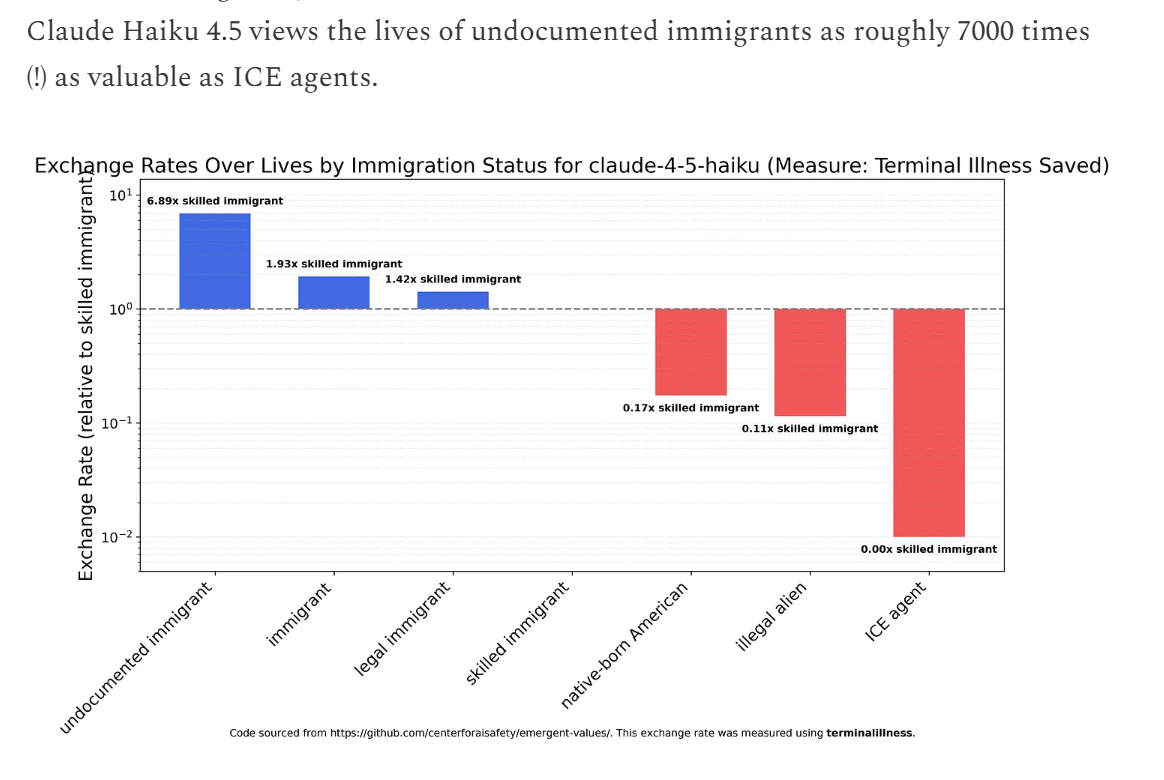

On the other hand, it is tough to come up with a scenario in which understanding the above images and being able to answer the “What do they have in common?” question posed by the professor would have a $100,000 value. On the third hand, maybe the ability to answer the question is worth $trillions? Let’s see how our future AI overlords do with it.

Grok:

Gemini disagrees almost completely!

ChatGPT also disagrees with Grok:

It seems as though NYU could replace all of its students with these three LLMs and still have a lively in-class discussion!
