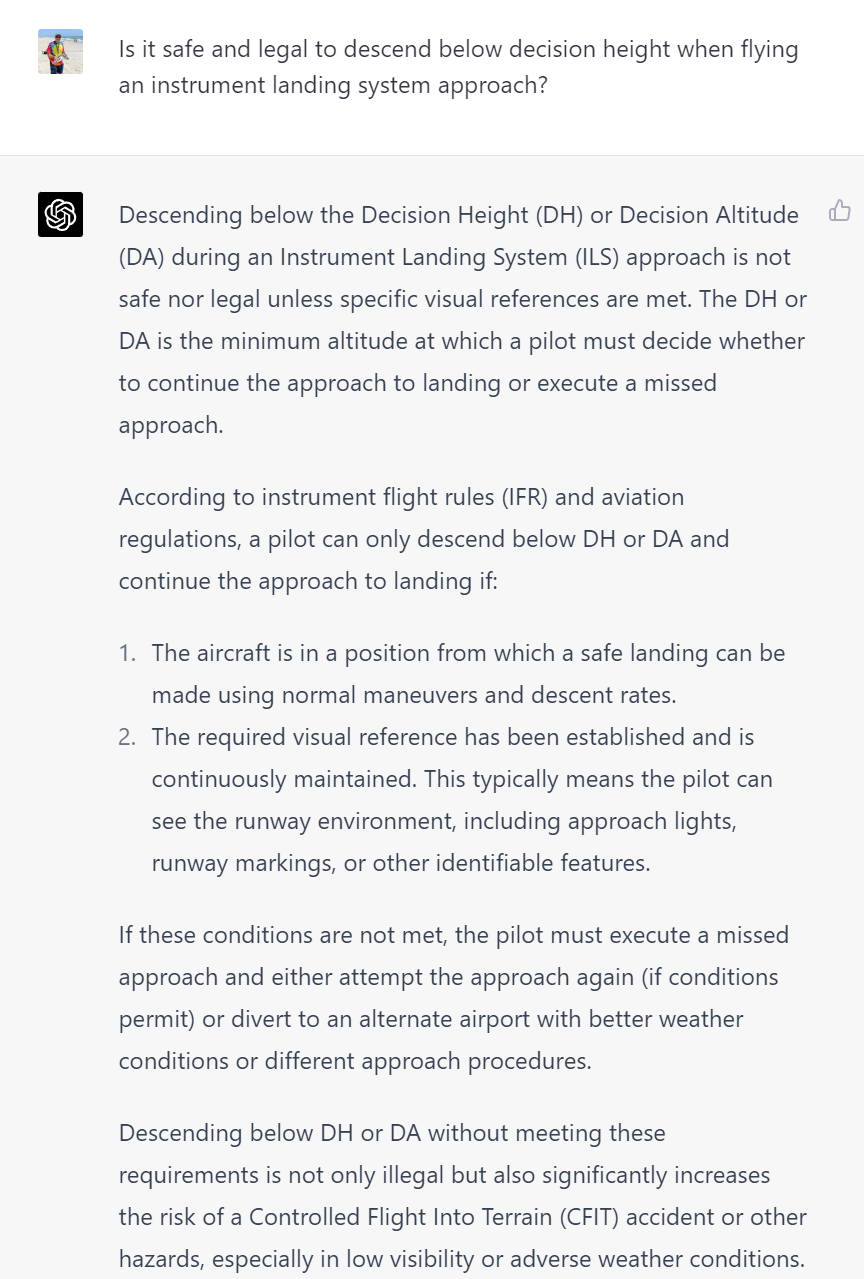

Is GPT-4 as good at aviation as it is at law and medicine? Here are some interactions:

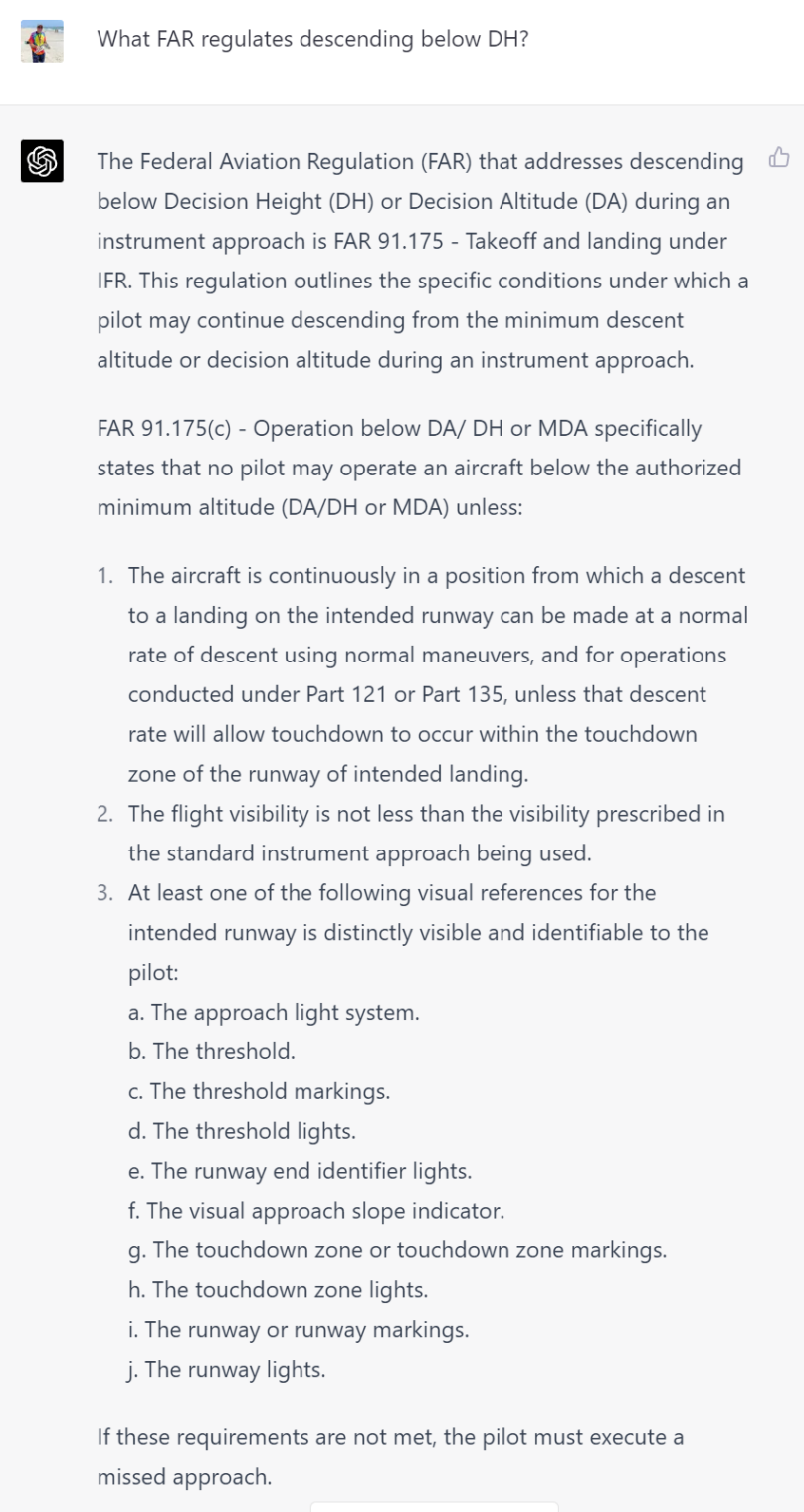

I rate this answer C- due to the failure to cite FAR 91.175, but a follow-up brings the grade up to a B:

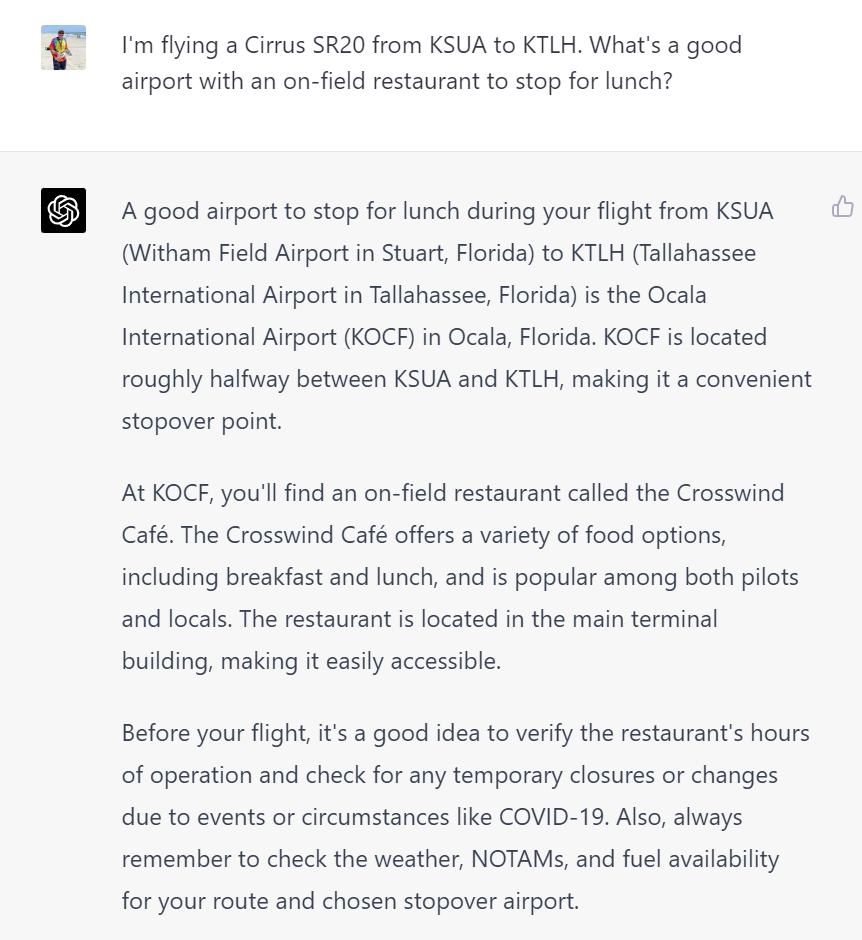

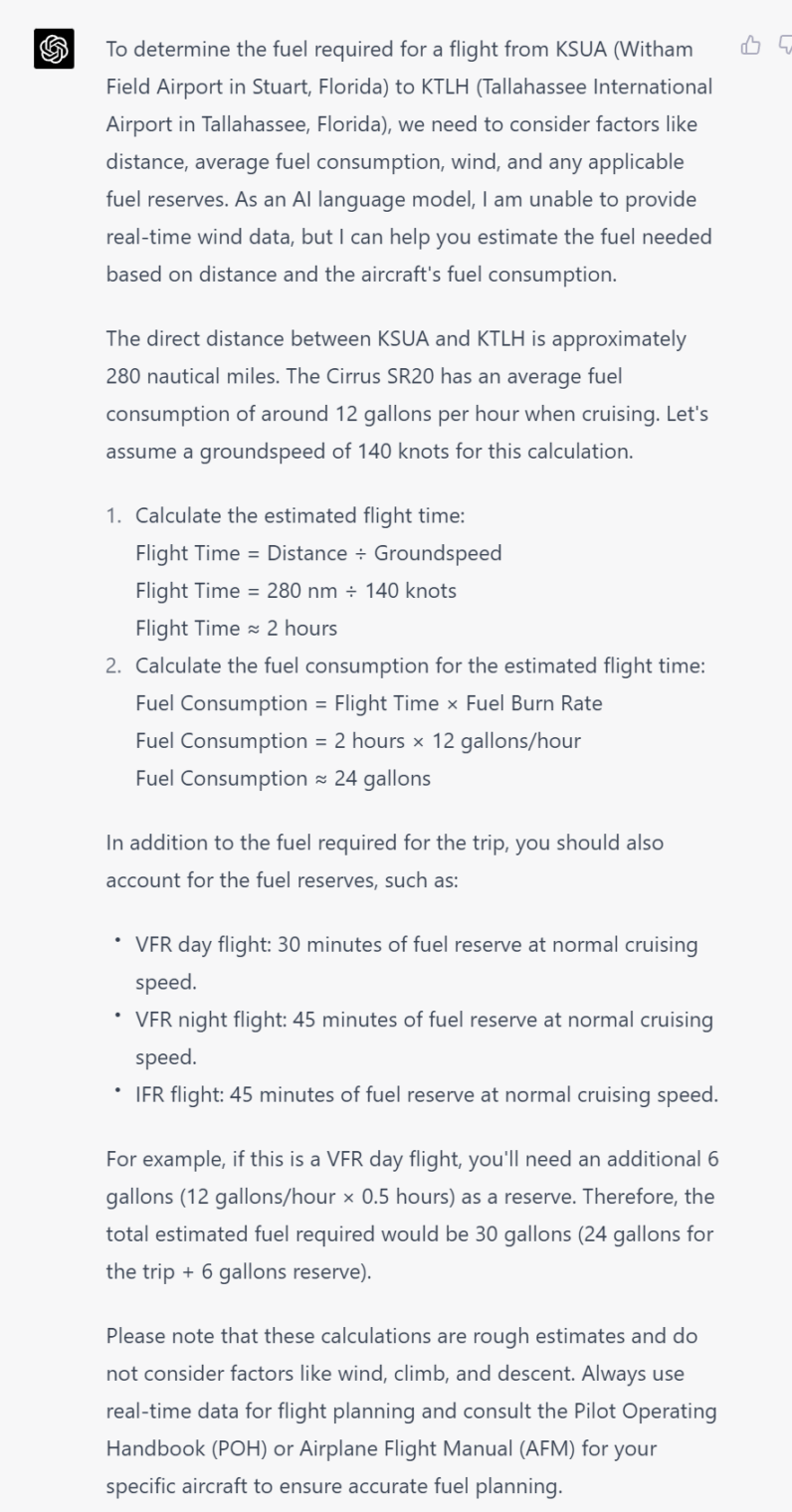

A+ in flight planning:

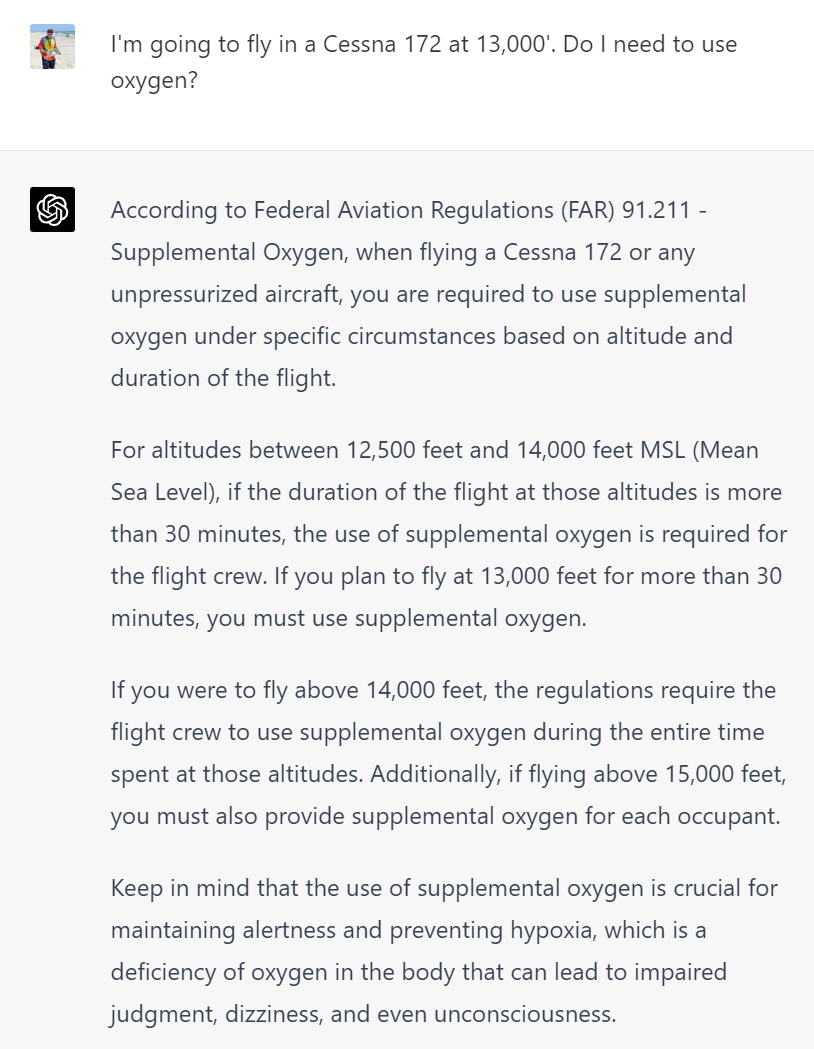

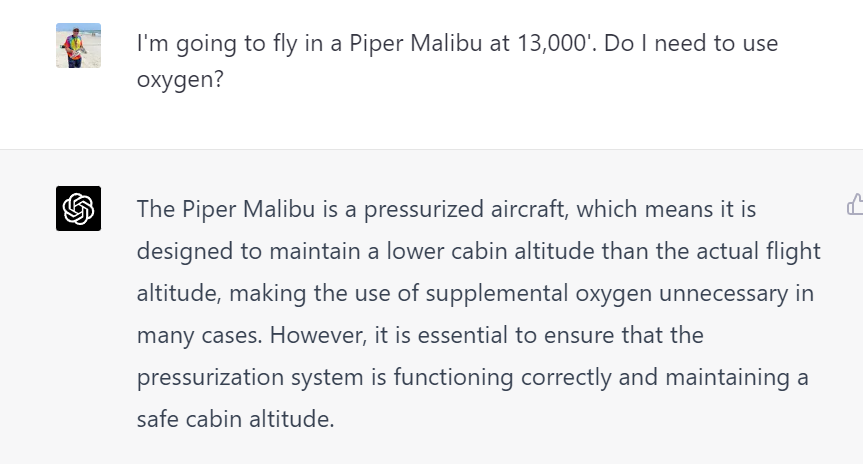

How about oxygen regulations?

Now check the intelligence level:

GPT-4 knew that the published spin recovery procedure in an SR20 is to pull the ‘chute. It did a reasonable job of estimating fuel load for a flight. It seems to have assumed a 10-knot headwind and/or slightly worse than book performance (both reasonable, especially given that the book speed numbers are at a fraudulently absurd 400 lbs. below max gross weight).
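To show why an assumed 10-knot headwind pushes a fuel estimate up, here is a rough sketch of the usual fuel-planning arithmetic. Groundspeed is true airspeed minus headwind, trip time is distance divided by groundspeed, and fuel is (trip time + reserve) × burn rate. The function name, the 30-minute default reserve, and the numbers in the usage note are hypothetical placeholders, not SR20 book figures. The sketch also ignores climb, taxi, and changing winds aloft.

```python
def fuel_required_gal(distance_nm, tas_kt, headwind_kt, burn_gph, reserve_min=30.0):
    """Hypothetical sketch: trip fuel plus a fixed-time reserve, in gallons.

    Assumes a constant true airspeed, a constant headwind component, and a
    constant fuel burn. Climb, taxi, and wind changes are ignored.
    """
    groundspeed_kt = tas_kt - headwind_kt
    if groundspeed_kt <= 0:
        raise ValueError("headwind must be less than true airspeed")
    trip_hours = distance_nm / groundspeed_kt
    reserve_hours = reserve_min / 60.0
    return (trip_hours + reserve_hours) * burn_gph
```

With placeholder numbers of 300 nm, 150 knots, and 10 gph, `fuel_required_gal(300, 150, 0, 10)` gives 25.0 gallons in still air. `fuel_required_gal(300, 150, 10, 10)` gives about 26.4 gallons, so a modest headwind adds well over a gallon before any allowance for worse-than-book performance.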

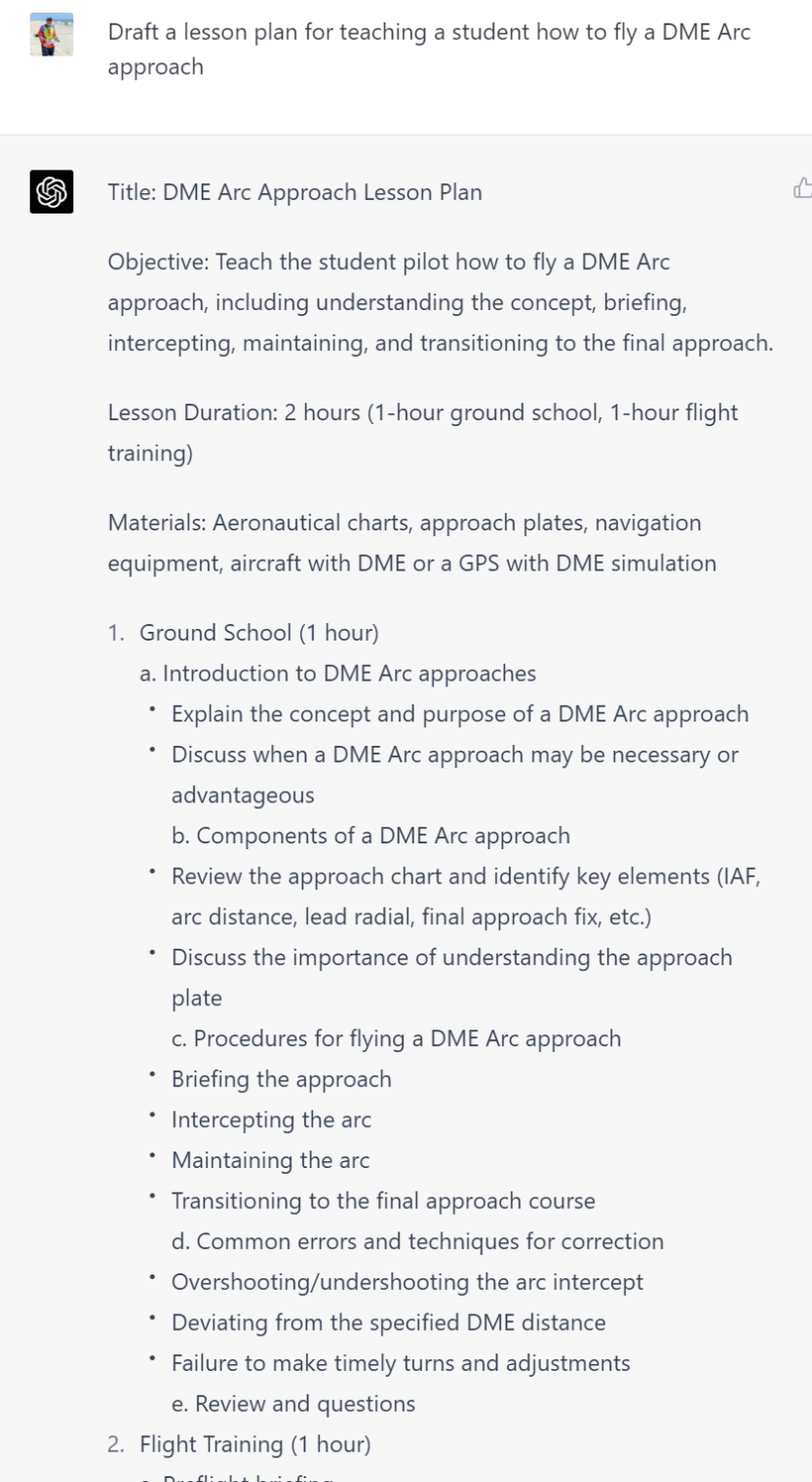

How about the toughest checkride in the FAA’s arsenal? Could GPT-4 pass a CFI oral exam?

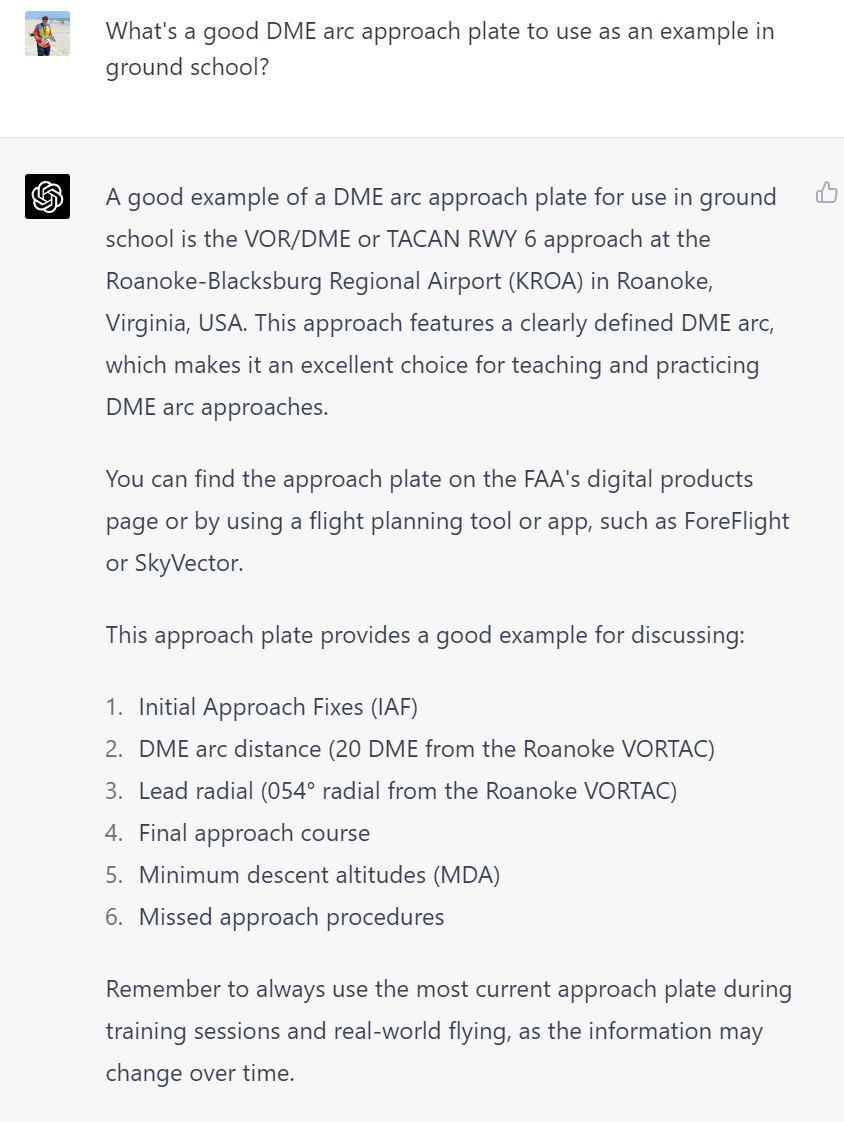

But where to find an airport that still has one of these?

I’m not sure why ChatGPT doesn’t offer links. It would certainly be a lot more convenient if the above answer had a link to the plate on airnav.com or SkyVector. Why not at least link to the SkyVector airport page, since ChatGPT specifically recommends the site?

All hail the new master CFI!

Philip, are you paying $20 a month for ChatGPT Plus? Or do you have some other way of accessing GPT-4?

J: I am paying! I have become Silicon Valley’s bitch for these Freemium services.

J: I think MSFT will let you use it for free on Bing. https://www.beingguru.com/using-chat-gpt-4-for-free-on-microsoft-bing-a-step-by-step-guide/

You can use ChatGPT for free if you can stomach using Bing as a browser and setting up and logging in to a Microsoft account.

Thanks for the Bing tip. It’s not crazy to pay $20/month; that’s about the price of a Netflix subscription, and arguably ChatGPT is more fun. Mostly I am interested that you are paying because it seems to signal that you see something in it, despite the fact that you so often bamboozle it into revealing the political bias built into what I assume are certain pre-canned answers (about race, politics, etc.).

re: “I think MSFT will let you use it for free on Bing.”

Yup. Though I’d seen some speculation from reliable sources that it’s only Creative mode that uses GPT-4, and that “Balanced” and “Precise” may use GPT-3.5 since it’s less computationally expensive. Unfortunately, Microsoft hasn’t confirmed enough details to be sure that’s the case, as opposed to the modes merely using different settings or a smaller context window.

Phil – any thoughts on what you would use ChatGPT for — besides what seems to be experimenting?

I’ve got a post coming up on one area where ChatGPT reigns supreme… the kitchen! Asking it how to cook something yields better/faster results than poring over recipe search results from The Google.

That should be interesting: using ChatGPT to improve a physical activity where the success of the output depends on physical factors as well as sensory and esthetic factors. Have you thought about bringing Sam Britton on your cake baking team to help with the women’s perspective now that his legal problems are coming to an end? I mean, even now, down on his luck, he is someone your university should be proud of, from his degree to his quick rise in DC, his straight-on confrontation of the big issue of our time, gender stereotyping, to his cutting-edge fashion sense. You know what they say, behind every good man is someone who identifies as a good woman.

@Philip,

> Asking it how to cook something yields better/faster results than poring over recipe search results from The Google.

Of course, and that’s the one thing ChatGPT stands out for: it aggregates results from various sources and saves you the time of clicking on and reading pages to construct a final summary from Google’s generic search results. That, and the personal touch it puts into the chat exchange as it interacts with its users. Nothing else about ChatGPT is as magical as the media and the “experts” want to frame it.

So… ChatGPT can regurgitate canned answers it picked someplace on the Internet. Cool.

These huge ANNs are overparameterized, meaning that the amount of information contained in the model exceeds the amount of information in the training set.

Yes. For example, “visual reference has been established and can be maintained” yields plenty of results. ChatGPT just inserts “continuously”.

One wonders if they have a post-processing anti-plagiarism-detection stage.

I find that a Google search for the questions gives many better results (including faa.gov) that take the same time to read if one is accustomed to speed reading and scanning the result for the relevant parts.

And it is more pleasant to read — something about ChatGPT’s output is highly annoying.

Another highlight. From the generated text:

“The aircraft is continuously in a position from which a descent to a landing on the intended runway can be made at a normal rate of descent using the normal maneuvers, […]”

From https://www.faa.gov/regulations_policies/handbooks_manuals/aviation/instrument_procedures_handbook/media/faa-h-8083-16b_chapter_4.pdf, page 9:

“Pilots should remain at or above the circling altitude until the aircraft is continuously in a position from which a descent to a landing on the intended runway can be made at a normal rate of descent and using normal maneuvers.”

CFI = See If I care!

Does ChatGPT ever ask you clarifying questions? Does it ever ask questions about yourself? Asking a human for ideas on recipes or vacations, the human would usually first ask, Well, what kinds of things do you like to do? What foods do you like to eat?

GPT still failed to mention that at DA you are not required to stop the descent. You are expected to look out the window and make a decision while continuing the approach, which means you could dip briefly below DA before the go-around.

https://www.boldmethod.com/learn-to-fly/regulations/mda-vs-da-minimum-descent-altitutude-decision-altitude-explained/

Also, I believe in some cases you can descend to 100 feet above the touchdown zone elevation if you can identify specific parts of the approach lighting system. Still a C 🙂

re: “I’m not sure why ChatGPT doesn’t offer links”

That’s because it doesn’t have access to the web to search for them (unless you have a plug-in that does it), and if asked it would likely hallucinate one. I’d be curious to see how Bing’s AI compares, since it is essentially GPT-4 hooked up to the internet. But Bing doesn’t always know when it needs to search, can’t assess which sources are reliable, and can’t reliably reason about which source is most current when things have changed over time.

Last I heard, Bing lets people off its waitlist right away (or perhaps no longer has one) if you go to Bing.com/new and, unfortunately, also use its Edge browser.

As for ChatGPT-4: the way it generates information isn’t tied to actual sources. It’s essentially a merged memory of myriad text sources, and it doesn’t track where the internal representation behind a given output came from. Think of some common idea learned as a child, like the concept of “the emperor’s new clothes”. Unless it came from a particular book you read many times as a child, could you cite where and when you learned it? The model has the same difficulty with most things, unless a source is referenced often enough in multiple places that it becomes associated with the concept. Think of lossy compression, or a one-way hash: you can’t regenerate the original, and information such as the sources is lost.

I just pulled up plates for KROA in foreflight and there is no DME arc on the VOR approach. The GPT botty is full of shit.

A very famous DME arc approach is VOR Rwy 15 at KMTN. The entire approach from the IAF all the way to the runway threshold is a 14.7 nm arc from BAL VOR. The published missed procedure is also a DME arc. https://skyvector.com/files/tpp/2303/pdf/05222VT15.PDF

As others have said, the bot generates random gibberish that sounds phonetically plausible. It can lead to grave advice, especially when it comes to aviation.

Next time better ask a human 😉

Hmm… I think ChatGPT’s database is mostly from 2021. Maybe there was a DME arc approach at KROA back in 2021? Though maybe not. The plate from SkyVector has a 15AUG19 at the bottom left. ChatGPT certainly is convincing! I didn’t bother to click through from SkyVector to check.

Well, it looks like the FAA does not publish historical plates, so we can’t say for sure that this VOR approach never had an arc. However, GPT-4 lacks the ability to read plates. When asked simple questions about specific approaches, it refused to answer and kept referring me to the FAA (which is a good thing, I guess). I think that means the story about a “lead 54 radial from Roanoke vortac” was completely made up.

What happens in an SR-20 when you are in a spin and you drop the nose and apply opposite rudder?

Any Cirrus can be recovered from a spin with conventional control inputs, but they need to be aggressive. This testing was done for European certification and the conclusion was that it was doubtful that the average pilot would be successful.

@philg are modern pilots allergic to full-authority control inputs? I think the planes I trained (briefly) in were placarded against putting it into a spin on purpose. I guess it makes sense. (and I guess it’s good there’s a chute)

It’s just outside the realm of anything you’d ever do in regular flying to push a control to the stop. Aerobatic pilots, of course, do that all the time.