Here’s a question at the intersection of marketing and electronics: who is buying Intel CPUs right now after Intel has told the world that they will render the current socket, and therefore all current motherboards, obsolete before the end of 2024?

“Intel’s next-gen desktop CPUs have reportedly leaked” (Tom’s Hardware):

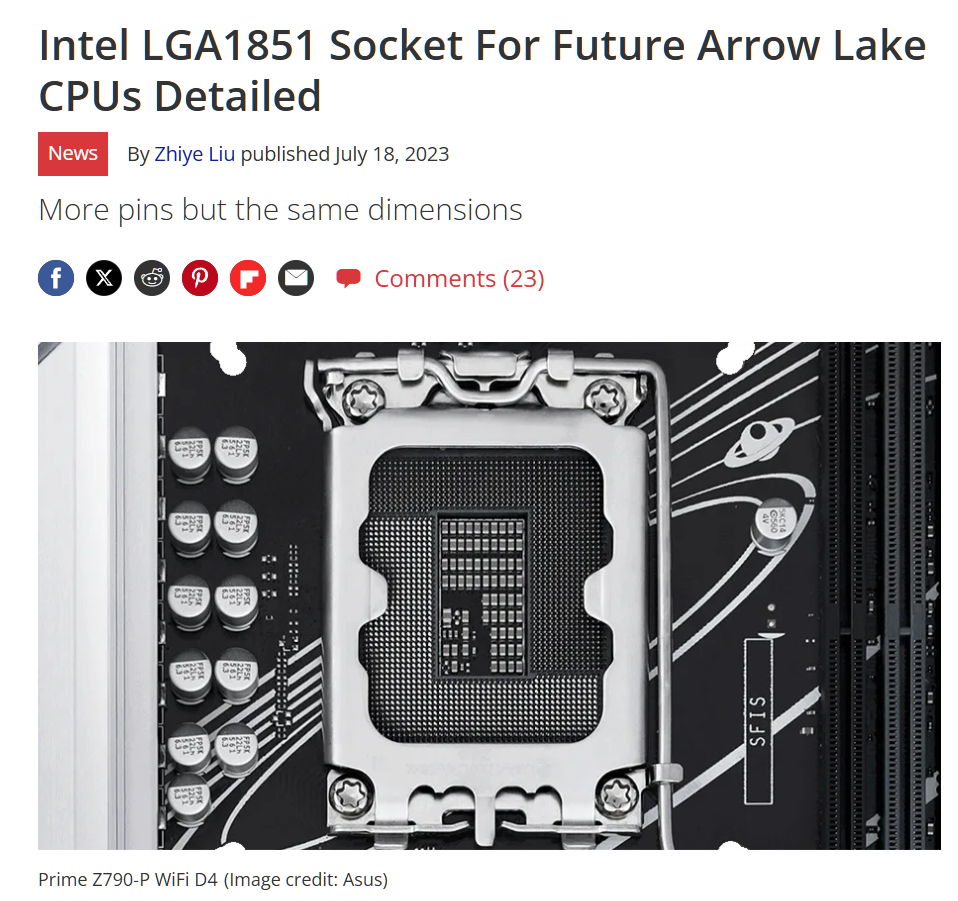

Arrow Lake will reside on new Intel motherboards with LGA1851 sockets and 800-series chipsets. Although the upcoming socket has 9% more pins than the existing LGA1700 socket, the dimensions didn’t change, so you might be able to recycle your existing CPU cooler.

Intel hasn’t provided details on when Arrow Lake will hit the market. But we suspect it’ll be sometime in the fourth quarter of the year since AMD’s upcoming Zen 5 Ryzen processors are on track for launch before the year is over.

Especially given that AMD is not rendering its socket obsolete for another few years, I am having trouble figuring out why demand for Intel desktop CPUs, at least at the high end, doesn’t fall off a cliff.

The news about the socket is actually almost a year old at this point. A July 2023 article:

I guess it is tough to keep a secret when there are so many independent motherboard manufacturers, but shouldn’t we expect a demand collapse, massive price cuts for both CPUs and motherboards, etc. as the Arrow Lake release gets closer?

Is the explanation that anyone who cares about CPU/computer performance buys AMD? I think that Intel claims that their new chips have an onboard AI-optimized GPU.

The Osborne Effect might have been significantly overstated even in the case of Osborne:

https://en.wikipedia.org/wiki/Osborne_effect#Criticism

Some modern trends:

1) Computer performance has long been adequate for a significant portion of users

2) Performance increases have been somewhat modest since the end of Dennard Scaling over 20 years ago.

3) In part as a result of #1 and #2, I would expect processor upgrades to be much less prevalent than in the 1980s and 1990s (and even then, they were perhaps relatively rare, given that motherboards, memory, graphics, and storage also evolved).

4) Many computers are purchased due to an immediate need (the cat knocked a container of liquid onto the old laptop, or a company hires a new employee). People know that computers in the future may or may not be more powerful, in the same way that they also know that this current sports year will end soon and another will take its place.

The last time I upgraded a desktop I had assembled was in the 1990s.

Those who assemble their computers will wait.

Not an issue for me. I’ve probably bought 100+ Intel based servers in the past 30+ years. At no point did it occur to me that I might like to swap out the processors for new ones.

Is it worth waiting to buy a new machine with Thunderbolt 5 support?

People that upgrade anything in an existing system upgrade the Video Card. Not the CPU.

This is from the perspective of DIY enthusiasts. No other soul would ever contemplate a CPU upgrade. Most CPUs might as well be soldered to the motherboard and no one would care.

Today, every few years we get:

– A new generation of Video Cards, whose upgrade matters FAR more than the CPU upgrade

– A new generation of USB

– A new generation of the PCI bus

– A new generation of NVMe SSD sockets (related to the PCI bus)

– A new generation of Wi-Fi (built into motherboards)

– A new generation of power connectors, at least for the video card

– A new generation of RAM

– A new generation of how you might put a heatsink onto the CPU

– A new generation of how 6-10 case fans connect to the motherboard

– A new generation of how the motherboard controls RGB lighting effects (visible from outside through the case) on components inside the case, such as the heatsink, fans, and RAM modules

All of this boils down to this: you’re either going to upgrade just the Video Card, or you’re going to upgrade more.