Meet up in New York or Boston?

Loyal Readers:

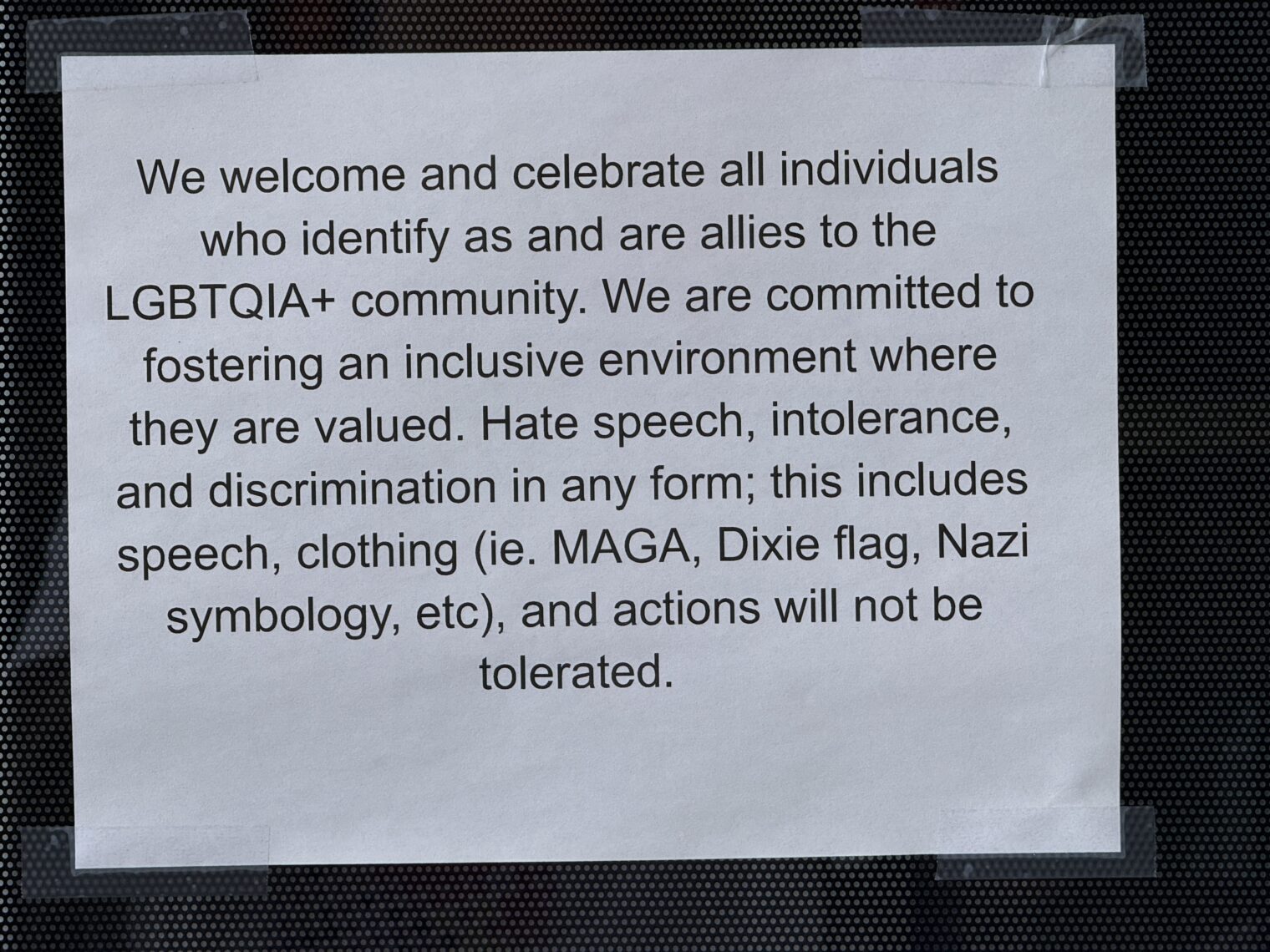

I’m headed to Manhattan this weekend and it would be fun to get together for coffee. Please email philg@mit.edu if interested. I propose Sunday, August 17 at 10:30 am, meeting near the Tenement Museum on the Lower East Side to celebrate NYC’s return to its glorious heritage of migrants housed in squalor. After coffee we can proceed to the nearby International Center of Photography and its “Great Acceleration” exhibition:

> The Great Acceleration is an established term used to describe the rapid rise of human impact on our planet according to a range of measures, among them population growth, water usage, transportation, greenhouse gas emissions, resource extraction and food production, each of which Burtynsky has photographed the outward signs of at length and in great detail over the past forty years.

(And what better way to accelerate the Great Acceleration in the United States than via open borders and associated rapid population growth?)

After NYC I’ll be traveling to Boston/Cambridge. How about a Friday, August 22 noon meeting at Yume Ga Arukara, an udon place in Porter Square that is supposedly great? I’m open to alternative suggestions, but let’s try to avoid the towns in Maskachusetts that are currently drowning in garbage because elite Massachusetts Democrats refuse to pay their peasant workers a fair wage (the state is home to at least 355,000 “illegal and inadmissible migrants” so maybe the hope was that these enrichers would pick up garbage at a lower hourly rate?):

Thank you for your attention to this matter!
