MIT IAP courses for 2026

I wondered if it was continued fallout from coronapanic, but the course offerings for MIT’s January 2026 term (“IAP” for Independent Activities Period, in which both for-credit and more casual courses are traditionally offered) seemed rather thin when I first looked. The deadline for submitting an event was December 1, 2025 and I checked the schedule on November 29. There were still some awesome classes, e.g.,

Here was another one that seemed likely fun/challenging/technical:

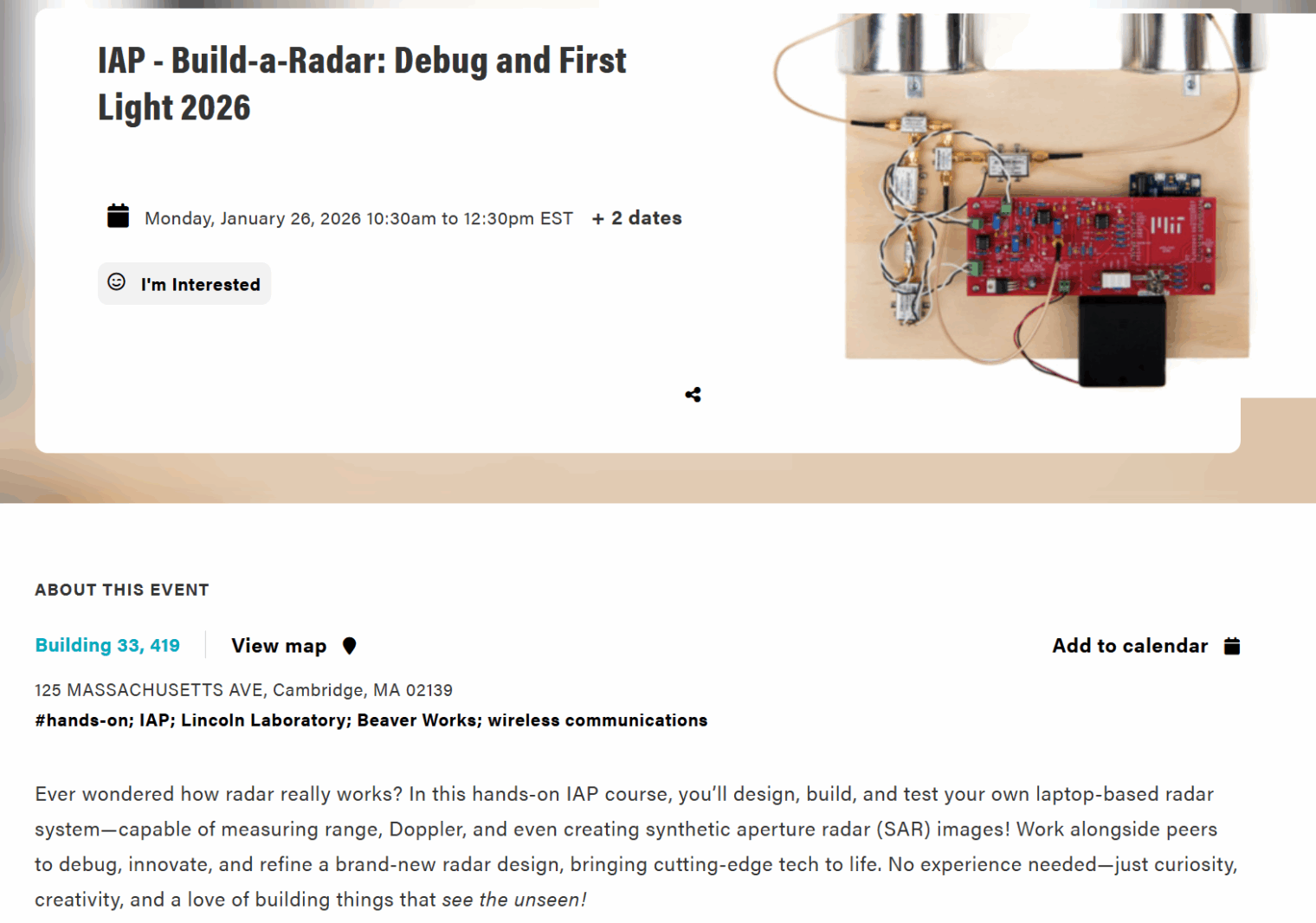

Keep an eye on the neighbors?

Want to learn what’s new and interesting in physics? A search on November 29 revealed zero events (see below for how the site populated later):

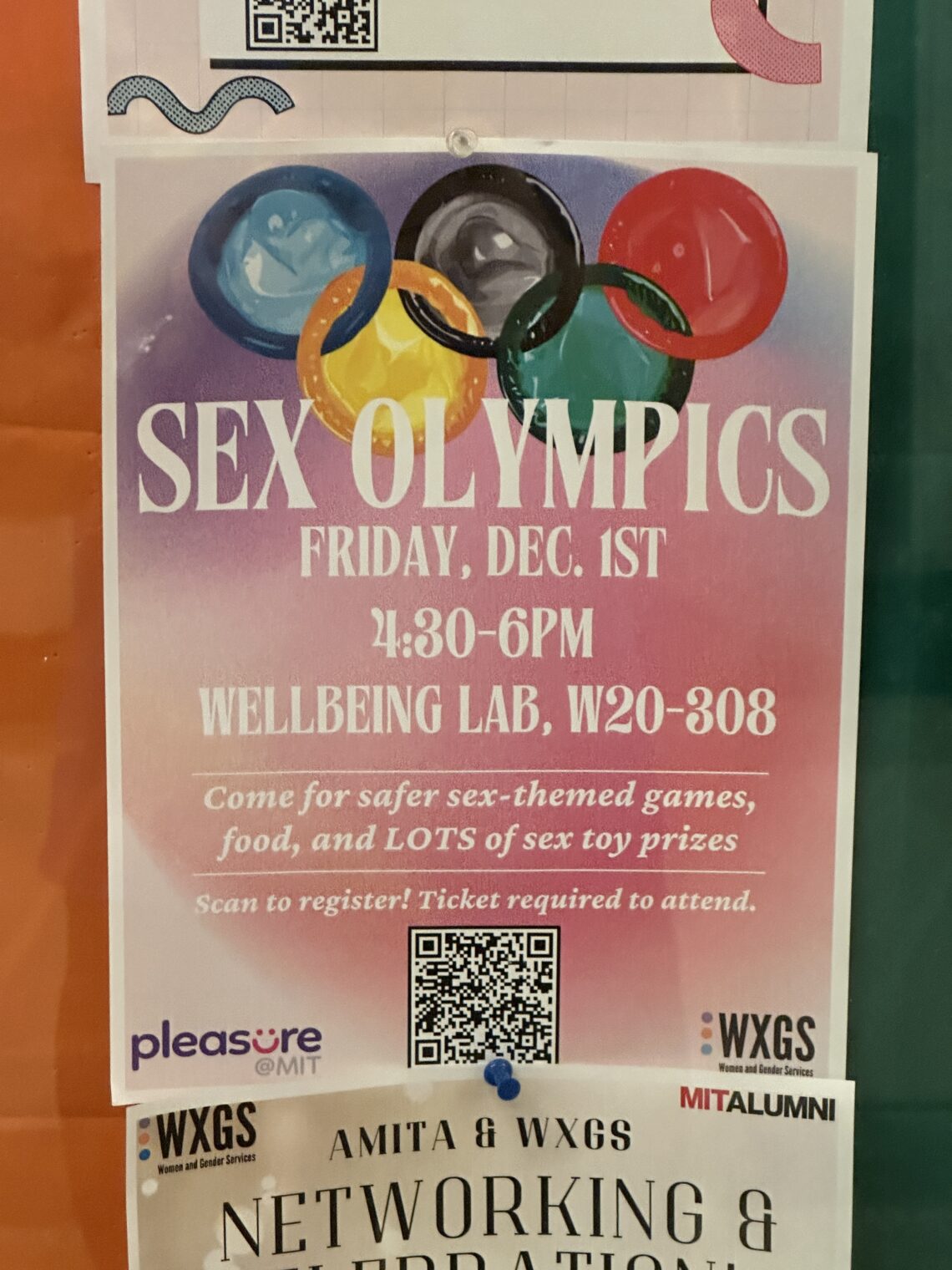

There were two pages of results for Diversity, Equity, and Inclusion:

Let’s look at the first class on Informed Philanthropy: “Students analyze the work of non-profits to address the challenges and opportunities facing MIT’s neighboring communities, with particular focus on community representation, equity, and social justice”. Imagine raising one’s hand towards the end of the class and saying, “Cambridge is critically short of affordable housing and health care. Therefore, in order to advance social justice for those whose ancestors were slaves in the U.S., we should give the $7,000 to ICE so that they can deport all of the undocumented migrants who are living in Cambridge public housing, contributing to long waiting lists to see doctors, and driving up food prices with their SNAP/EBT cards.”

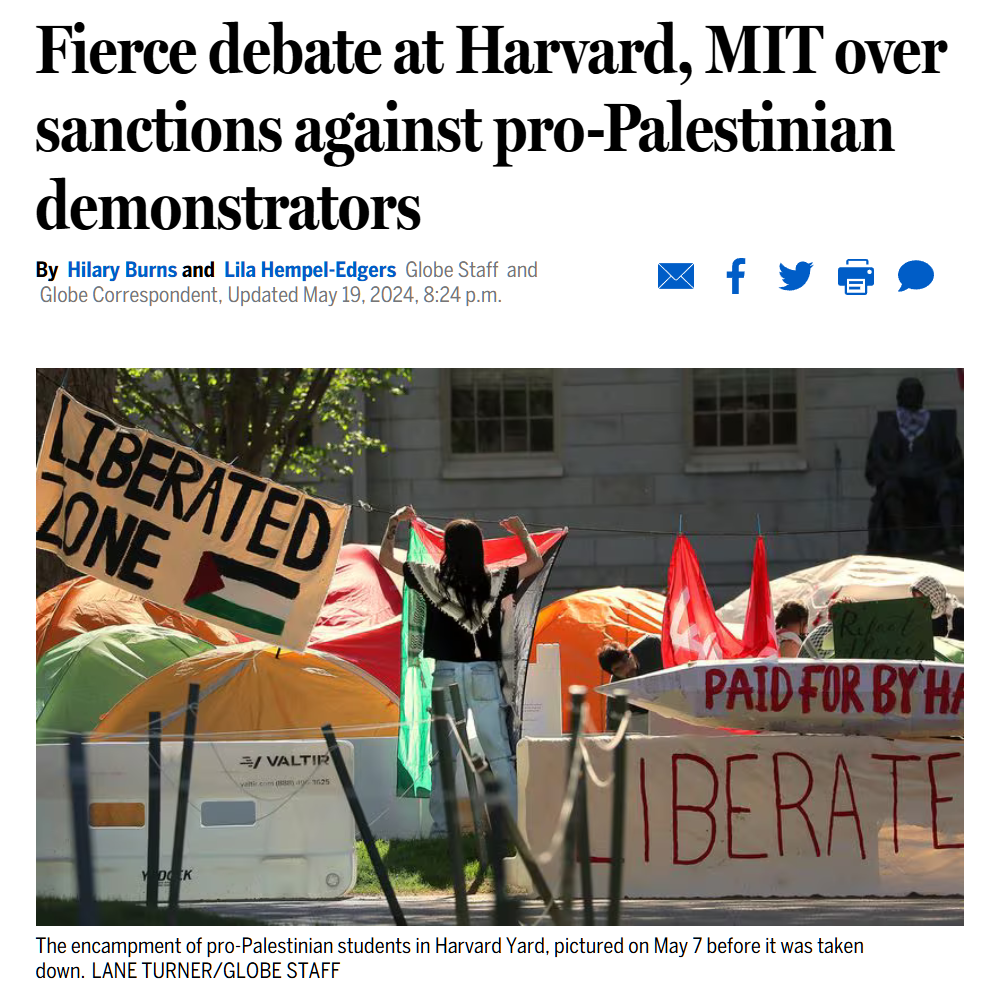

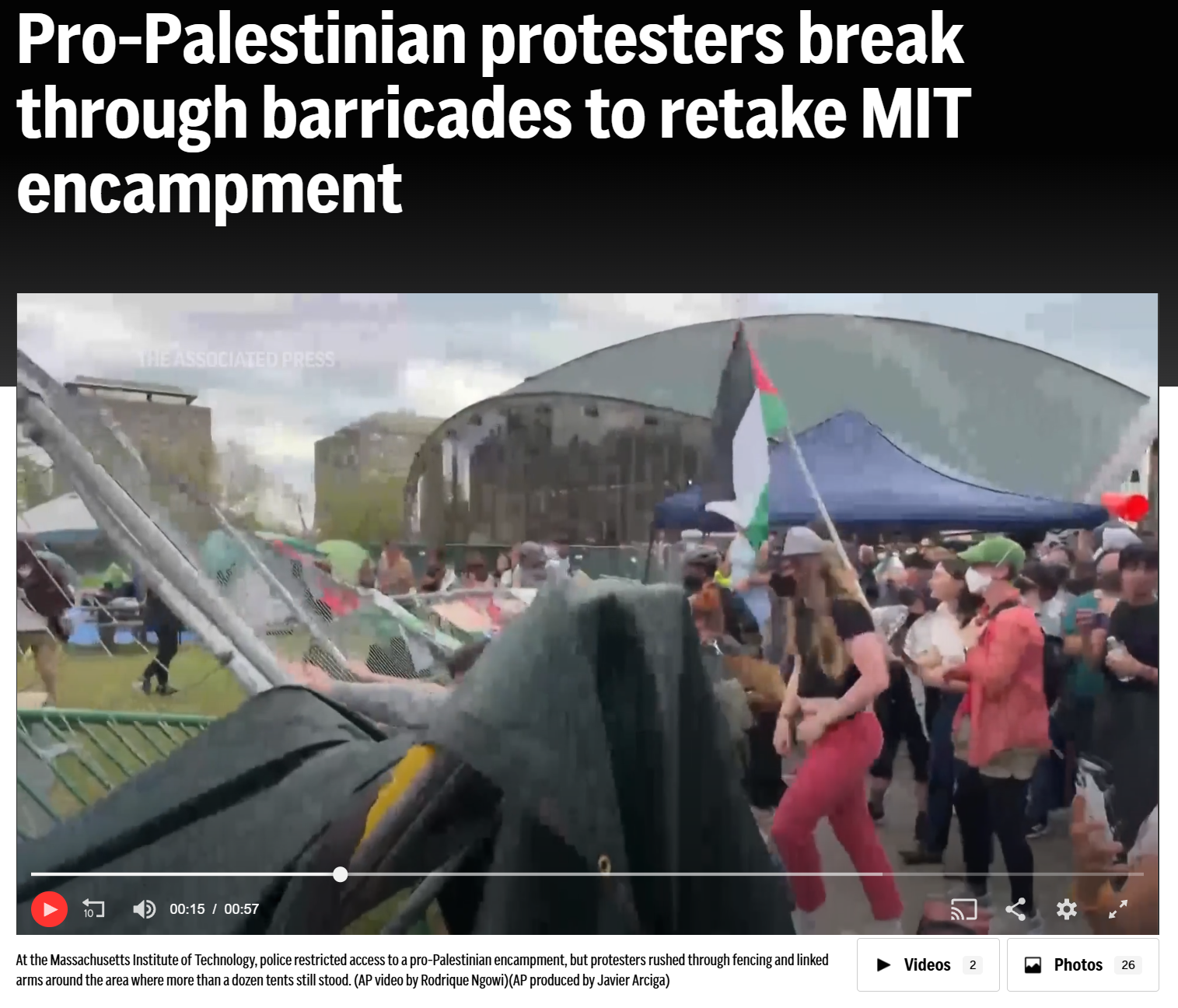

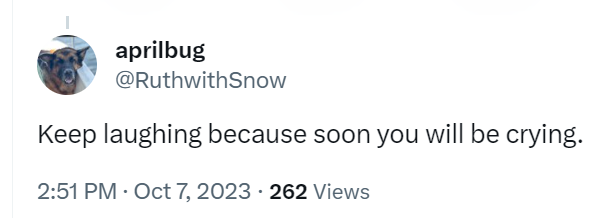

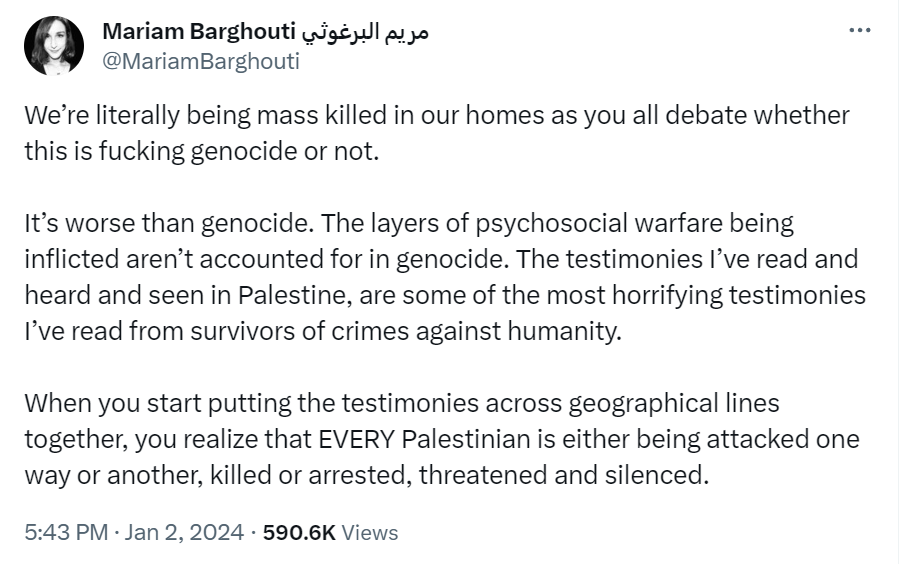

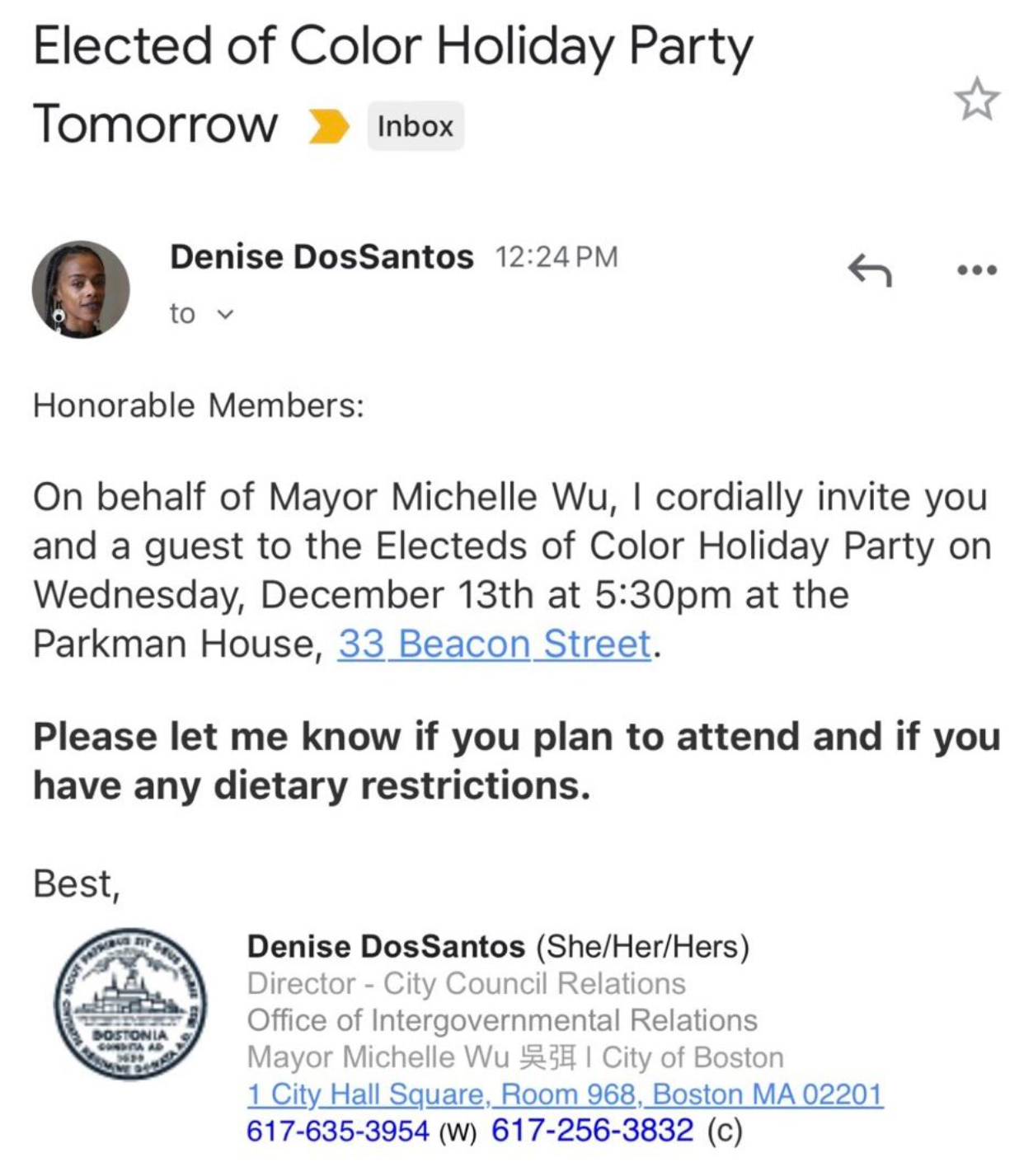

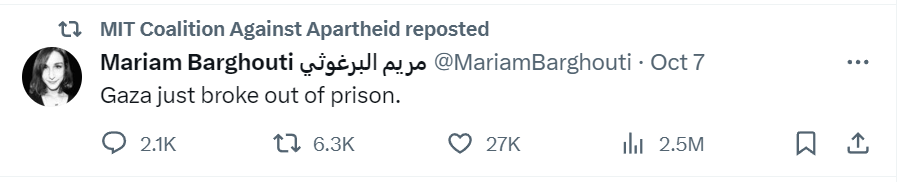

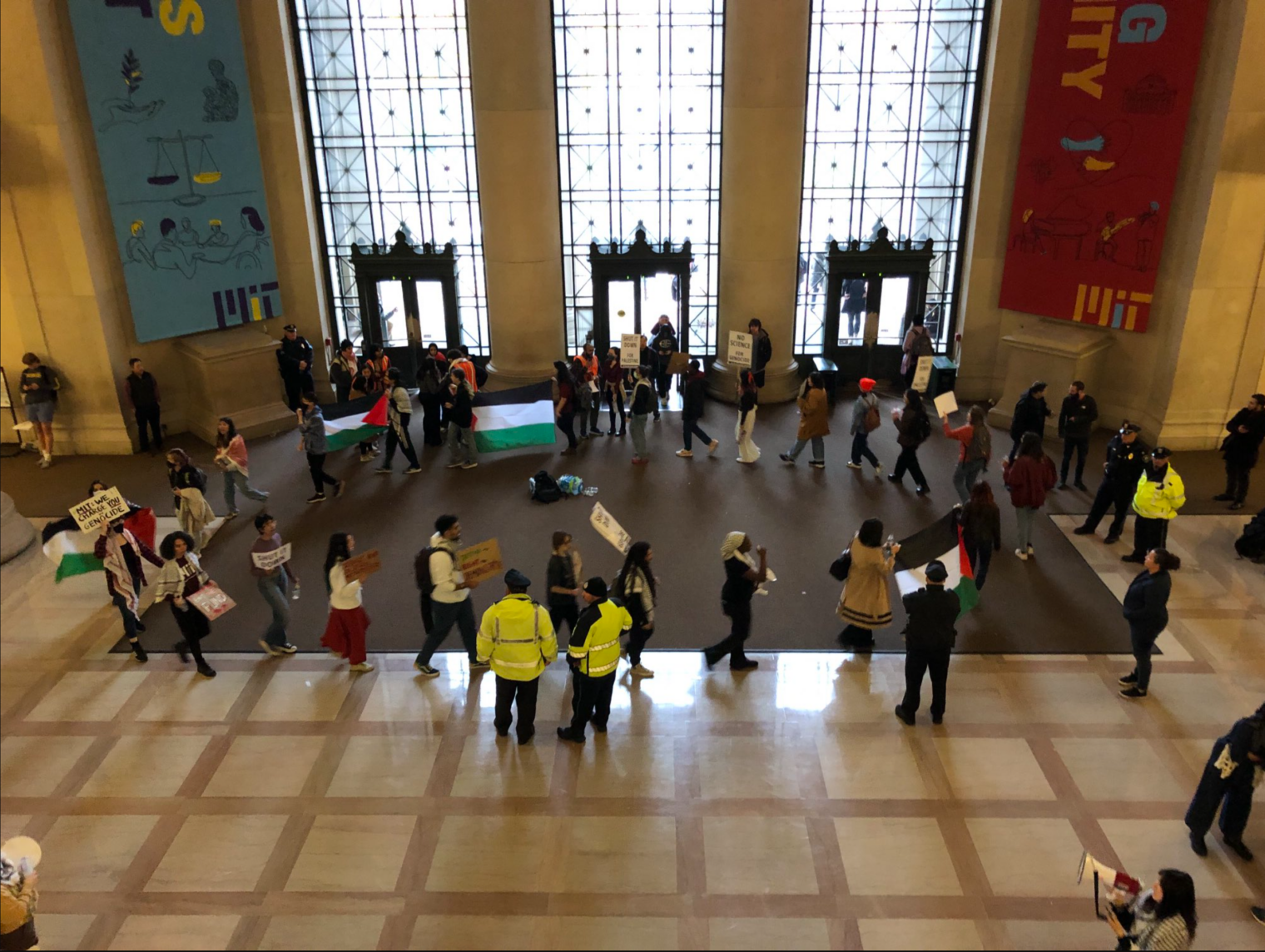

If you were concerned that the recent jihad waged by Rahmanullah Lakanwal might exacerbate the crisis of Islamophobia in the U.S.:

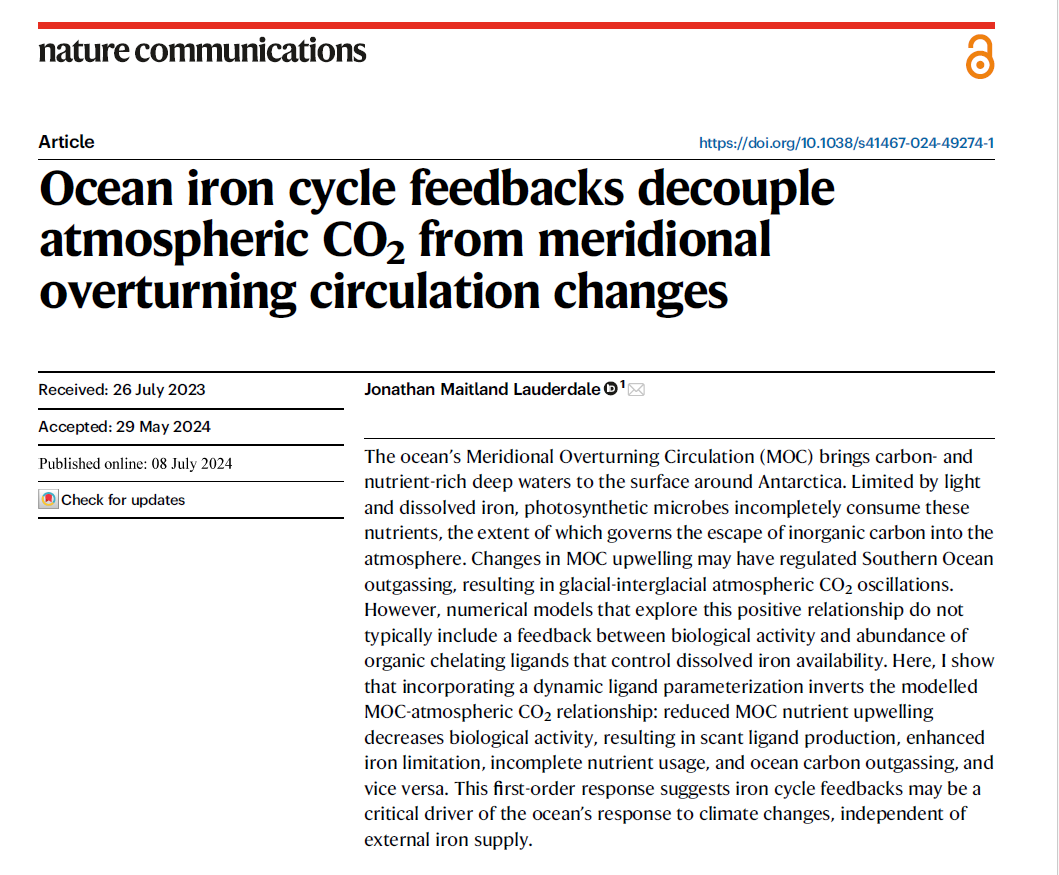

When I searched again on January 14, about 10 physical science events had been added for the month of January. More importantly, since LLMs will soon do all of the physical science research that anyone might want, I found a class on AI!

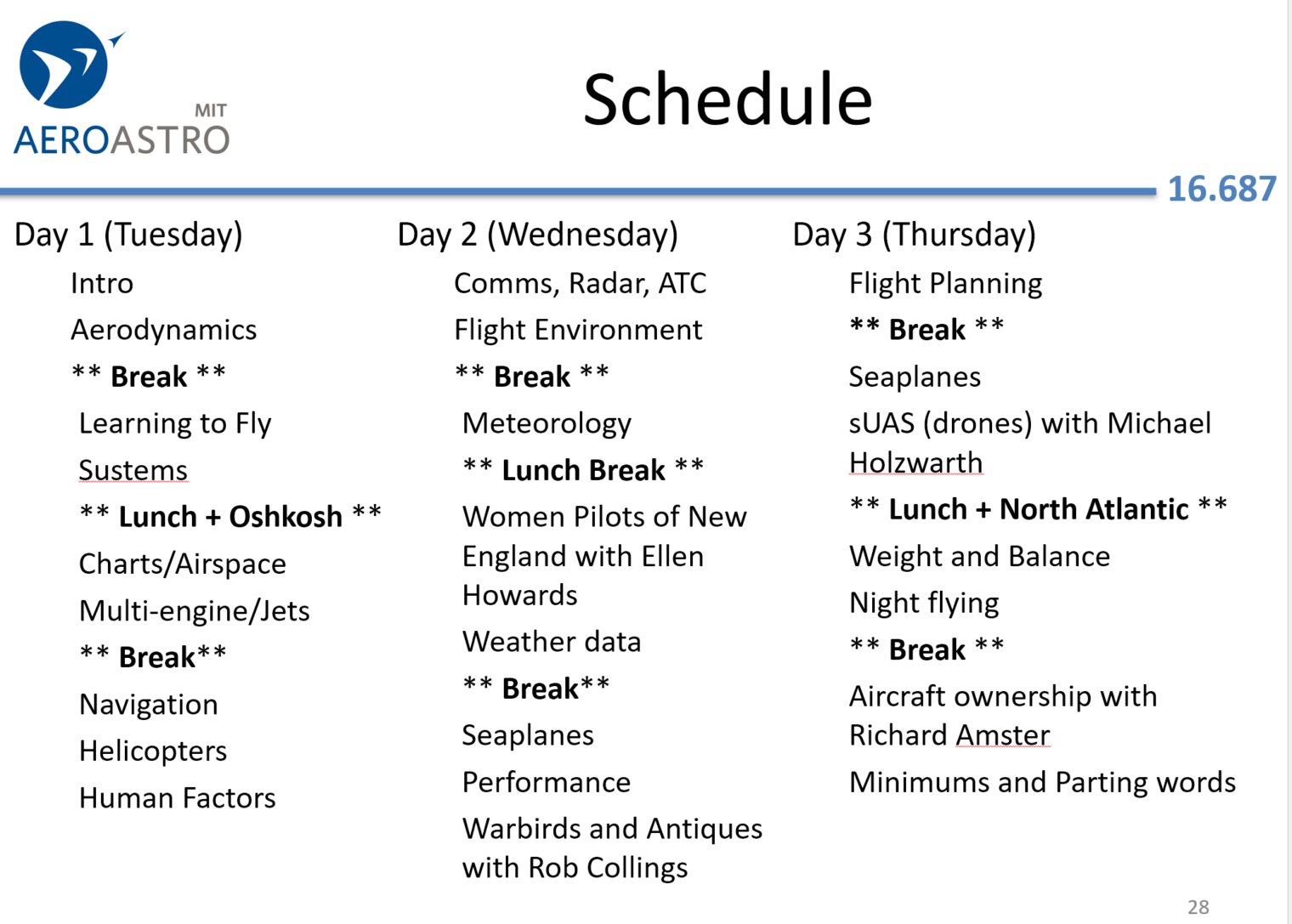

As in previous IAPs, this conflicts with what will probably be an awesome talk on mechanical watches:

(The same folks are going to run 32 students through a 4-hour session (8 at a time) where they’ll actually take a watch apart and put it back together.)

Here’s another AI course that you could do after our FAA Ground School class!

It seems that the MIT IAP tradition has survived, but curiously, the more technical or scientific the class, the later it appeared in the online guide. Also, it seems that some classes aren’t listed at all in the IAP guide, e.g., our own class is on a Course 16 web page but not in the larger guide.
