A friend has a powerful new-ish desktop PC with an AMD Threadripper CPU and a moderately powerful GPU. He installed LM Studio and found that running Llama 2 used 40 GB of RAM and generated only about 1 word per second.

ChatGPT is faster than that and there are millions of users. What’s our best guess as to the hardware and electricity footprint? More than all of the Bitcoin activity?

Just one of Twitter’s server farms, apparently one that could be turned off without compromising the service, held 5,200 racks, each with 30 servers (1U each, plus some disk or switch boxes?). That’s 156,000 physical servers for a service that isn’t computationally intensive on a per-user basis (though, of course, there are a lot of users).
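A quick sketch of the rack arithmetic above. The 30-servers-per-rack figure is the rough count from the paragraph, not an audited inventory, and `total_servers` is just an illustrative helper name.

```python
# Rough server count for one Twitter data hall, using the figures above.
# Assumption: every rack holds the same 30 servers; in practice some
# slots may hold disk shelves or switches instead.
RACKS = 5_200
SERVERS_PER_RACK = 30


def total_servers(racks: int, per_rack: int) -> int:
    """Return the number of physical servers across all racks."""
    return racks * per_rack


if __name__ == "__main__":
    n = total_servers(RACKS, SERVERS_PER_RACK)
    print(f"{n:,} servers")  # prints "156,000 servers"
```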

Why hasn’t OpenAI taken over every physical computer in the Microsoft Azure cloud?

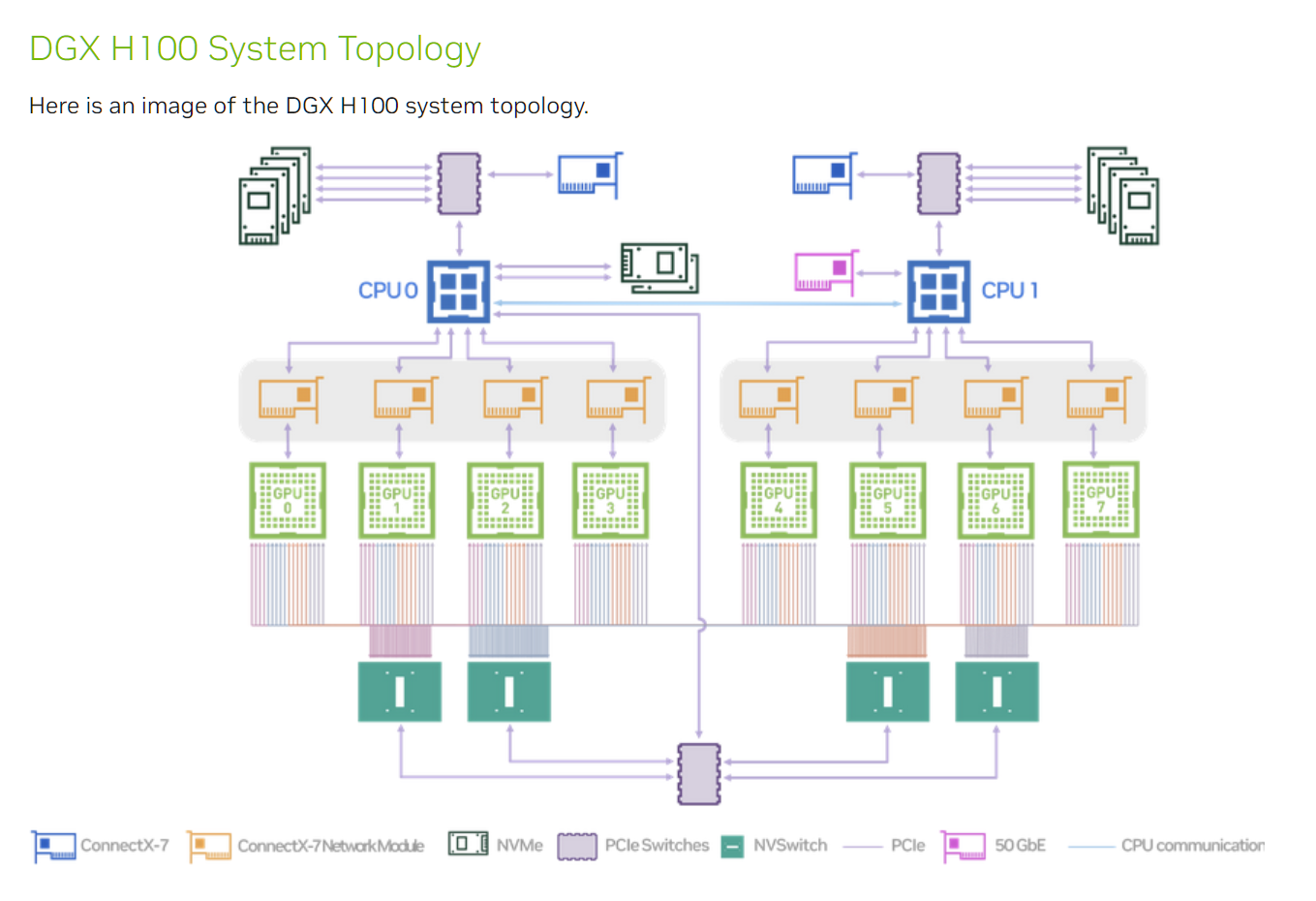

Nvidia porn:

Here are some excerpts from Walter Isaacson’s book *Elon Musk* about the Christmas 2022 move of servers out of Sacramento, intended to save $100 million per year:

It was late at night on December 22, and the meeting in Musk’s tenth-floor Twitter conference room had become tense. He was talking to two Twitter infrastructure managers who had not dealt with him much before, and certainly not when he was in a foul mood. One of them tried to explain the problem. The data-services company that housed one of Twitter’s server farms, located in Sacramento, had agreed to allow them some short-term extensions on their lease so they could begin to move out during 2023 in an orderly fashion. “But this morning,” the nervous manager told Musk, “they came back to us and said that plan was no longer on the table because, and these are their words, they don’t think that we will be financially viable.” The facility was costing Twitter more than $100 million a year. Musk wanted to save that money by moving the servers to one of Twitter’s other facilities, in Portland, Oregon. Another manager at the meeting said that couldn’t be done right away. “We can’t get out safely before six to nine months,” she said in a matter-of-fact tone. “Sacramento still needs to be around to serve traffic.”

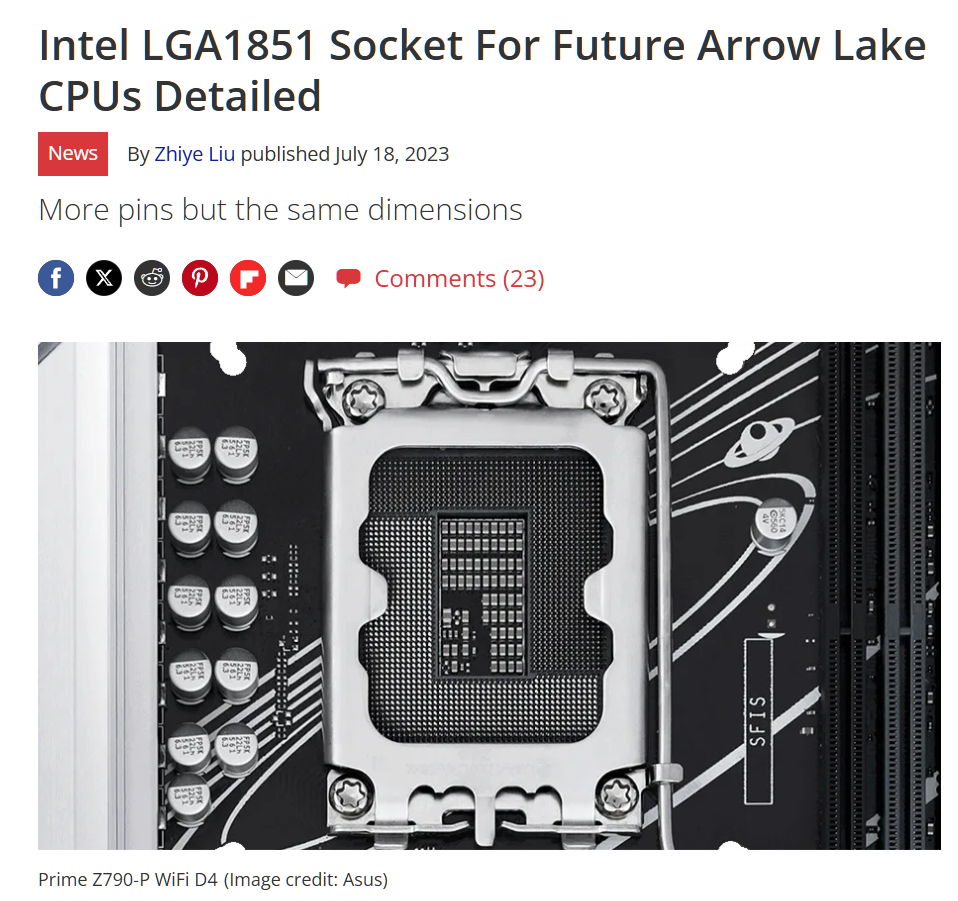

The manager began to explain in detail some of the obstacles to relocating the servers to Portland. “It has different rack densities, different power densities,” she said. “So the rooms need to be upgraded.” She started to give a lot more details, but after a minute, Musk interrupted.

“Do you know the head-explosion emoji?” he asked her. “That’s what my head feels like right now. What a pile of fucking bullshit. Jesus H fucking Christ. Portland obviously has tons of room. It’s trivial to move servers one place to another.” The Twitter managers again tried to explain the constraints. Musk interrupted. “Can you have someone go to our server centers and send me videos of the insides?” he asked. It was three days before Christmas, and the manager promised the video in a week. “No, tomorrow,” Musk ordered. “I’ve built server centers myself, and I can tell if you could put more servers there or not. That’s why I asked if you had actually visited these facilities. If you’ve not been there, you’re just talking bullshit.”

Musk then predicted a two-week move for the 150,000+ servers in 5,200 racks, each rack weighing 2,500 lbs.

“Why don’t we do it right now?” [young cousin] James Musk asked. He and his brother Andrew were flying with Elon from San Francisco to Austin on Friday evening, December 23, the day after the frustrating infrastructure meeting about how long it would take to move the servers out of the Sacramento facility. Avid skiers, they had planned to go by themselves to Tahoe for Christmas, but Elon that day invited them to come to Austin instead. James was reluctant. He was mentally exhausted and didn’t need more intensity, but Andrew convinced him that they should go. So that’s how they ended up on the plane—with Musk, Grimes, and X, along with Steve Davis and Nicole Hollander and their baby—listening to Elon complain about the servers. They were somewhere over Las Vegas when James made his suggestion that they could move them now. It was the type of impulsive, impractical, surge-into-the-breach idea that Musk loved. It was already late evening, but he told his pilot to divert, and they made a loop back up to Sacramento.

“You’ll have to hire a contractor to lift the floor panels,” Alex [a Twitter employee who happened to be there] said. “They need to be lifted with suction cups.” Another set of contractors, he said, would then have to go underneath the floor panels and disconnect the electric cables and seismic rods. Musk turned to his security guard and asked to borrow his pocket knife. Using it, he was able to lift one of the air vents in the floor, which allowed him to pry open the floor panels. He then crawled under the server floor himself, used the knife to jimmy open an electrical cabinet, pulled the server plugs, and waited to see what happened. Nothing exploded. The server was ready to be moved. “Well that doesn’t seem super hard,” he said as Alex the Uzbek and the rest of the gang stared. Musk was totally jazzed by this point. It was, he said with a loud laugh, like a remake of Mission: Impossible, Sacramento edition.

The next day—Christmas Eve—Musk called in reinforcements. Ross Nordeen drove from San Francisco. He stopped at the Apple Store in Union Square and spent $2,000 to buy out the entire stock of AirTags so the servers could be tracked on their journey, and then stopped at Home Depot, where he spent $2,500 on wrenches, bolt-cutters, headlamps, and the tools needed to unscrew the seismic bolts. Steve Davis got someone from The Boring Company to procure a semi truck and line up moving vans. Other enlistees arrived from SpaceX. The server racks were on wheels, so the team was able to disconnect four of them and roll them to the waiting truck. This showed that all fifty-two hundred or so could probably be moved within days. “The guys are kicking ass!” Musk exulted. Other workers at the facility watched with a mix of amazement and horror. Musk and his renegade team were rolling servers out without putting them in crates or swaddling them in protective material, then using store-bought straps to secure them in the truck. “I’ve never loaded a semi before,” James admitted. Ross called it “terrifying.” It was like cleaning out a closet, “but the stuff in it is totally critical.” At 3 p.m., after they had gotten four servers onto the truck, word of the caper reached the top executives at NTT, the company that owned and managed the data center. They issued orders that Musk’s team halt. Musk had the mix of glee and anger that often accompanied one of his manic surges. He called the CEO of the storage division, who told him it was impossible to move server racks without a bevy of experts. “Bullshit,” Musk explained. “We have already loaded four onto the semi.” The CEO then told him that some of the floors could not handle more than five hundred pounds of pressure, so rolling a two-thousand-pound server would cause damage. Musk replied that the servers had four wheels, so the pressure at any one point was only five hundred pounds. 
“The dude is not very good at math,” Musk told the musketeers.

After Christmas, Andrew and James headed back to Sacramento to see how many more servers they could move. They hadn’t brought enough clothes, so they went to Walmart and bought jeans and T-shirts. The NTT supervisors who ran the facility continued to throw up obstacles, some quite understandable. Instead of letting them prop open the door to the vault, for example, they required the musketeers and their crew to go through a retinal security scan each time they went in. One of the supervisors watched them at all times. “She was the most insufferable person I’ve ever worked with,” James says. “But to be fair, I could understand where she was coming from, because we were ruining her holidays, right?”

The moving contractors that NTT wanted them to use charged $200 an hour. So James went on Yelp and found a company named Extra Care Movers that would do the work at one-tenth the cost. The motley company pushed the ideal of scrappiness to its outer limits. The owner had lived on the streets for a while, then had a kid, and he was trying to turn his life around. He didn’t have a bank account, so James ended up using PayPal to pay him. The second day, the crew wanted cash, so James went to a bank and withdrew $13,000 from his personal account. Two of the crew members had no identification, which made it hard for them to sign into the facility. But they made up for it in hustle. “You get a dollar tip for every additional server we move,” James announced at one point. From then on, when they got a new one on a truck, the workers would ask how many they were up to.

By the end of the week they had used all of the available trucks in Sacramento. Despite the area being pummeled by rain, they moved more than seven hundred of the racks in three days. The previous record at that facility had been moving thirty in a month. That still left a lot of servers in the facility, but the musketeers had proven that they could be moved quickly. The rest were handled by the Twitter infrastructure team in January.

In the end, getting everything up and running in Portland took about two months because of incompatible electrical connectors and hard-coded references to Sacramento in Twitter’s code. Elon beat the 6-9 month estimate, though not by 6-9 months, and he admitted that rushing the move was a mistake.
